Jacobian methods for inverse kinematics and planning

Slides from Stefan Schaal

USC, Max Planck


The Inverse Kinematics Problem

Direct Kinematics: $x = f(\theta)$

Inverse Kinematics: $\theta = f^{-1}(x)$

Possible Problems of Inverse Kinematics

Infinitely many solutions

Multiple solutions

No solutions

No closed-form (analytical) solution


Analytical (Algebraic) Solutions

Analytically invert the direct kinematics equations and enumerate all solution branches

Note: this only works if the number of constraints is the same as the number of degrees-of-freedom of the robot

What if not?

Iterative solutions

Invent artificial constraints

Examples

2DOF arm

See S&S textbook 2.11 ff


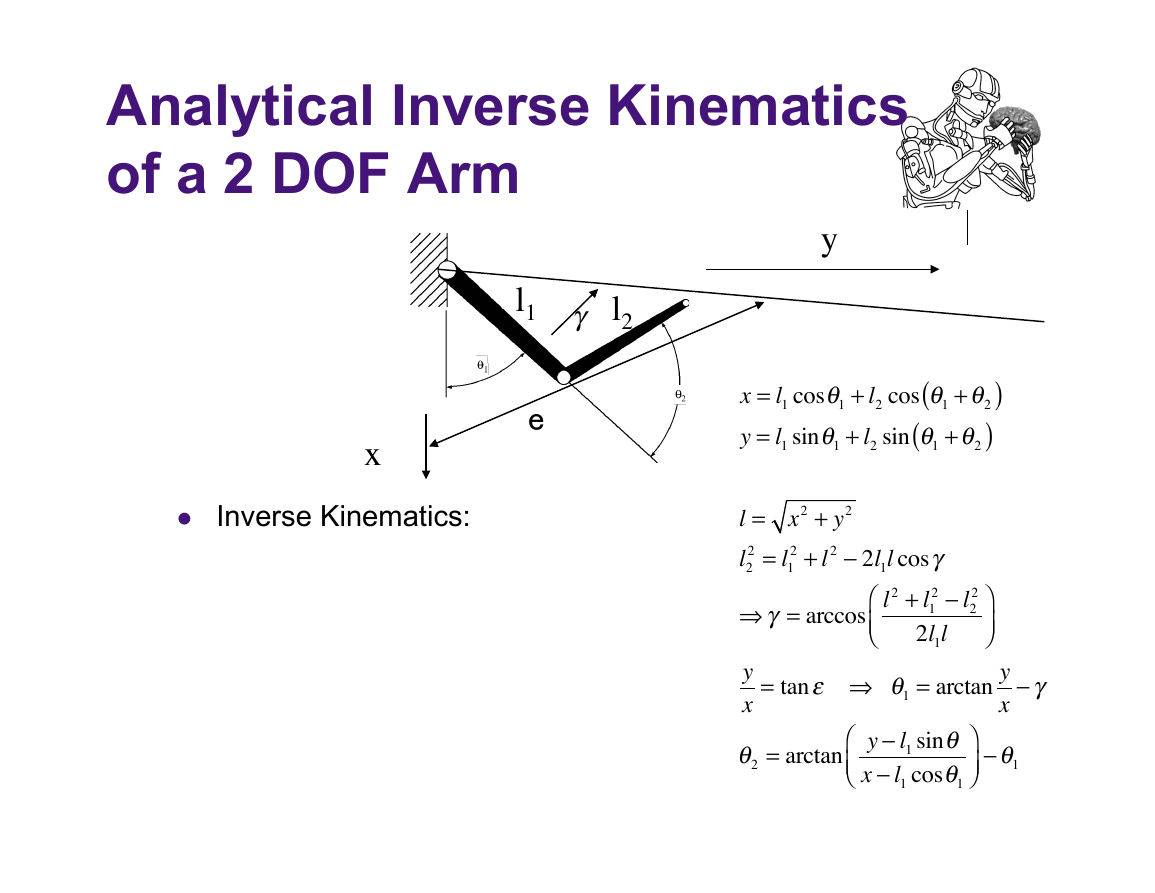

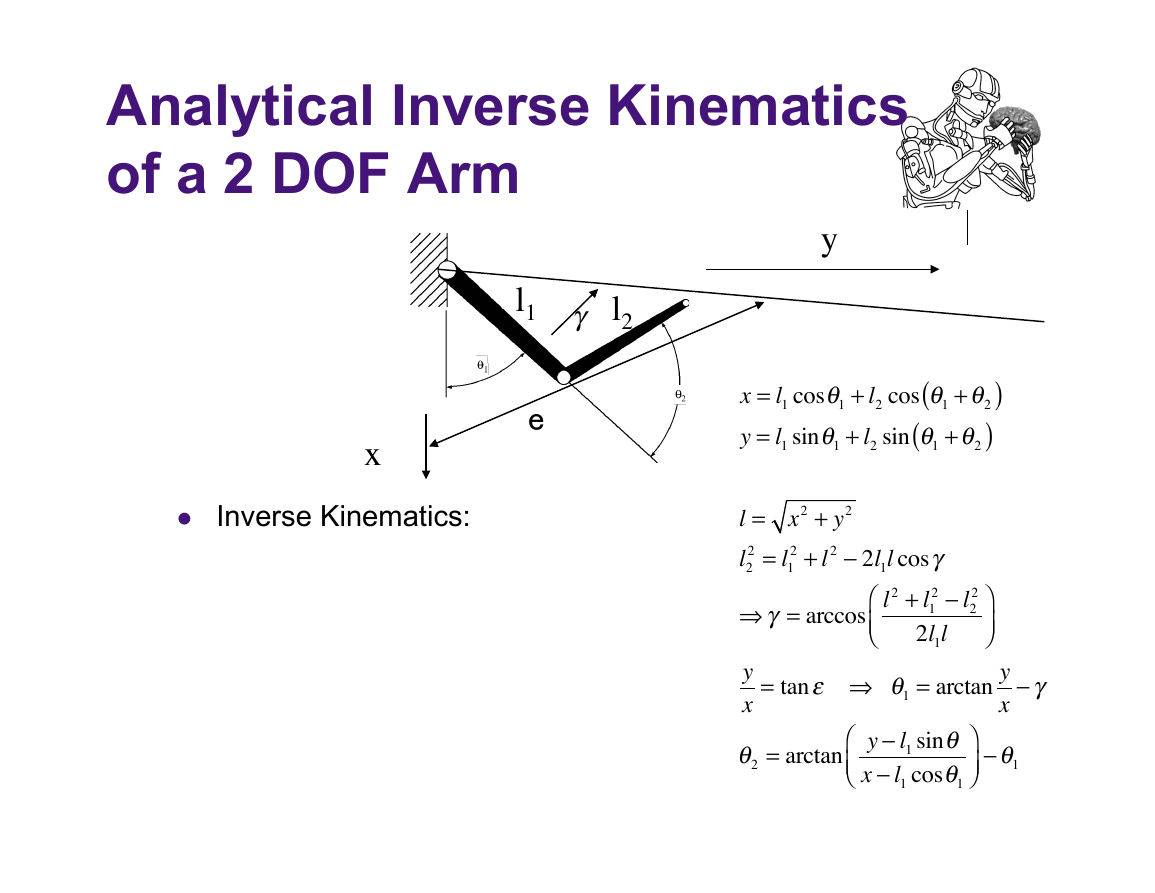

Analytical Inverse Kinematics of a 2 DOF Arm

[Figure: planar 2 DOF arm in the x-y plane with link lengths l1 and l2 and the auxiliary angles γ and ε]

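Inverse Kinematics:

$$x = l_1 \cos\theta_1 + l_2 \cos(\theta_1 + \theta_2)$$

$$y = l_1 \sin\theta_1 + l_2 \sin(\theta_1 + \theta_2)$$

$$l = \sqrt{x^2 + y^2}$$

$$l_2^2 = l_1^2 + l^2 - 2 l_1 l \cos\gamma \;\Rightarrow\; \gamma = \arccos\left(\frac{l^2 + l_1^2 - l_2^2}{2 l_1 l}\right)$$

$$\frac{y}{x} = \tan\varepsilon \;\Rightarrow\; \theta_1 = \arctan\left(\frac{y}{x}\right) - \gamma$$

$$\theta_2 = \arctan\left(\frac{y - l_1 \sin\theta_1}{x - l_1 \cos\theta_1}\right) - \theta_1$$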
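The closed-form solution for the 2 DOF arm can be tried out numerically. The following is a minimal sketch, not part of the original slides: the function names are hypothetical, and `atan2` is swapped in for the plain arctangent so that the quadrant of the angle is handled (an assumption beyond the formulas as stated). It returns only the branch with θ1 = ε − γ.

```python
import math

def forward_kinematics(theta1, theta2, l1, l2):
    """End-effector position (x, y) of the planar 2 DOF arm."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y

def inverse_kinematics(x, y, l1, l2):
    """Joint angles for the branch theta1 = epsilon - gamma."""
    l = math.hypot(x, y)  # distance from the base to the target
    if l == 0.0 or l > l1 + l2 or l < abs(l1 - l2):
        raise ValueError("target at the base or outside the reachable workspace")
    # Law of cosines; clamp to guard against rounding just outside [-1, 1].
    cos_gamma = (l**2 + l1**2 - l2**2) / (2.0 * l1 * l)
    gamma = math.acos(max(-1.0, min(1.0, cos_gamma)))
    epsilon = math.atan2(y, x)  # assumption: atan2 instead of arctan(y / x)
    theta1 = epsilon - gamma
    theta2 = math.atan2(y - l1 * math.sin(theta1),
                        x - l1 * math.cos(theta1)) - theta1
    return theta1, theta2
```

Feeding the returned angles back into `forward_kinematics` should reproduce the target, which is a quick way to check the branch. The mirrored branch follows from θ1 = ε + γ.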


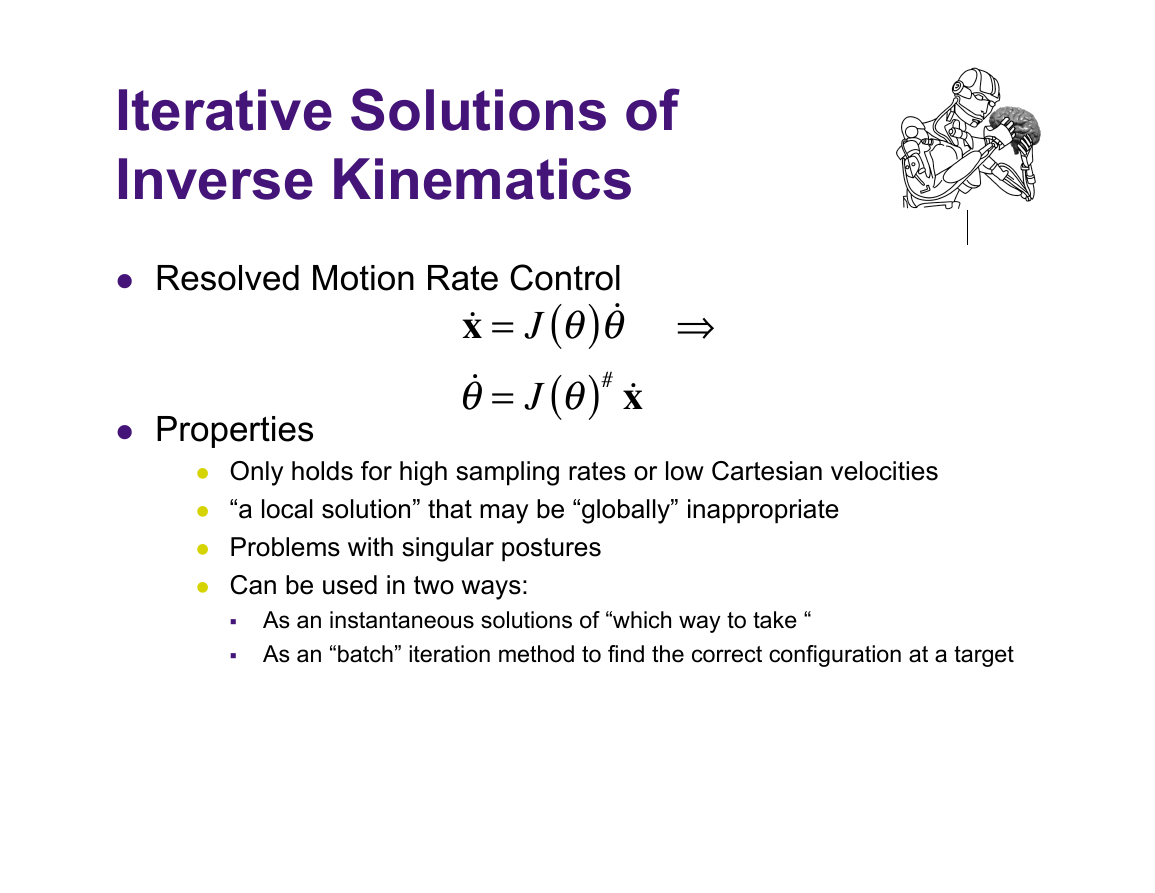

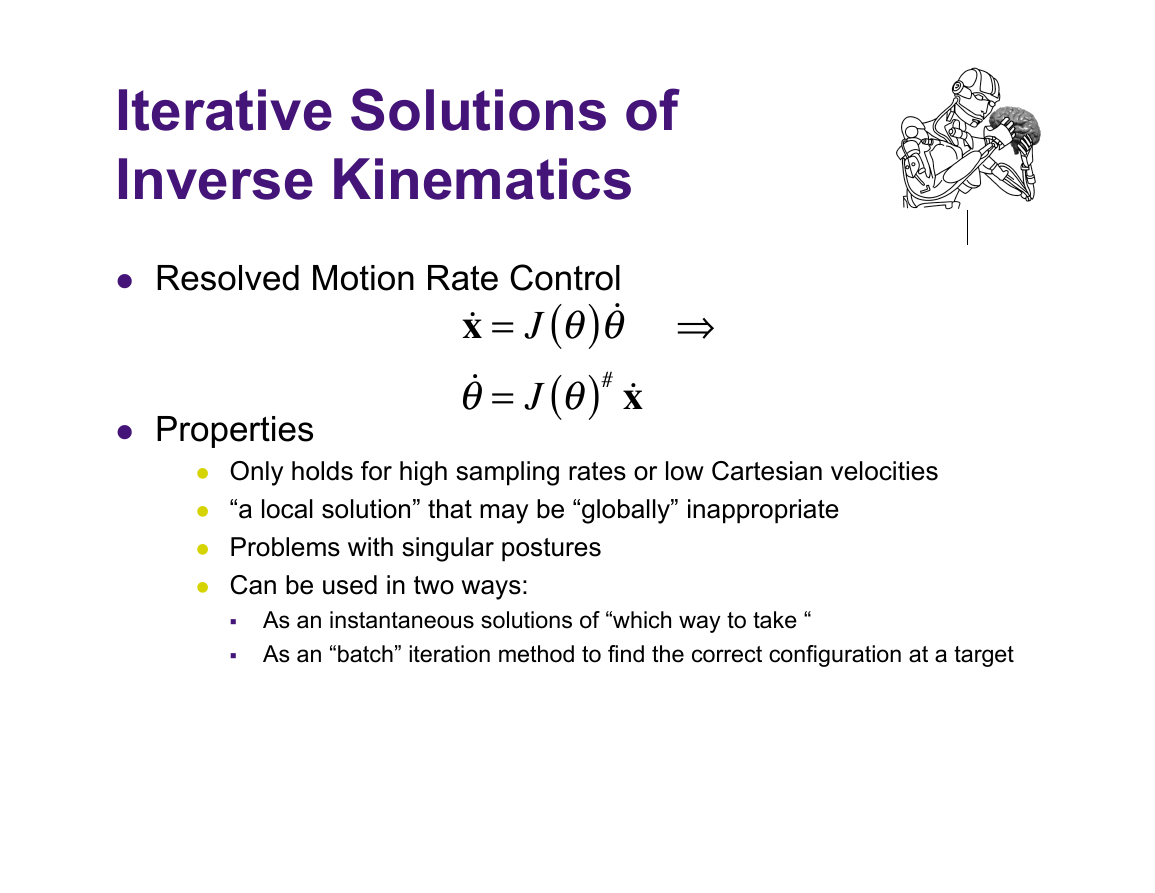

Iterative Solutions of Inverse Kinematics

Resolved Motion Rate Control

$$\dot{x} = J(\theta)\,\dot{\theta} \;\Rightarrow\; \dot{\theta} = J(\theta)^{\#}\,\dot{x}$$

Properties

Only holds for high sampling rates or low Cartesian velocities

“a local solution” that may be “globally” inappropriate

Problems with singular postures

Can be used in two ways:

As an instantaneous solution of “which way to take”

As a “batch” iteration method to find the correct configuration at a target
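The “batch” use of resolved motion rate control can be sketched as follows. This is an illustrative example under stated assumptions, not the slides' implementation: the planar 2 DOF arm, the link lengths, the function names, and the step limit `max_step` are all hypothetical, and `J#` is taken to be the Moore-Penrose pseudo-inverse computed with `numpy.linalg.pinv`. The Cartesian step is capped because the linearization only holds for small displacements.

```python
import numpy as np

L1, L2 = 1.0, 1.0  # hypothetical link lengths

def fk(theta):
    """Direct kinematics x = f(theta) of a planar 2 DOF arm."""
    t1, t2 = theta
    return np.array([L1 * np.cos(t1) + L2 * np.cos(t1 + t2),
                     L1 * np.sin(t1) + L2 * np.sin(t1 + t2)])

def jacobian(theta):
    """Analytical Jacobian J(theta) = d f / d theta."""
    t1, t2 = theta
    return np.array([
        [-L1 * np.sin(t1) - L2 * np.sin(t1 + t2), -L2 * np.sin(t1 + t2)],
        [ L1 * np.cos(t1) + L2 * np.cos(t1 + t2),  L2 * np.cos(t1 + t2)],
    ])

def rmrc_solve(theta0, x_target, max_step=0.05, tol=1e-6, max_iter=1000):
    """Iterate delta_theta = pinv(J) @ delta_x until the target is reached."""
    theta = np.array(theta0, dtype=float)
    for _ in range(max_iter):
        dx = x_target - fk(theta)
        dist = np.linalg.norm(dx)
        if dist < tol:
            break
        # Keep each Cartesian step small so the local linear model stays valid.
        if dist > max_step:
            dx = dx * (max_step / dist)
        theta = theta + np.linalg.pinv(jacobian(theta)) @ dx
    return theta

theta_solution = rmrc_solve([0.3, 0.5], np.array([1.0, 1.0]))
```

Near singular postures the pseudo-inverse produces very large joint steps, which is one face of the singularity problem listed above.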


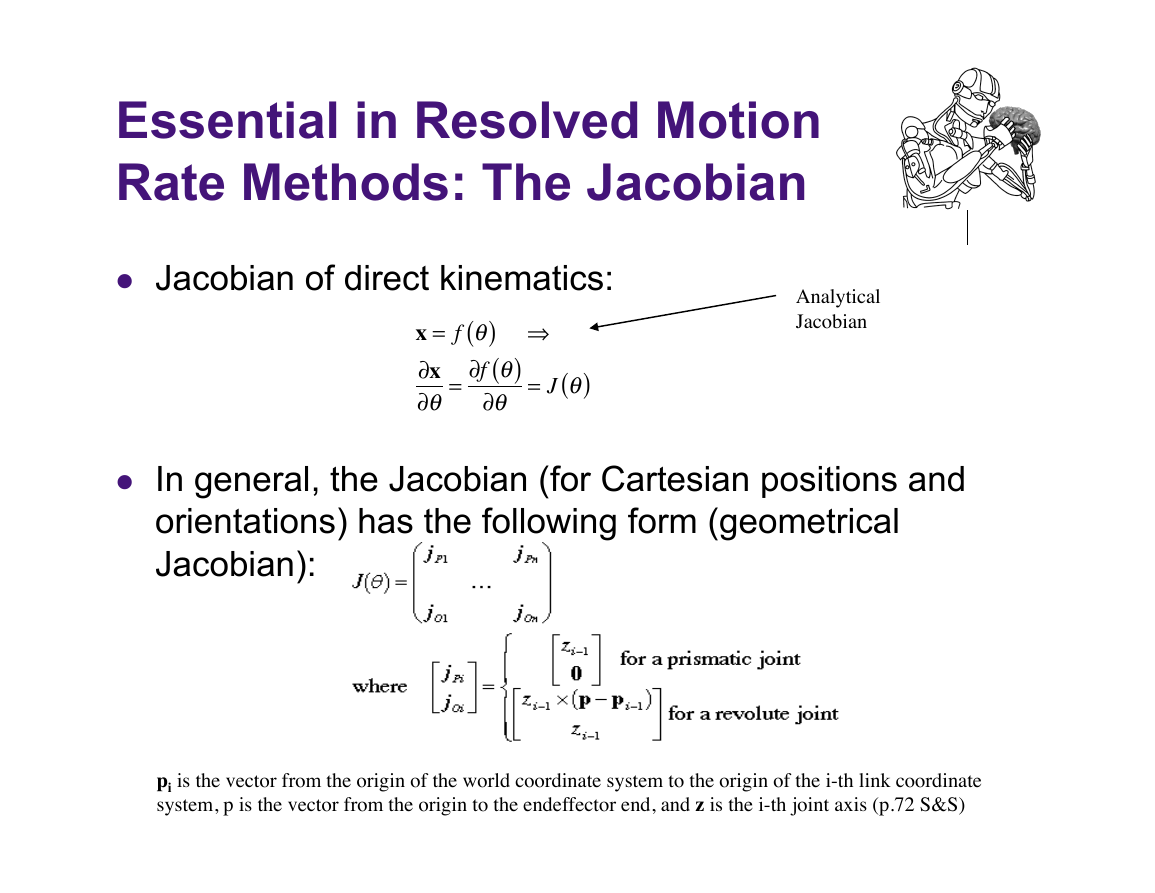

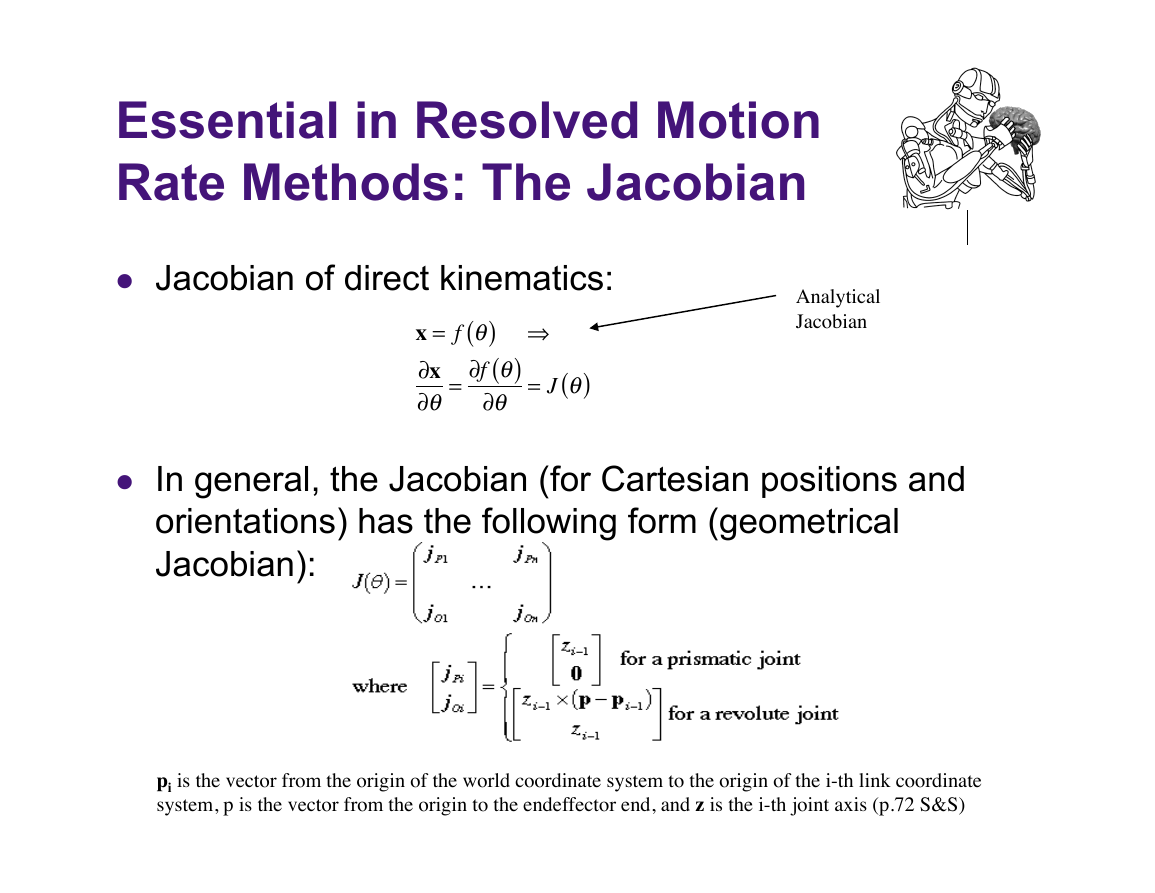

Essential in Resolved Motion Rate Methods: The Jacobian

Jacobian of direct kinematics:

$$x = f(\theta) \;\Rightarrow\; \frac{\partial x}{\partial \theta} = \frac{\partial f(\theta)}{\partial \theta} = J(\theta)$$

Analytical Jacobian

In general, the Jacobian (for Cartesian positions and orientations) has the following form (geometrical Jacobian):

$p_i$ is the vector from the origin of the world coordinate system to the origin of the i-th link coordinate system, $p$ is the vector from the origin to the end-effector, and $z_i$ is the i-th joint axis (S&S, p. 72)
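For revolute joints, a commonly used form of the i-th column of the geometric Jacobian is $[\,z_i \times (p - p_i);\; z_i\,]$. The sketch below assumes that form, assumes revolute joints only, and assumes each $p_i$ lies on its joint axis; the function name and the planar test arm are hypothetical. Index conventions differ between textbooks, so this should be compared against the reference before use.

```python
import numpy as np

def geometric_jacobian(joint_origins, joint_axes, p_end):
    """6 x n geometric Jacobian, revolute joints only (assumed form).

    Column i stacks the linear part z_i x (p - p_i) on top of the
    angular part z_i.
    """
    columns = []
    for p_i, z_i in zip(joint_origins, joint_axes):
        linear = np.cross(z_i, p_end - p_i)
        columns.append(np.concatenate([linear, z_i]))
    return np.array(columns).T

# Hypothetical planar 2 DOF arm: both joints rotate about the world z axis.
l1, l2 = 1.0, 1.0
t1, t2 = 0.3, 0.5
z = np.array([0.0, 0.0, 1.0])
p1 = np.zeros(3)
p2 = np.array([l1 * np.cos(t1), l1 * np.sin(t1), 0.0])
p = p2 + np.array([l2 * np.cos(t1 + t2), l2 * np.sin(t1 + t2), 0.0])

J = geometric_jacobian([p1, p2], [z, z], p)
```

For this arm the first two rows of `J` coincide with the analytical Jacobian of the planar direct kinematics, and the last row is all ones because both joints rotate about z.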


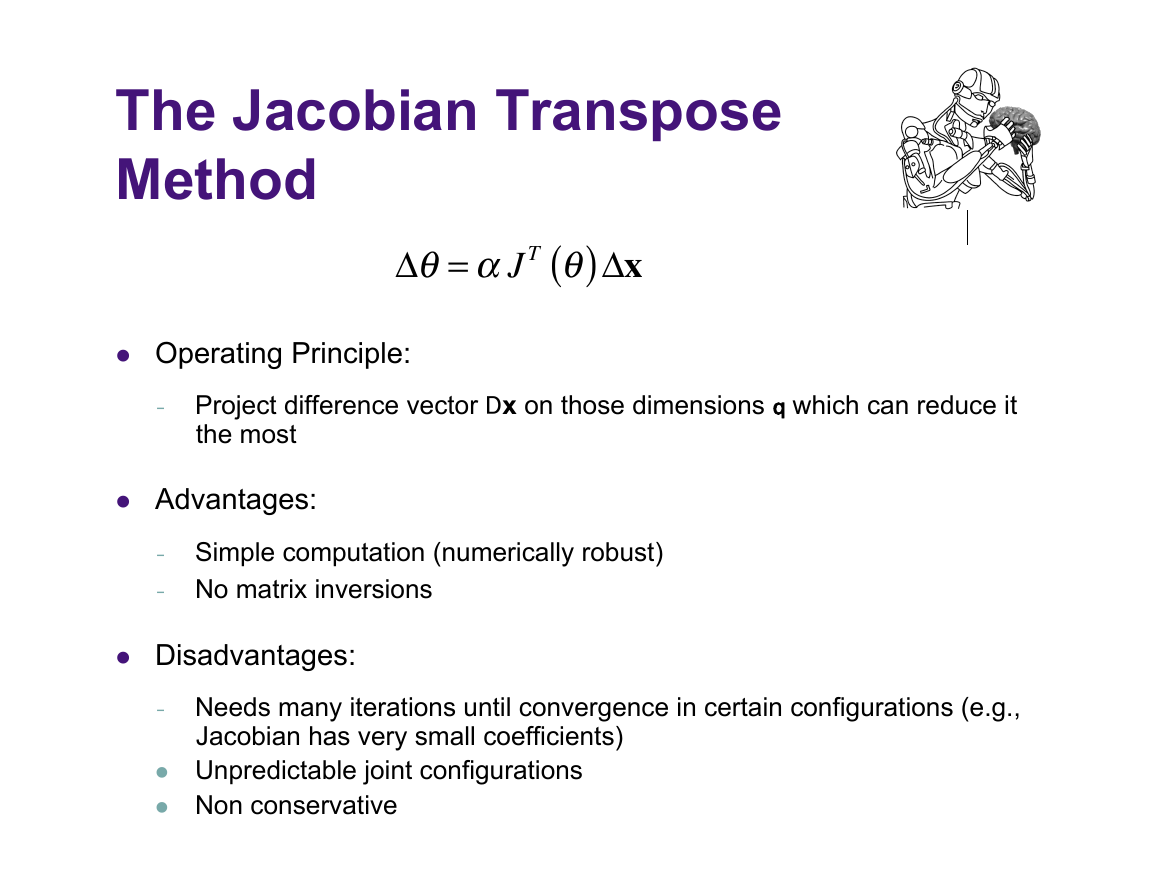

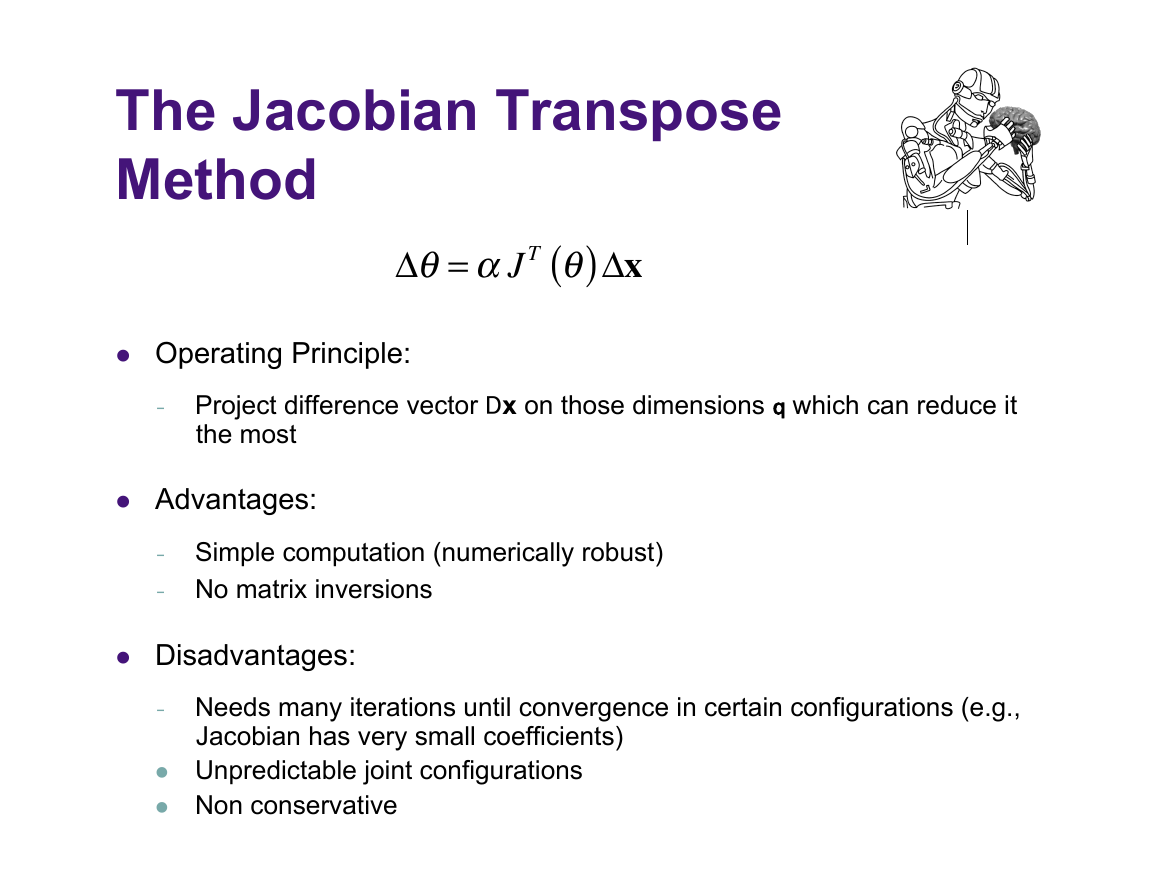

The Jacobian Transpose Method

$$\Delta\theta = \alpha J^T(\theta)\,\Delta x$$

Operating Principle:

- Project difference vector Δx on those dimensions θ which can reduce it the most

Advantages:

- Simple computation (numerically robust)

- No matrix inversions

Disadvantages:

- Needs many iterations until convergence in certain configurations (e.g., Jacobian has very small coefficients)

- Unpredictable joint configurations

- Non-conservative
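The update rule of the Jacobian transpose method can be sketched in a few lines. This is an illustrative example only: the planar 2 DOF arm, the link lengths, the step size `alpha`, and the function names are assumptions, not taken from the slides.

```python
import numpy as np

L1, L2 = 1.0, 1.0  # hypothetical link lengths

def fk(theta):
    """Direct kinematics of a planar 2 DOF arm."""
    t1, t2 = theta
    return np.array([L1 * np.cos(t1) + L2 * np.cos(t1 + t2),
                     L1 * np.sin(t1) + L2 * np.sin(t1 + t2)])

def jacobian(theta):
    """Analytical Jacobian of fk."""
    t1, t2 = theta
    return np.array([
        [-L1 * np.sin(t1) - L2 * np.sin(t1 + t2), -L2 * np.sin(t1 + t2)],
        [ L1 * np.cos(t1) + L2 * np.cos(t1 + t2),  L2 * np.cos(t1 + t2)],
    ])

def jacobian_transpose_solve(theta0, x_target, alpha=0.1, tol=1e-6,
                             max_iter=10000):
    """Iterate delta_theta = alpha * J^T @ delta_x; no matrix inversion."""
    theta = np.array(theta0, dtype=float)
    for iteration in range(max_iter):
        dx = x_target - fk(theta)
        if np.linalg.norm(dx) < tol:
            return theta, iteration
        theta = theta + alpha * jacobian(theta).T @ dx
    return theta, max_iter

theta_jt, iterations_used = jacobian_transpose_solve([0.3, 0.5],
                                                     np.array([1.0, 1.0]))
```

Printing `iterations_used` gives a feel for the convergence speed; a too-large `alpha` makes the iteration diverge, while a small one makes it slow.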


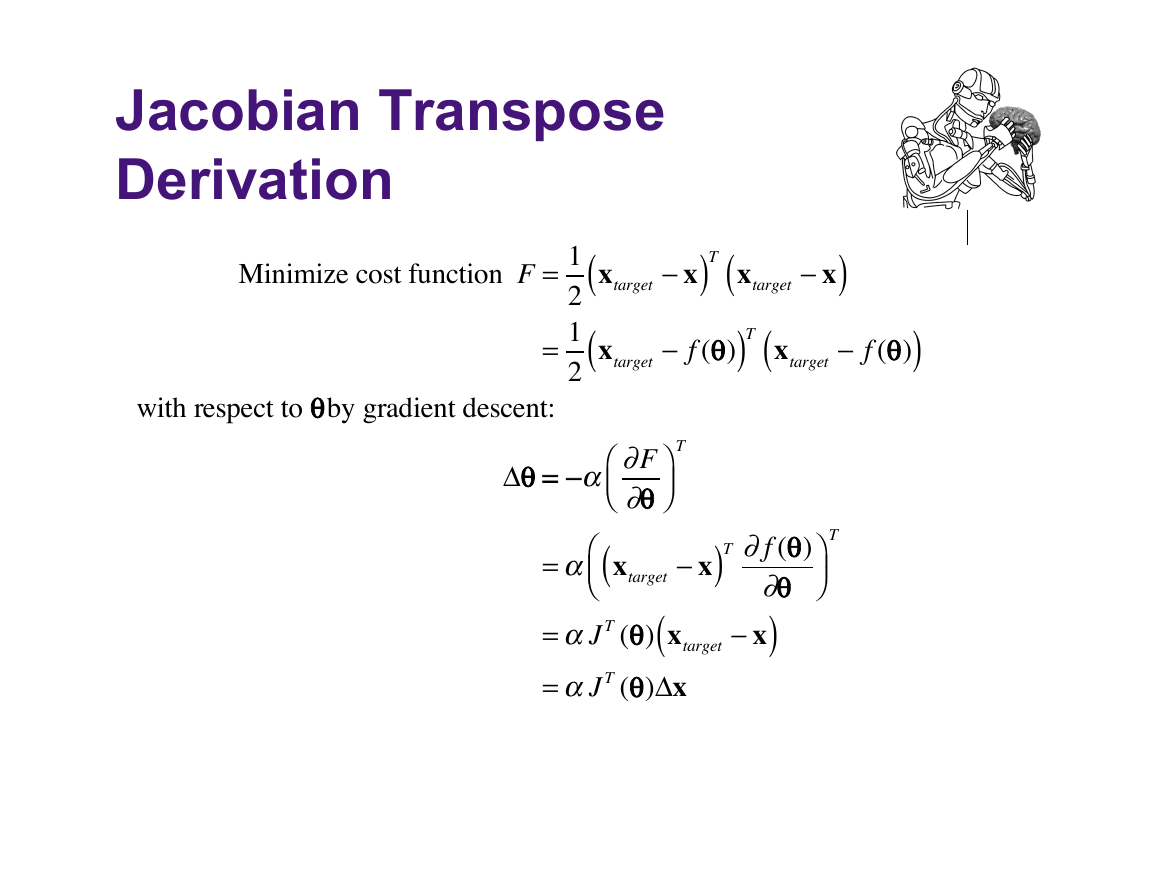

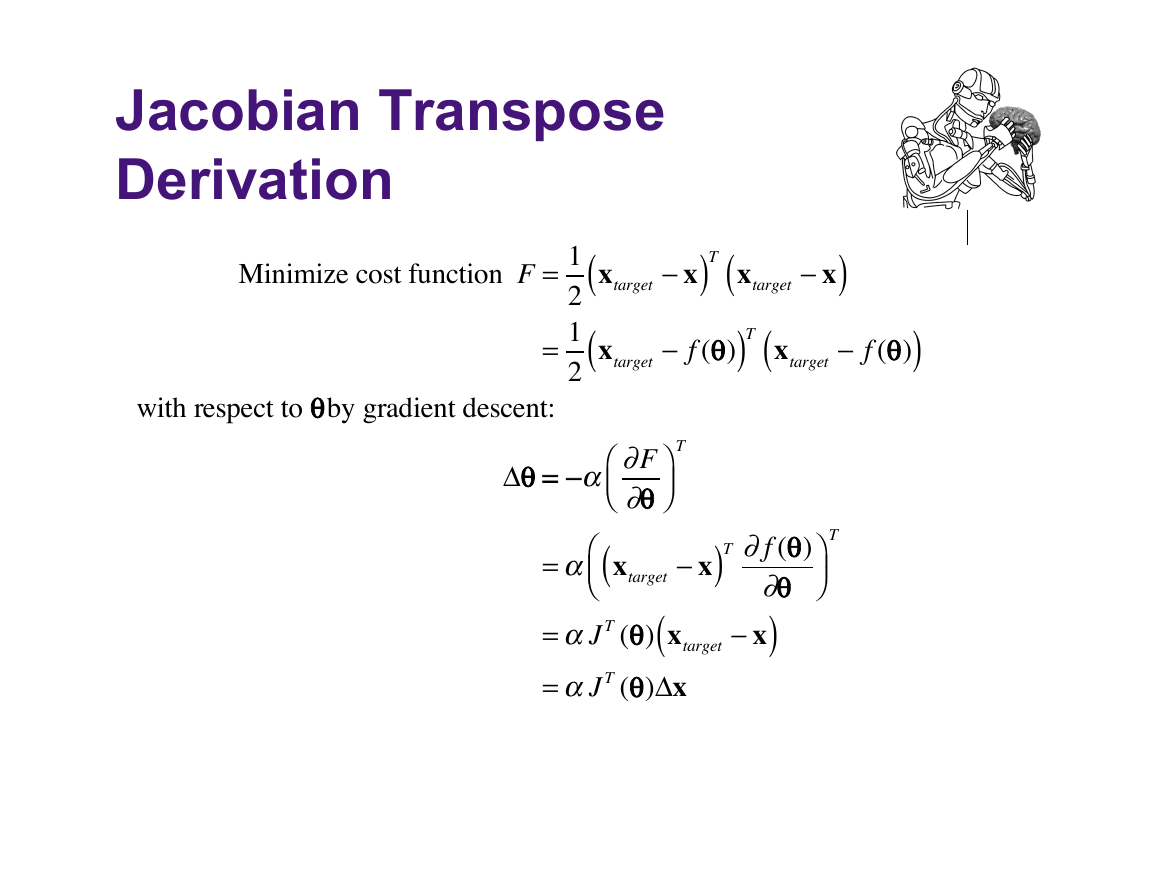

Jacobian Transpose Derivation

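Minimize cost function

$$F = \frac{1}{2}\left(x_{target} - x\right)^T \left(x_{target} - x\right) = \frac{1}{2}\left(x_{target} - f(\theta)\right)^T \left(x_{target} - f(\theta)\right)$$

with respect to θ by gradient descent:

$$\Delta\theta = -\alpha\left(\frac{\partial F}{\partial \theta}\right)^T = \alpha\left(\left(x_{target} - x\right)^T \frac{\partial f(\theta)}{\partial \theta}\right)^T = \alpha J^T(\theta)\left(x_{target} - x\right) = \alpha J^T(\theta)\,\Delta x$$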
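The key step of the derivation, that the gradient of F with respect to θ equals $-J^T(\theta)\,\Delta x$, can be checked numerically with finite differences. The sketch below is an illustration under assumptions: the planar 2 DOF arm, the chosen configuration and target, and all function names are hypothetical.

```python
import numpy as np

L1, L2 = 1.0, 1.0  # hypothetical link lengths

def fk(theta):
    """Direct kinematics of a planar 2 DOF arm."""
    t1, t2 = theta
    return np.array([L1 * np.cos(t1) + L2 * np.cos(t1 + t2),
                     L1 * np.sin(t1) + L2 * np.sin(t1 + t2)])

def jacobian(theta):
    """Analytical Jacobian of fk."""
    t1, t2 = theta
    return np.array([
        [-L1 * np.sin(t1) - L2 * np.sin(t1 + t2), -L2 * np.sin(t1 + t2)],
        [ L1 * np.cos(t1) + L2 * np.cos(t1 + t2),  L2 * np.cos(t1 + t2)],
    ])

def cost(theta, x_target):
    """F = 1/2 (x_target - f(theta))^T (x_target - f(theta))."""
    dx = x_target - fk(theta)
    return 0.5 * dx @ dx

def numerical_gradient(theta, x_target, h=1e-6):
    """Central finite-difference approximation of dF/dtheta."""
    grad = np.zeros_like(theta)
    for i in range(len(theta)):
        step = np.zeros_like(theta)
        step[i] = h
        grad[i] = (cost(theta + step, x_target)
                   - cost(theta - step, x_target)) / (2.0 * h)
    return grad

theta = np.array([0.3, 0.5])
x_target = np.array([1.0, 1.0])
analytic_gradient = -jacobian(theta).T @ (x_target - fk(theta))
numeric_gradient = numerical_gradient(theta, x_target)
```

The two gradients should agree up to finite-difference error, so a gradient descent step `-alpha * gradient` is the same as `alpha * J.T @ dx`.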
