10

Automatic White Balancing in Digital Photography

Edmund Y. Lam and George S. K. Fung

10.1 Introduction
10.2 Human Visual System and Color Theory
10.2.1 Illumination
10.2.2 Object
10.2.3 Color Stimulus
10.2.4 Human Visual System
10.2.5 Color Matching
10.3 Challenges in Automatic White Balancing
10.4 Automatic White Balancing Algorithms
10.4.1 Gray World
10.4.2 White Patch
10.4.3 Iterative White Balancing
10.4.4 Illuminant Voting
10.4.5 Color by Correlation
10.4.6 Other Methods
10.5 Implementations and Quality Evaluations
10.6 Conclusion
Acknowledgments
References

10.1 Introduction

Color constancy is one of the most amazing features of the human visual system. When

we look at objects under different illuminations, their colors stay relatively constant. This

helps humans to identify objects conveniently. While the precise physiological mechanism

is not fully known, it has been postulated that the eyes are responsible for capturing different

wavelengths of the light reflected by an object, and the brain attempts to “discount” the

contribution of the illumination so that the color perception matches more closely with the

object reflectance, and therefore is mostly constant under different illuminations [1].

A similar behavior is highly desirable in digital still and video cameras. This is achieved via white balancing, an image processing step employed in the digital camera imaging pipeline (a detailed description of the camera imaging pipeline can be found in Chapters 1 and 3) to adjust the coloration of images captured under different illuminations [2], [3].

© 2009 by Taylor & Francis Group, LLC


Single-Sensor Imaging: Methods and Applications for Digital Cameras

This is because the ambient light has a significant effect on the color stimulus. If the color temperature of a light source is low, the object being captured will appear reddish. An example is the domestic tungsten lamp, whose color temperature is around 3000 kelvins (K). On the other hand, with a high color temperature light source, the object will appear bluish. This includes typical daylight, with a color temperature above 6000 K [4].
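The link between color temperature and a reddish or bluish cast can be illustrated with a small sketch. It assumes the light source behaves as an ideal blackbody radiator described by Planck's law, which is only an approximation for a tungsten lamp and a rougher one for daylight. The sample wavelengths (650 nm for red, 450 nm for blue) and the function names are illustrative choices, not values taken from the chapter.

```python
import math

H = 6.62607015e-34    # Planck constant (J s)
C = 2.99792458e8      # speed of light (m/s)
K_B = 1.380649e-23    # Boltzmann constant (J/K)


def planck_radiance(wavelength_nm, temperature_k):
    """Blackbody spectral radiance at one wavelength (W sr^-1 m^-3)."""
    lam = wavelength_nm * 1e-9
    exponent = H * C / (lam * K_B * temperature_k)
    return (2.0 * H * C ** 2) / (lam ** 5 * math.expm1(exponent))


def red_to_blue_ratio(temperature_k, red_nm=650.0, blue_nm=450.0):
    """Radiance at a red wavelength divided by radiance at a blue wavelength."""
    return planck_radiance(red_nm, temperature_k) / planck_radiance(blue_nm, temperature_k)


# Assumed example temperatures: a tungsten-like source and a daylight-like source.
for t in (3000.0, 6500.0):
    print(f"{t:.0f} K: red/blue radiance ratio = {red_to_blue_ratio(t):.2f}")
```

Under this assumption the ratio is above one at 3000 K (more red than blue energy) and below one at 6500 K, which matches the qualitative description in the text.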

Various manual and automatic methods exist for white balancing. For the former, the camera manufacturer often provides predefined settings for typical lighting conditions such as sunlight, cloudy, fluorescent, or incandescent. The user only needs to make the selection, and the camera will compute the adjustment automatically. Higher-end cameras, such as prosumer (professional-consumer) and single-lens reflex (SLR) digital cameras, even allow users to define their own white balance reference. Most amateur users, however, prefer automatic white balancing. The camera then needs to be able to dynamically detect the color temperature of the ambient light and compensate for its effects, or determine from the image content the necessary color correction due to the illumination. The automatic white balancing (AWB) algorithm employed in the camera imaging pipeline is thus critical to the color appearance of digital pictures. This chapter is devoted to a study of such algorithms commonly used in digital photography.
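As a rough illustration of the user-defined white balance reference mentioned above, the sketch below derives per-channel gains from a photographed neutral card and applies them to a pixel. It assumes a simple diagonal (per-channel scaling) correction with green as the anchor channel. That model, the function names, and the sample values are assumptions made for illustration, not the method of any particular camera.

```python
def gains_from_white_reference(reference_rgb):
    """Per-channel gains that map the reference patch to a neutral gray.

    Green is used as the anchor channel, so its gain is 1.
    """
    r, g, b = reference_rgb
    if min(r, g, b) <= 0:
        raise ValueError("reference channels must be positive")
    return (g / r, 1.0, g / b)


def apply_gains(pixel_rgb, gains, max_value=255.0):
    """Scale each channel by its gain and clip to the valid range."""
    return tuple(min(max_value, c * k) for c, k in zip(pixel_rgb, gains))


# Hypothetical reading of a white card photographed under a warm, reddish light.
white_card = (220.0, 180.0, 120.0)
gains = gains_from_white_reference(white_card)
balanced_card = apply_gains(white_card, gains)
print(gains)
print(balanced_card)
```

After correction the three channels of the reference card are equal, so the card is rendered as neutral. Automatic methods have to estimate comparable gains without being given such a reference.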

We organize this chapter as follows. In Section 10.2, we first briefly review the human visual system and the theory of color, which are necessary background for our discussion of AWB strategies in cameras. We also introduce terminology commonly used in discussing color. This is followed by a look at the physical principles of color formation on an electronic sensor. The challenges that exist in digital photography are described in Section 10.3. Then, in Section 10.4, we describe a few representative AWB algorithms. Our goal is not to be encyclopedic, which would be nearly impossible considering the wide array of methods in existence and the proprietary nature of some of these schemes, but to illustrate the main principles behind the major approaches. In Section 10.5, experimental results of some of these representative algorithms are presented to evaluate and compare the efficacy of various techniques. Some concluding remarks are given in Section 10.6.

10.2 Human Visual System and Color Theory

It is instructive for us to begin the discussion on color with the physics of light and the

physiology of the human visual system. The primary reason is that humans are typically

the end-user and the judge of the images in our camera systems. A secondary reason is

that the eye is itself a complex and beautifully made organ that acts as an image capturing

device for our brain. In many cases, we model our camera system design on the natural

design of our eyes.

Visible light occupies a small section of the electromagnetic spectrum, which we call

the visible spectrum. In the seventeenth century, Sir Isaac Newton (1642–1727) was the

first to demonstrate that when a white beam entered a prism, due to the law of refraction of

light the exit beam would consist of shades of different colors. Further experimentation and


(a)

(c)

(b)

(d)

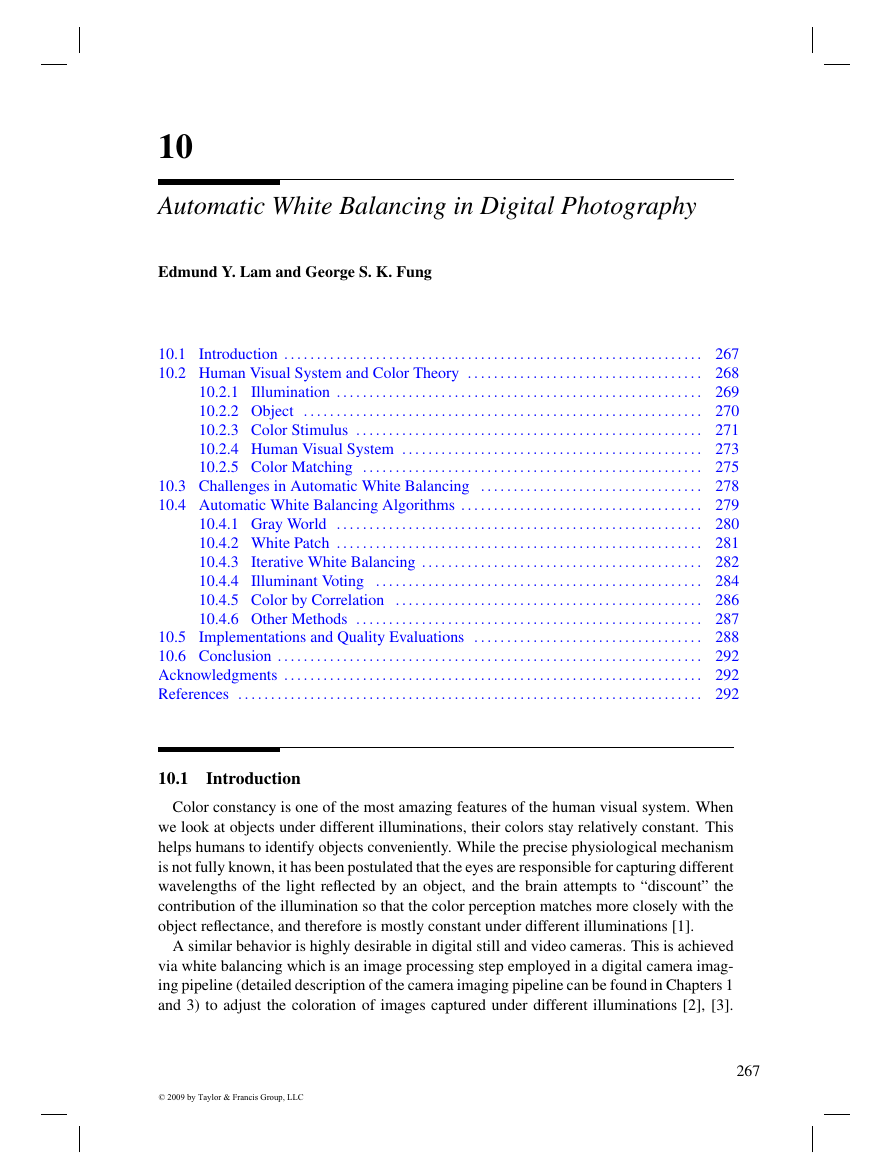

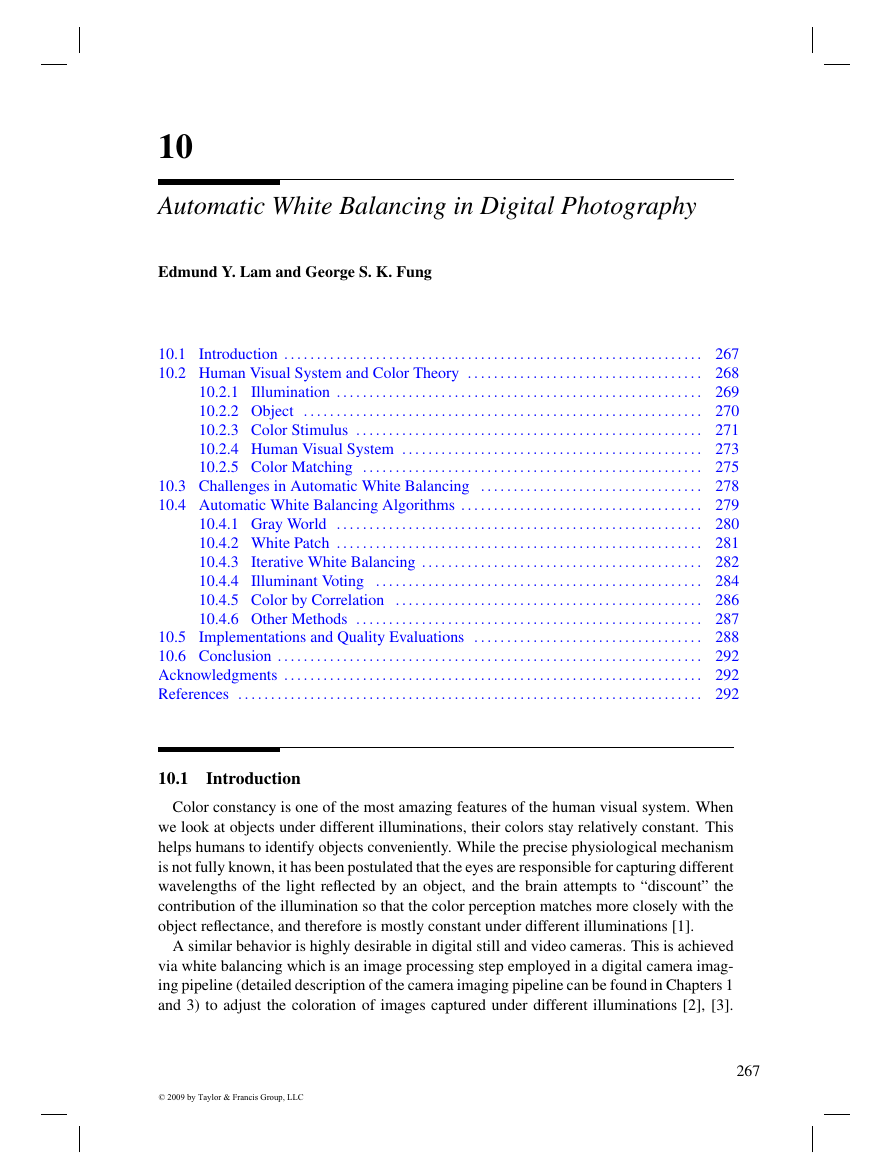

FIGURE 10.1

Spectral power distribution of various common types of illuminations: (a) sunlight, (b) tungsten light, (c)

fluorescent light, and (d) light-emitting diode (LED).

as a linear combination of known basis functions $I_j(\lambda)$ with

$$I(\lambda) = \sum_{j=1}^{m} \alpha_j I_j(\lambda), \tag{10.1}$$

where, for example, three basis functions (corresponding to $m = 3$) are sufficient to represent standard daylights [5]. This property can be used in the design of AWB algorithms.
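To make Equation 10.1 concrete, the following sketch recovers the weights $\alpha_j$ of a sampled illumination by least squares, given $m = 3$ basis functions. The basis shapes used here (a constant, a ramp, and a parabola) are hypothetical placeholders rather than measured daylight basis functions, and the helper names are illustrative.

```python
def solve_linear_system(a, b):
    """Solve a x = b for a small square system by Gaussian elimination."""
    n = len(b)
    # Augmented matrix, copied so that the inputs are not modified.
    m = [list(a[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - tail) / m[row][row]
    return x


def fit_illuminant_weights(spd, basis):
    """Least-squares weights alpha_j such that spd ~ sum_j alpha_j * basis[j]."""
    gram = [[sum(p * q for p, q in zip(bj, bk)) for bk in basis] for bj in basis]
    rhs = [sum(p * q for p, q in zip(bj, spd)) for bj in basis]
    return solve_linear_system(gram, rhs)


wavelengths = list(range(400, 701, 10))  # sampled wavelengths in nm

# Hypothetical basis functions I_1, I_2, I_3 (illustrative shapes only).
basis = [
    [1.0 for _ in wavelengths],
    [(w - 550) / 150.0 for w in wavelengths],
    [((w - 550) / 150.0) ** 2 for w in wavelengths],
]

# Synthetic illumination built from known weights, then recovered by the fit.
true_alpha = [1.0, 0.5, -0.2]
spd = [sum(a * bj[i] for a, bj in zip(true_alpha, basis)) for i in range(len(wavelengths))]

alpha = fit_illuminant_weights(spd, basis)
print([round(a, 6) for a in alpha])
```

Because the whole spectrum is summarized by a handful of weights, an algorithm can in principle estimate those weights instead of the full spectral power distribution.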

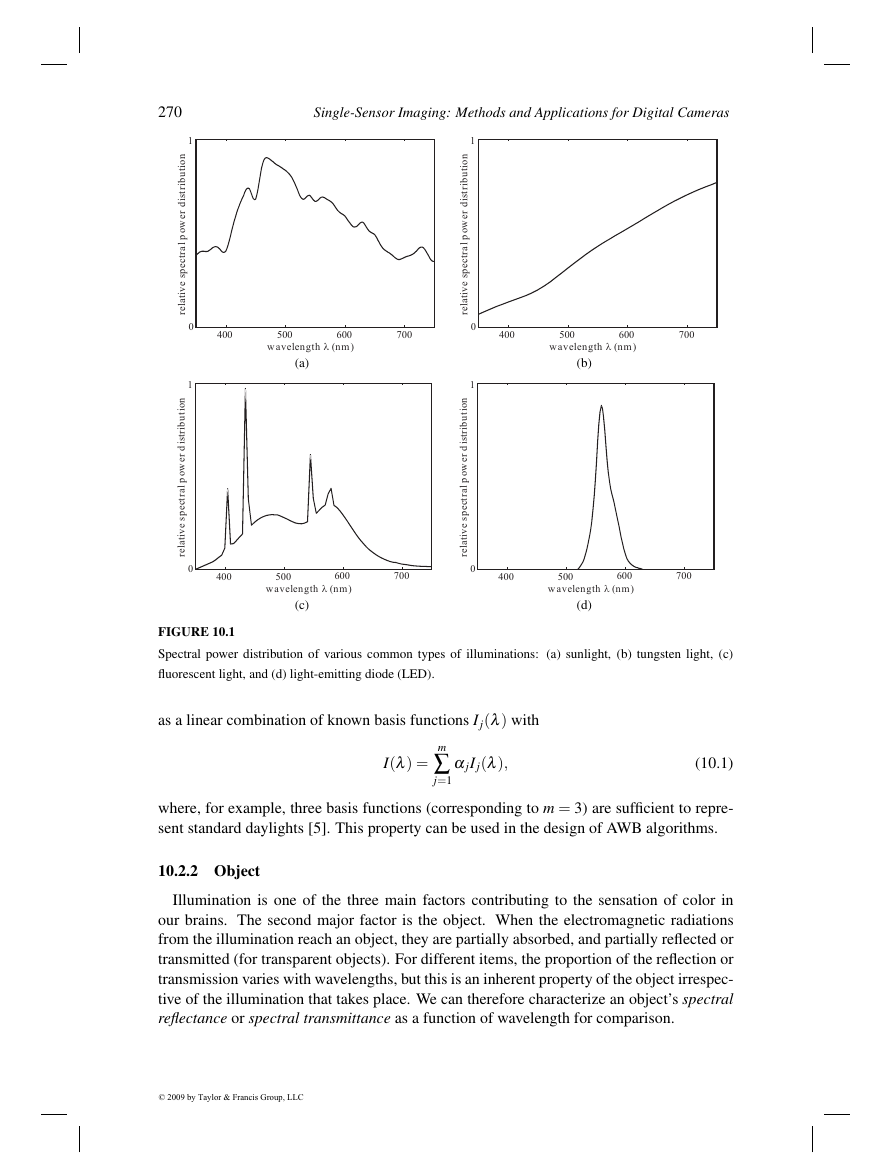

10.2.2 Object

Illumination is one of the three main factors contributing to the sensation of color in our brains. The second major factor is the object. When electromagnetic radiation from the illumination reaches an object, it is partially absorbed, and partially reflected or transmitted (for transparent objects). For different objects, the proportion reflected or transmitted varies with wavelength, but this is an inherent property of the object, irrespective of the illumination. We can therefore characterize an object by its spectral reflectance or spectral transmittance as a function of wavelength for comparison.

[Figure 10.1 plot axes, all four panels: wavelength λ (nm), ticks 400 to 700; relative spectral power distribution, 0 to 1.]

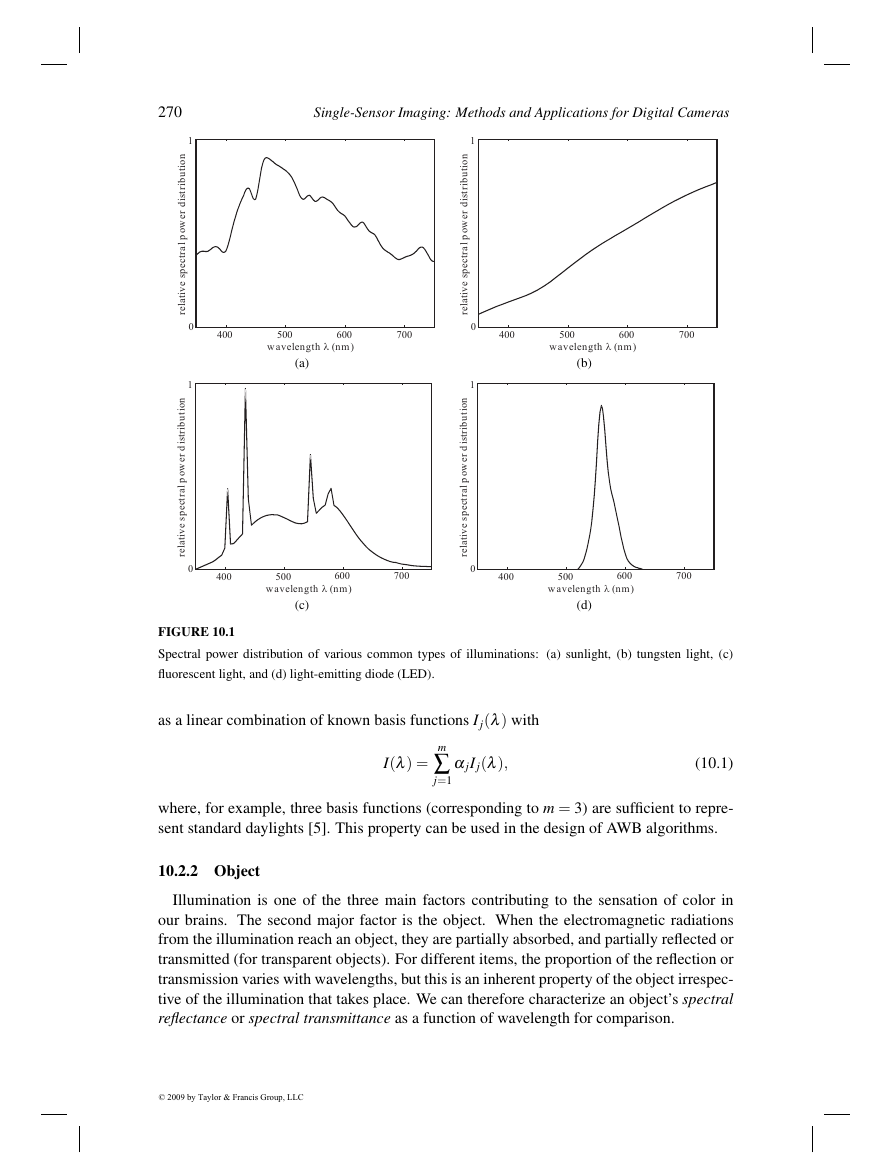

(a)

(c)

(b)

(d)

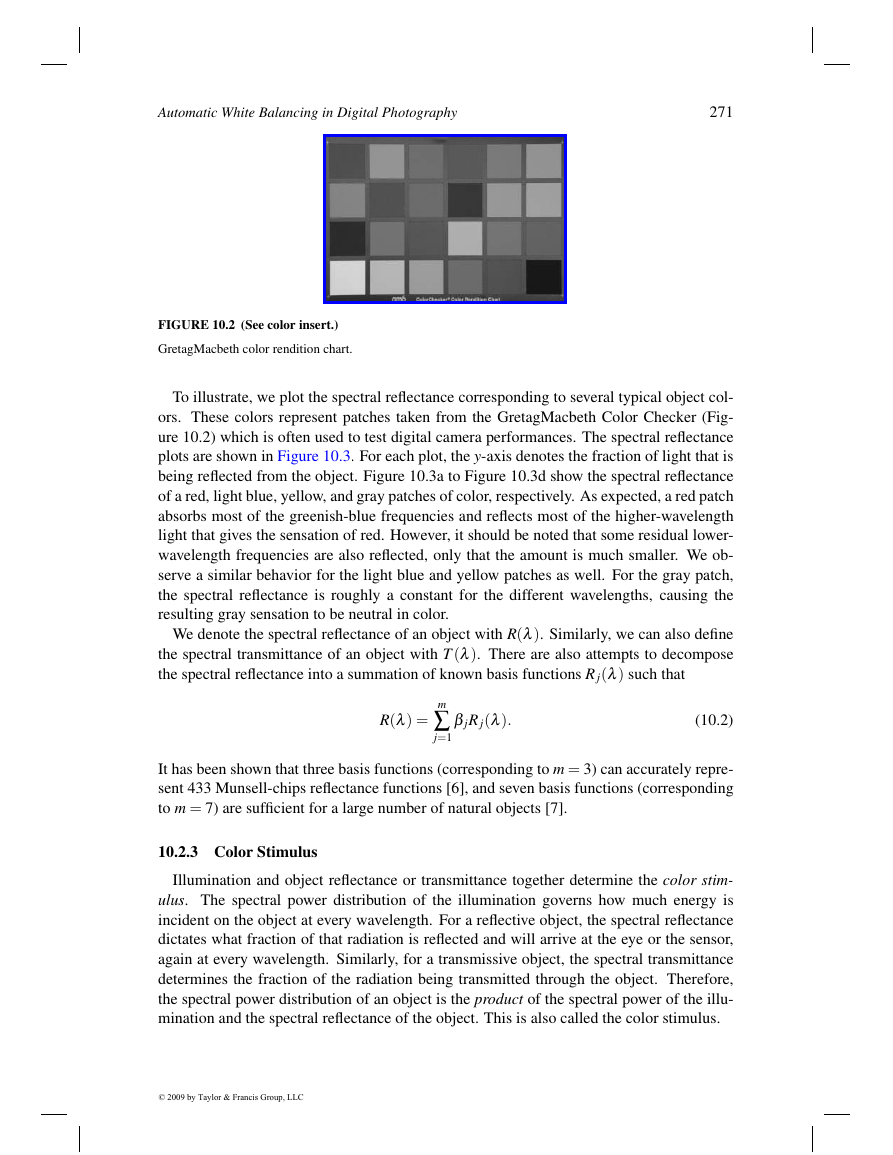

FIGURE 10.3

Spectral reflectance of various color patches: (a) red patch, (b) light blue patch, (c) yellow patch, (d) gray

patch.

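Mathematically, we denote the color stimulus with $S(\lambda)$. This is related to the illumination $I(\lambda)$ and object reflectance $R(\lambda)$ by [8]

$$S(\lambda) = I(\lambda) R(\lambda). \tag{10.3}$$

If we use the basis decomposition in Equations 10.1 and 10.2, this becomes

$$S(\lambda) = \left( \sum_{j=1}^{m} \alpha_j I_j(\lambda) \right) \left( \sum_{k=1}^{n} \beta_k R_k(\lambda) \right) \tag{10.4}$$

$$S(\lambda) = \sum_{j=1}^{m} \sum_{k=1}^{n} \alpha_j \beta_k I_j(\lambda) R_k(\lambda). \tag{10.5}$$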
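The equivalence between the pointwise product in Equation 10.3 and the double sum in Equation 10.5 can be checked numerically. In the sketch below, the basis functions and the weights $\alpha_j$ and $\beta_k$ are made-up illustrative values (with $m = n = 2$), not data from the chapter.

```python
def combine(weights, basis):
    """Evaluate sum_j weights[j] * basis[j] at every sampled wavelength."""
    return [sum(w * b[i] for w, b in zip(weights, basis)) for i in range(len(basis[0]))]


wavelengths = list(range(400, 701, 50))        # 7 samples, in nm
x = [(w - 550) / 150.0 for w in wavelengths]   # wavelength rescaled to [-1, 1]

# Hypothetical basis functions and weights.
illum_basis = [[1.0] * len(x), x]                   # I_1, I_2
refl_basis = [[1.0] * len(x), [v * v for v in x]]   # R_1, R_2
alpha = [1.0, 0.4]
beta = [0.5, 0.3]

illumination = combine(alpha, illum_basis)
reflectance = combine(beta, refl_basis)

# Equation 10.3: the stimulus is the pointwise product of the two spectra.
stimulus = [i * r for i, r in zip(illumination, reflectance)]

# Equation 10.5: the same stimulus written as a double sum over basis pairs.
stimulus_expanded = [
    sum(a * b * ij[i] * rk[i]
        for a, ij in zip(alpha, illum_basis)
        for b, rk in zip(beta, refl_basis))
    for i in range(len(x))
]

max_diff = max(abs(s - t) for s, t in zip(stimulus, stimulus_expanded))
print(max_diff)
```

The two forms agree up to floating-point rounding. The expanded form shows that the stimulus involves $m \times n$ products of weights, which is one reason the illumination and reflectance contributions are hard to separate from the stimulus alone.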

Although the above equations appear simple, they underscore an important fact in color science that we must emphasize here. The color stimulus depends on both the illumination and the object. Therefore, given any object, we can theoretically manipulate the illumination so that it produces any desired color stimulus, at least at wavelengths where the reflectance of the object is nonzero. We will see shortly that the color stimulus in turn contributes to our perception of color. One noteworthy corollary is that color is not an inherent feature of an object. A red object, for example, can be perceived as blue by a clever design of the illumination.

[Figure 10.3 plot axes, all four panels: wavelength λ (nm), ticks 400 to 700; spectral reflectance, 0 to 1.]

FIGURE 10.4
Anatomy of a human eye.

In other words, the illumination is as important as the object reflectance. This fact is very important for our camera system design. When we take a picture of the same object first under sunlight and then under fluorescent light, for example, the color stimuli vary significantly due to differences in illumination. We must adapt our camera to interpret the color stimuli differently; otherwise, the colors in the two photographs would look very different. This explains why AWB is so critical and challenging. However, before we can proceed to discuss AWB algorithms, we first need to explore how our eyes interpret the color stimuli. This brings us to the third major factor contributing to the color sensation: the physiology of our eyes.
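The dependence of the color stimulus on the illumination can be illustrated with a coarse three-sample sketch. All spectra below are hypothetical values chosen for illustration: a "red" reflectance, a flat sunlight-like illumination, and a tungsten-like illumination weighted towards long wavelengths. The second half shows, under the assumption that the reflectance is nonzero at every sample, how an illumination can be designed so that the same object yields a stimulus dominated by short wavelengths.

```python
wavelengths = [450, 550, 650]          # coarse blue, green, red samples (nm)

# Hypothetical spectra, sampled at the three wavelengths above.
reflectance = [0.10, 0.20, 0.80]       # an object that reflects mostly red
sunlight_like = [1.00, 1.00, 1.00]
tungsten_like = [0.30, 0.70, 1.00]


def color_stimulus(illumination, reflectance):
    """Pointwise product of illumination and reflectance, S = I * R."""
    return [i * r for i, r in zip(illumination, reflectance)]


def illumination_for(target_stimulus, reflectance):
    """Illumination that yields the target stimulus; needs nonzero reflectance."""
    if any(r <= 0.0 for r in reflectance):
        raise ValueError("reflectance must be positive at every sample")
    return [s / r for s, r in zip(target_stimulus, reflectance)]


# Same object, two illuminations: the stimuli differ.
under_sun = color_stimulus(sunlight_like, reflectance)
under_tungsten = color_stimulus(tungsten_like, reflectance)
print(under_sun, under_tungsten)

# Designing the illumination so that the red object sends a bluish stimulus.
bluish_target = [0.08, 0.02, 0.01]
designed = illumination_for(bluish_target, reflectance)
reproduced = color_stimulus(designed, reflectance)
print(designed, reproduced)
```

The designed illumination is strongly weighted towards short wavelengths, which compensates for the low blue reflectance of the object.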

10.2.4 Human Visual System

The color stimulus is a function of wavelength. If we had to reproduce the color stimulus faithfully, there would be a lot of information to store. A key fact from color science is that there is no need to reproduce the entire spectral distribution in color reproduction. As a matter of fact, we often need only three values to specify a color, despite the obvious loss of information and the possibility of ambiguity. The reason lies in our human visual system.

The basic anatomy of a human eye is shown in Figure 10.4. Visible light enters through the pupil and is refracted by the lens to create an image on the retina. The optic nerve transfers the image on the retina to the brain for interpretation. The retina is able to form an image because of tiny sensors called photoreceptors. A normal eye has two types of photoreceptors, cones and rods, which function very differently.

The rods are responsible for scotopic, or dim-light, vision. We have somewhere between 75 and 150 million rods in each of our eyes, and they are distributed all over the retina. Their chief role is to give us an overall picture of the field of view, rather than color vision. When we enter a rather dark room, we may still be able to see objects even though they tend to appear colorless. This is because under such illumination, only the rods are able to give us images.

[Figure 10.4 labels: optic nerve, blind spot, retina (photoreceptors), pupil, lens.]

(a)

(b)

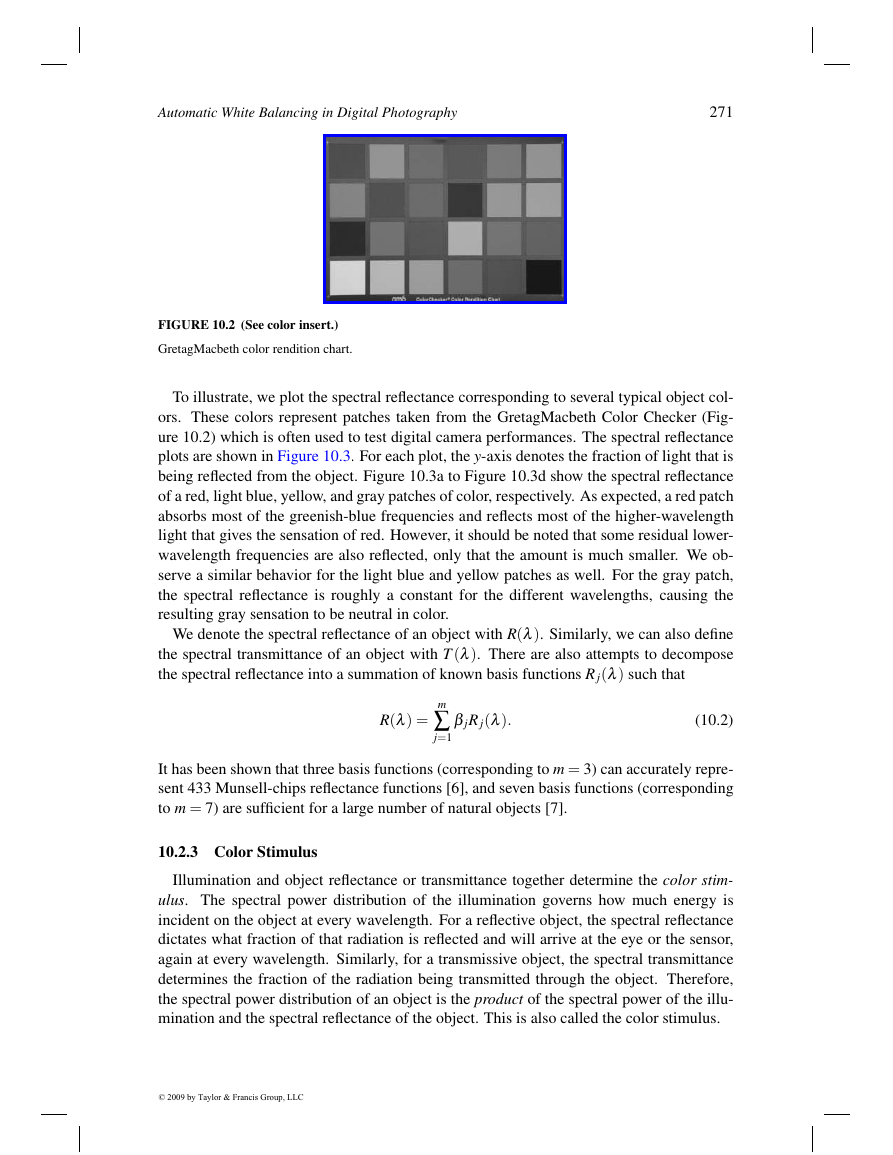

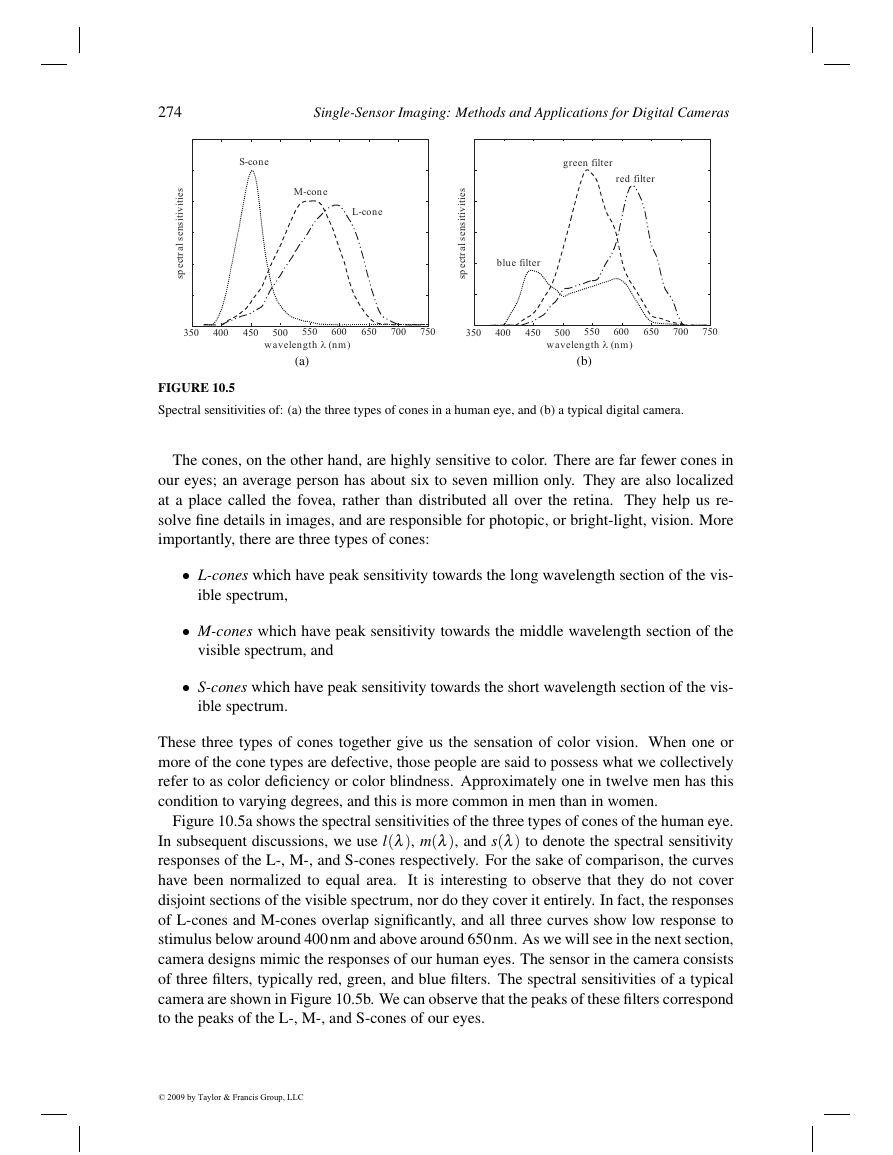

FIGURE 10.5

Spectral sensitivities of: (a) the three types of cones in a human eye, and (b) a typical digital camera.

The cones, on the other hand, are highly sensitive to color. There are far fewer cones in our eyes; an average person has only about six to seven million. They are also concentrated at a place called the fovea, rather than distributed all over the retina. They help us resolve fine details in images, and are responsible for photopic, or bright-light, vision. More importantly, there are three types of cones:

• L-cones, which have peak sensitivity towards the long wavelength section of the visible spectrum,
• M-cones, which have peak sensitivity towards the middle wavelength section of the visible spectrum, and
• S-cones, which have peak sensitivity towards the short wavelength section of the visible spectrum.

These three types of cones together give us the sensation of color vision. People in whom one or more of the cone types are defective are said to have what we collectively refer to as color deficiency or color blindness. Approximately one in twelve men has this condition to varying degrees, and it is more common in men than in women.

Figure 10.5a shows the spectral sensitivities of the three types of cones of the human eye. In subsequent discussions, we use $l(\lambda)$, $m(\lambda)$, and $s(\lambda)$ to denote the spectral sensitivity responses of the L-, M-, and S-cones, respectively. For the sake of comparison, the curves have been normalized to equal area. It is interesting to observe that they do not cover disjoint sections of the visible spectrum, nor do they cover it entirely. In fact, the responses of the L-cones and M-cones overlap significantly, and all three curves show a low response to stimuli below around 400 nm and above around 650 nm. As we will see in the next section, camera designs mimic the responses of our eyes. The sensor in the camera uses three types of filters, typically red, green, and blue. The spectral sensitivities of a typical camera are shown in Figure 10.5b. We can observe that the peaks of these filters correspond roughly to the peaks of the L-, M-, and S-cones of our eyes.
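The reduction of a full spectrum to three values, and the ambiguity it creates, can be sketched as follows. The sketch assumes the common linear model in which each response is the stimulus weighted by a sensitivity curve and summed over wavelength. The Gaussian curves stand in for $l(\lambda)$, $m(\lambda)$, and $s(\lambda)$, and their peaks and widths are hypothetical rather than measured. The second spectrum is built by adding a component that all three curves are blind to, so the two spectra differ but yield the same three responses.

```python
import math

wavelengths = list(range(400, 701, 10))  # sampled wavelengths in nm


def gaussian_curve(peak_nm, width_nm):
    """Bell-shaped sensitivity, scaled so its samples sum to one (equal area)."""
    raw = [math.exp(-0.5 * ((w - peak_nm) / width_nm) ** 2) for w in wavelengths]
    total = sum(raw)
    return [v / total for v in raw]


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


# Hypothetical stand-ins for the L-, M-, and S-cone sensitivities.
sensitivities = [gaussian_curve(570, 50), gaussian_curve(540, 45), gaussian_curve(445, 30)]


def responses(stimulus):
    """Three numbers: the stimulus weighted by each sensitivity and summed."""
    return [dot(stimulus, s) for s in sensitivities]


def remove_visible_part(vector):
    """Project out every component that the three sensitivities can detect."""
    orthonormal = []
    for s in sensitivities:  # Gram-Schmidt on the sensitivity curves
        r = list(s)
        for q in orthonormal:
            c = dot(r, q)
            r = [a - c * b for a, b in zip(r, q)]
        norm = math.sqrt(dot(r, r))
        orthonormal.append([a / norm for a in r])
    residual = list(vector)
    for q in orthonormal:
        c = dot(residual, q)
        residual = [a - c * b for a, b in zip(residual, q)]
    return residual


flat = [1.0] * len(wavelengths)                      # a spectrally flat stimulus
ripple = [math.sin(w / 15.0) for w in wavelengths]   # arbitrary perturbation
invisible = remove_visible_part(ripple)
scale = 0.5 / max(abs(v) for v in invisible)
metamer = [f + scale * v for f, v in zip(flat, invisible)]

print([round(r, 6) for r in responses(flat)])
print([round(r, 6) for r in responses(metamer)])
```

The two stimuli differ by up to 0.5 at some wavelengths, yet their three responses agree to within rounding error, which is the ambiguity referred to in the text.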

[Figure 10.5 plot axes, both panels: wavelength λ (nm), ticks 350 to 750; spectral sensitivities. Curve labels: (a) L-cone, M-cone, S-cone; (b) red filter, green filter, blue filter.]
