Gesture Recognition with Depth Images - A Simple Approach

Upal Mahbub¹, Hafiz Imtiaz¹, Tonmoy Roy¹, Md. Shafiur Rahman¹, and Md. Atiqur Rahman Ahad²

¹ Department of Electrical and Electronic Engineering, Bangladesh University of Engineering and Technology, Dhaka-1000, Bangladesh
E-mail: omeecd@eee.buet.ac.bd; hafiz.imtiaz@live.com; tonmoyroy@live.com; shafeey@live.com

² Department of Applied Physics, Electronics & Communication Engineering, University of Dhaka, Bangladesh
Email: atiqahad@univdhaka.edu

Abstract: A novel approach for gesture recognition is developed in this paper based on template matching from motion depth images. The proposed method uses a single example of an action as a query to find similar matches among a large number of test samples. No prior knowledge about the actions, no foreground/background segmentation, and no motion estimation or tracking is required. A novel approach to separate different gestures from a single video is also introduced. The proposed method is based on the computation of space-time descriptors from the query video, which measure the likeness of a gesture to those in a lexicon. The descriptor extraction method includes the standard deviation of the depth images of a gesture. Moreover, the two-dimensional discrete Fourier transform is employed to reduce the effect of camera shift. Classification is done based on the correlation coefficient of the image templates, and an intelligent classifier is proposed to ensure better recognition accuracy. Extensive experimentation is done on a vast and very complicated dataset to establish the effectiveness of the proposed method.

Keywords: gesture recognition, depth image, standard deviation, 2D Fourier transform.

1. INTRODUCTION

Hand gesture recognition and analysis is very important in computer vision and man-machine interaction for various applications [1], [2]. Wu and Huang [3] presented a survey on gesture recognition, whereas action recognition issues are analyzed in [1]. The application arenas are gaming, computer interfaces, robotics, video surveillance, body posture analysis, action recognition, behavior analysis, sign language interpretation for the deaf, facial and emotion understanding, etc. [4], [5], [6].

Several methods have been proposed by a good number of researchers in the field of action and gesture recognition [4], which are already implemented in practical applications. The basis of representation of the action, which usually differs from method to method, is commonly related to appearance, shape, spatio-temporal orientation, optical flow, interest points or volume. For example, in the spatio-temporal template based approaches, an image sequence is used to prepare a motion energy image (MEI) and a motion history image (MHI), which indicate the regions of motion and also preserve the time information of the motion [5], [2]. An extension of this approach to three dimensions is proposed in [7]. Recently, some researchers explored optical flow based motion detection and localization methods and frequency domain representation of gestures [8], [9]. Among other methods, the bag-of-words method has been used successfully for object categorization in [10]. A method for segmenting a periodic action into cycles and thereby classifying the action is proposed in [11].

Even though it is very important to understand various hand gestures, it is extremely difficult to do so due to various challenging aspects: dimensionality and varieties of gestures, partial occlusion, boundary selection, head movement, background, cultural variations in sign languages, etc. Most of the existing works on gesture recognition and analysis cover only a few classes or types of gestures, and these are usually neither continuous nor in random order. Existing methods can be classified into several categories, such as view/appearance-based, model-based, space-time volume-based, or direct motion-based methods [1]. Template-matching approaches [2], [12] are simpler and faster algorithms for motion analysis or recognition that can represent an entire video sequence in a single image format. Recently, approaches related to Spatio-Temporal Interest feature Points (STIP) have become prominent for action representation [13]. However, due to various limitations and constraints, no single approach seems to be enough for wider applications in action understanding and recognition. Hence, gesture recognition under these challenging aspects still remains in its infancy.

Moreover, though a number of the available gesture recognition methods have achieved high accuracy on different datasets, most of them depend on a good amount of input to train the system and do not perform very well if the amount of training data is limited. In [14], a method for action recognition is proposed that uses a patch based motion descriptor and matching scheme with a single clip as the template.

The Kinect sensor with a mounted depth sensor encouraged researchers to develop algorithms that take advantage of the depth information. Among such works, [15] proposed a method for hand detection by integrating RGB and depth data. It finds possible hand pixels by skin color detection, based on the fact that the neck and other skin colored parts of the body will be behind the hand in most cases.

SICE Annual Conference 2012, August 20-23, 2012, Akita University, Akita, Japan. PR0001/12/0000-¥400 ©2012 SICE

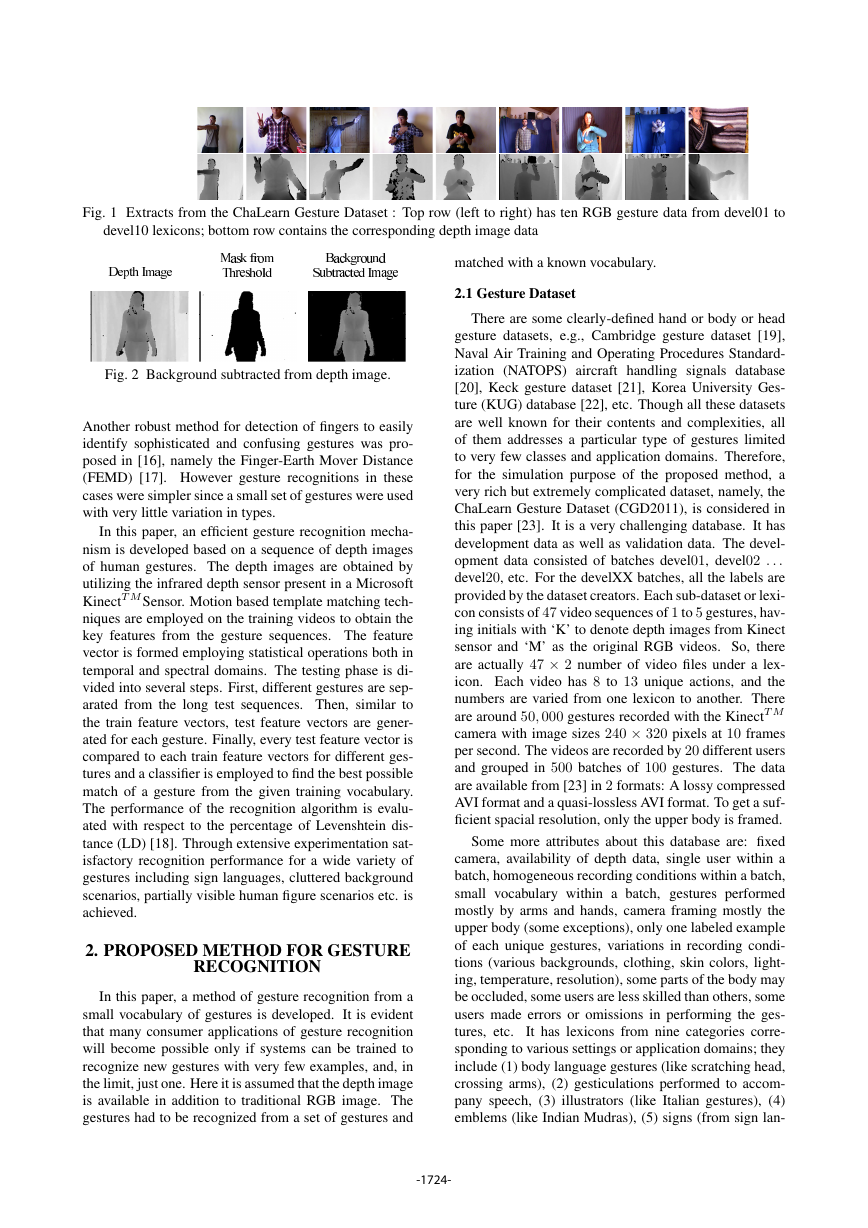

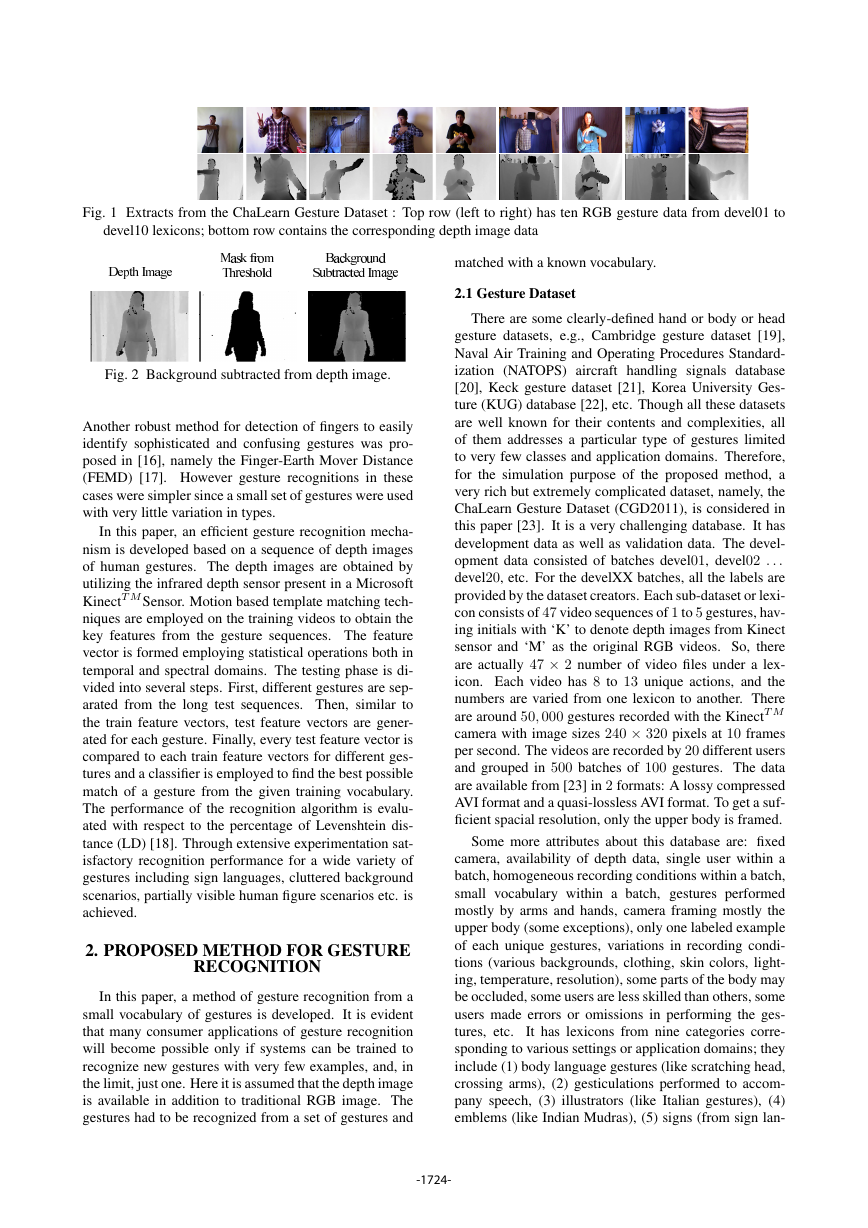

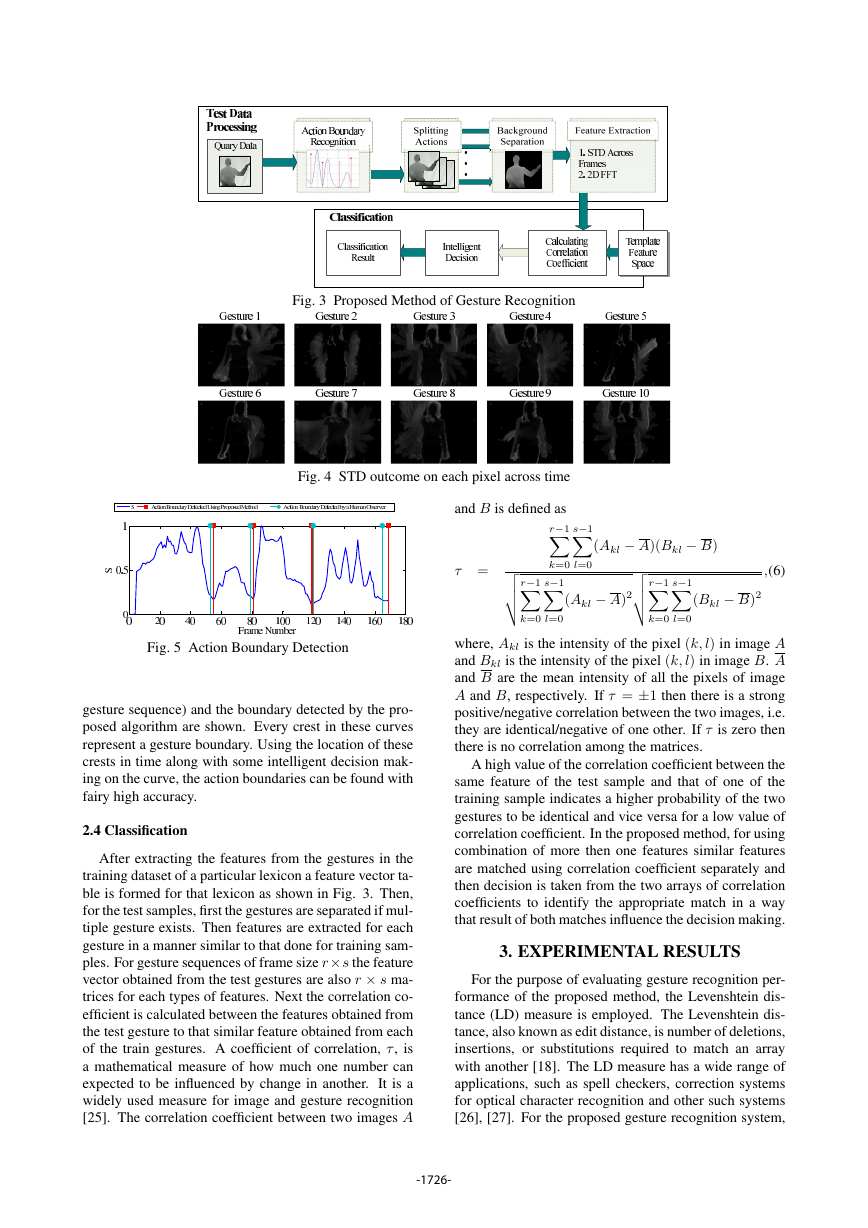

Fig.1ExtractsfromtheChaLearnGestureDataset:Toprow(lefttoright)hastenRGBgesturedatafromdevel01todevel10lexicons;bottomrowcontainsthecorrespondingdepthimagedataFig.2Backgroundsubtractedfromdepthimage.Anotherrobustmethodfordetectionoffingerstoeasilyidentifysophisticatedandconfusinggestureswaspro-posedin[16],namelytheFinger-EarthMoverDistance(FEMD)[17].Howevergesturerecognitionsinthesecasesweresimplersinceasmallsetofgestureswereusedwithverylittlevariationintypes.Inthispaper,anefficientgesturerecognitionmecha-nismisdevelopedbasedonasequenceofdepthimagesofhumangestures.ThedepthimagesareobtainedbyutilizingtheinfrareddepthsensorpresentinaMicrosoftKinectTMSensor.Motionbasedtemplatematchingtech-niquesareemployedonthetrainingvideostoobtainthekeyfeaturesfromthegesturesequences.Thefeaturevectorisformedemployingstatisticaloperationsbothintemporalandspectraldomains.Thetestingphaseisdi-videdintoseveralsteps.First,differentgesturesaresep-aratedfromthelongtestsequences.Then,similartothetrainfeaturevectors,testfeaturevectorsaregener-atedforeachgesture.Finally,everytestfeaturevectoriscomparedtoeachtrainfeaturevectorsfordifferentges-turesandaclassifierisemployedtofindthebestpossiblematchofagesturefromthegiventrainingvocabulary.Theperformanceoftherecognitionalgorithmisevalu-atedwithrespecttothepercentageofLevenshteindis-tance(LD)[18].Throughextensiveexperimentationsat-isfactoryrecognitionperformanceforawidevarietyofgesturesincludingsignlanguages,clutteredbackgroundscenarios,partiallyvisiblehumanfigurescenariosetc.isachieved.2.PROPOSEDMETHODFORGESTURERECOGNITIONInthispaper,amethodofgesturerecognitionfromasmallvocabularyofgesturesisdeveloped.Itisevidentthatmanyconsumerapplicationsofgesturerecognitionwillbecomepossibleonlyifsystemscanbetrainedtorecognizenewgestureswithveryfewexamples,and,inthelimit,justone.HereitisassumedthatthedepthimageisavailableinadditiontotraditionalRGBimage.Thegestureshadtoberecognizedfromasetofgesturesandmatchedwithaknownvocabulary.2.1GestureDatasetTherearesomeclearl
y-definedhandorbodyorheadgesturedatasets,e.g.,Cambridgegesturedataset[19],NavalAirTrainingandOperatingProceduresStandard-ization(NATOPS)aircrafthandlingsignalsdatabase[20],Keckgesturedataset[21],KoreaUniversityGes-ture(KUG)database[22],etc.Thoughallthesedatasetsarewellknownfortheircontentsandcomplexities,allofthemaddressesaparticulartypeofgestureslimitedtoveryfewclassesandapplicationdomains.Therefore,forthesimulationpurposeoftheproposedmethod,averyrichbutextremelycomplicateddataset,namely,theChaLearnGestureDataset(CGD2011),isconsideredinthispaper[23].Itisaverychallengingdatabase.Ithasdevelopmentdataaswellasvalidationdata.Thedevel-opmentdataconsistedofbatchesdevel01,devel02:::devel20,etc.ForthedevelXXbatches,allthelabelsareprovidedbythedatasetcreators.Eachsub-datasetorlexi-conconsistsof47videosequencesof1to5gestures,hav-inginitialswith‘K’todenotedepthimagesfromKinectsensorand‘M’astheoriginalRGBvideos.So,thereareactually472numberofvideofilesunderalex-icon.Eachvideohas8to13uniqueactions,andthenumbersarevariedfromonelexicontoanother.Therearearound50;000gesturesrecordedwiththeKinectTMcamerawithimagesizes240320pixelsat10framespersecond.Thevideosarerecordedby20differentusersandgroupedin500batchesof100gestures.Thedataareavailablefrom[23]in2formats:AlossycompressedAVIformatandaquasi-losslessAVIformat.Togetasuf-ficientspacialresolution,onlytheupperbodyisframed.Somemoreattributesaboutthisdatabaseare:fixedcamera,availabilityofdepthdata,singleuserwithinabatch,homogeneousrecordingconditionswithinabatch,smallvocabularywithinabatch,gesturesperformedmostlybyarmsandhands,cameraframingmostlytheupperbody(someexceptions),onlyonelabeledexampleofeachuniquegestures,variationsinrecordingcondi-tions(variousbackgrounds,clothing,skincolors,light-ing,temperature,resolution),somepartsofthebodymaybeoccluded,someusersarelessskilledthanothers,someusersmadeerrorsoromissionsinperformingtheges-tures,etc.Ithaslexiconsfromninecategoriescorre-spondingtovarioussettingsorapplicationdomains;theyinclude(1)bo
dylanguagegestures(likescratchinghead,crossingarms),(2)gesticulationsperformedtoaccom-panyspeech,(3)illustrators(likeItaliangestures),(4)emblems(likeIndianMudras),(5)signs(fromsignlan-�

-1725-

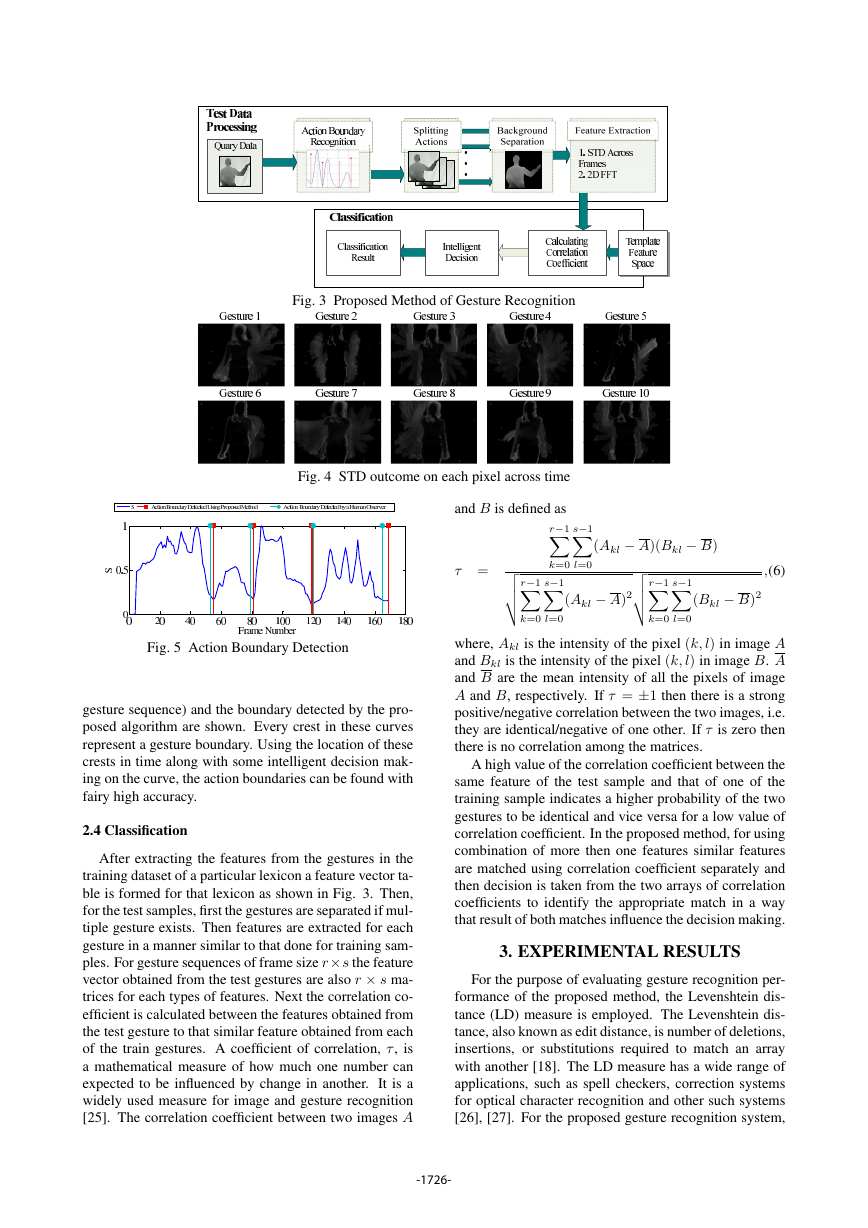

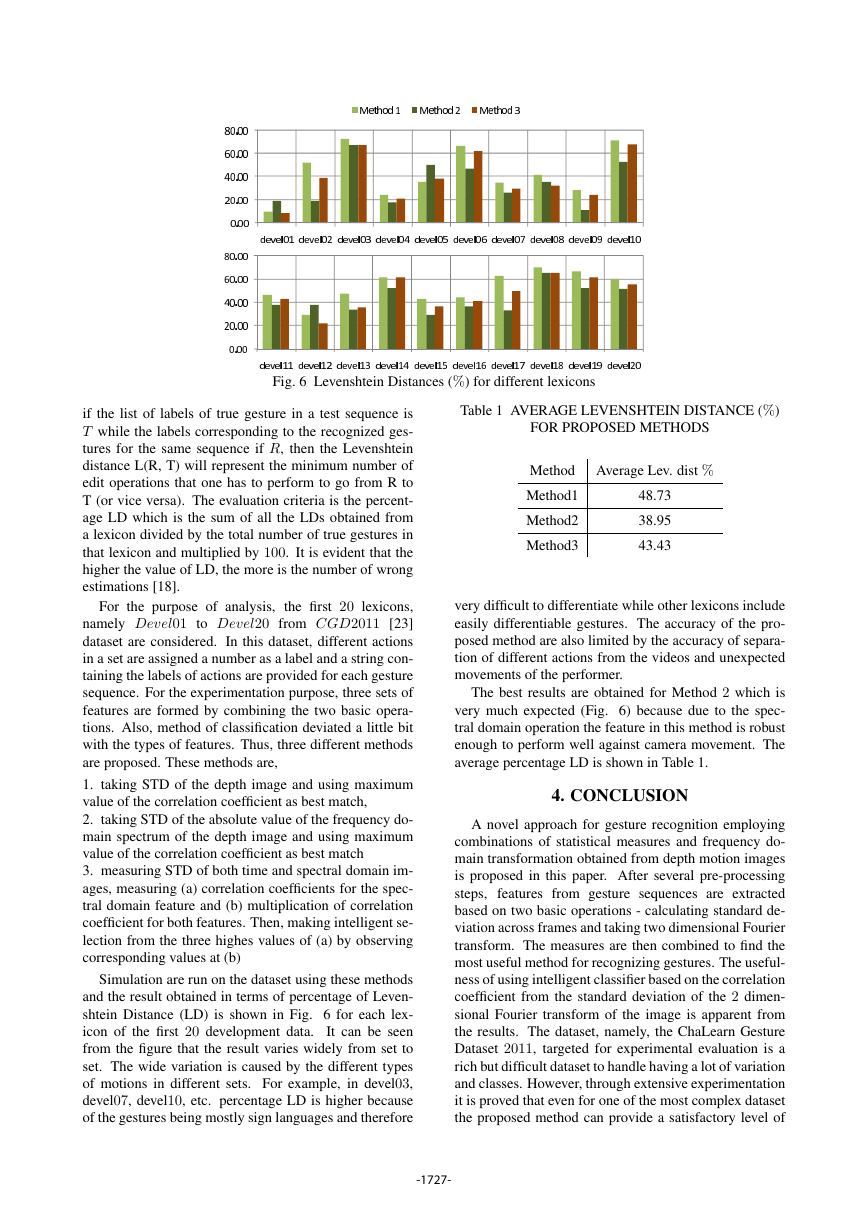

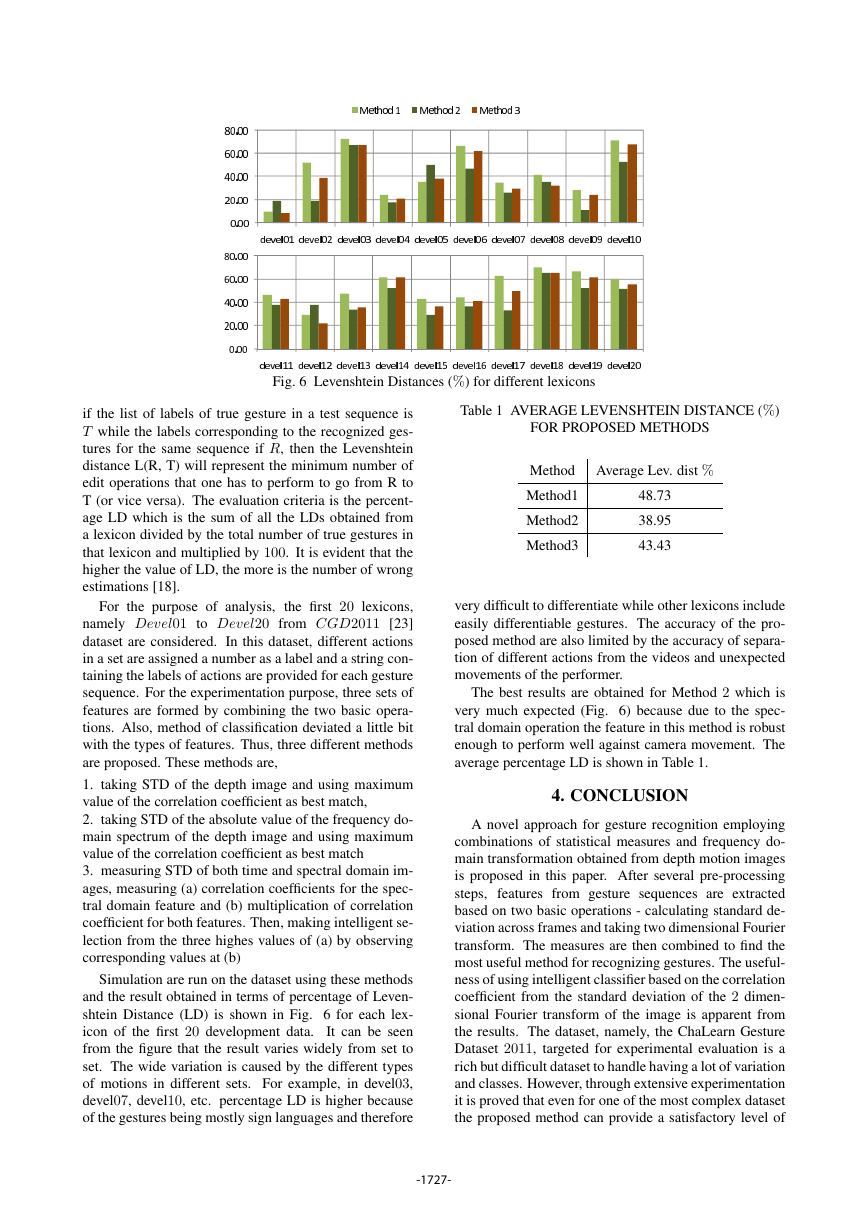

guagesforthedeaf),(6)signals(likerefereesignals,div-ingsignals,ormarshalingsignalstoguidemachineryorvehicle),(7)actions(likedrinkingorwriting),(8)pan-tomimes(gesturesmadetomimicactions),and(9)dancepostures.SampleframesofbothRGBanddepthdataofthefirst10lexiconsofthedevelopmentdataareshowninFig.1.2.2FeatureExtractionandTrainingThedepthdataprovidedinthedatasetareinRGBfor-matandthereforeneedtobeconvertedtograyscalebe-foreprocessing.Thegrayscaledepthdataisatruerepre-sentationofobjectdistancefromthecamerabyvaryingintensityofpixelsfromdarktobrightforneartofarawayobjects,respectively.NextinordertoseparatethepersonandthebackgroundofthegrayscaleimageOtsu’smethod[24]isemployed.Inthismethodathresholdischosenbasedonminimizationoftheintra-classvarianceoftheblackandwhitepixelsformakingablackandwhitebi-naryimage.Utilizingthebinaryimagethebackgroundfromthedepthimageisfilteredoutandtherebythehu-mansubjectisseparatedasshowninFig.2.Intheproposedmethodsofgesturerecognitiontwotypesofoperationsareperformedforobtainingfeaturevectorsfromtrainingsamples:a.calculatingstandarddeviation(STD)oneachpixelvaluesacrossalltheframesandb.employingtwodimensionalFouriertransform(2D-FFT)oneachframe.Theprocessoffeatureextrac-tion,trainingandclassificationareshowninbriefinFig.3.Forthen-thgestureatlexiconLconsistingofDframes,thestandarddeviationD2n(x;y)ofpixel(x;y)acrosstheframesisgivenbyD2n(x;y)=∑(Ixy(d)Ixy)2D:(1)HereIxy(d)isthepixelvalueofthelocation(x;y)oftheframed.Wherex=1;2;3:::r,y=1;2;3:::sandd=1;2;3:::D.randscorrespondstothemaximumpixelvaluealongxandyaxis,respectively.Ixyistheav-erageofallIxy(d)valuesfromframes1tod.Therefore,forthewholeframe,thematrixobtainedisdefinedasD∆Ln=D2n(1;1)D2n(1;2):::D2n(1;s)D2n(2;1)D2n(2;2):::D2n(2;s)............D2n(r;1)D2n(r;2):::D2n(r;s)(2)Thesilhouettesobtainedbyperformingstandardde-viationacrossframesonthetrainingsamplesofdevel-opmentdatalexicon1oftheChaLearngesturedatasetisshowninFig.4.Itisevidentfromthefigurethatperform-ingstandarddeviationacrossframesenhancestheinfor-m
ationofmovementacrosstheframeswhilesuppressesthestaticparts.Therefore,informationaboutthepathofmotionflowandtypeofthegestureiseasilyobservable.Anotherfeaturecanbeobtainedfromthegesturesam-plesbytakingtheabsolutevalueofthetwo-dimensionalDiscreteFourierTransform(2DDFT)usingfastFourierTransform(FFT)oneachbackgroundsubtracteddepthimageframeandthencalculatingthestandarddeviationofeachpointatthespectraldomainacrossframes.Foranimageofsizersthe2DdiscreteFouriertransformisgivenbyFkl(d)=1rsr1∑x=0s1∑y=0Ixy(d)ej2(kxr+lys);(3)where,theexponentialtermisthebasisfunctioncorre-spondingtoeachpointFkl(d)intheFourierspaceandIxy(d)isthevalueofthe(x;y)pixeloftheimagegiveninspatialdomainforthed-thframe[25].PerformingFouriertransformpriortotakingSTDhascertainadvan-tages.Whenthedepthvaluesofthepersonaretakentothefrequencydomain,thepositionofthepersonbecomesirrelevant,i.e.anyslightmovementofthecameraverti-callyorhorizontallywouldbenullifiedinthefrequencyresponse.Forexample,ifthecameraissteadyinthetrainingsamplesbutmovesalittlebitinthetestsamples,theSTDontemporalvaluesofthetestdatawouldbedif-ficulttoprojectonthatofthetrainingdataforfindingamatch.However,spectraldomaintransformationofthedatabeforetakingstandarddeviationacrossframeswillsuppressthetimeresolutionandtherebyreducetheeffectofcameramovement[4].2.3FindingActionBoundaryWhiletesting,thetestdatasequencescontainingoneoneormoregesturesareneededtobeseparatedfordif-ferentgestures.ThegesturesprovidedintheChaLearngesturedatasetareseparatedbyreturningtoarestingpo-sition[23].Thus,subtractingtheinitialframefromeachframeofthemoviecanbeagoodmethodforfindingthegestureboundary.ForarsdepthimageifIxy(d)isthepixelvalue(depth)ofposition(x;y)atthed-thframe,thenthefollowingformulacanbeusedtogenerateavari-ablePdwhichgivesanamplitudeofchangefromtheref-erenceframep1=(I11(d)I11(1))2++(I1s(t)I1s(1))2p2=(I21(d)I21(1))2++(I2s(t)I2s(1))2p3=(I31(d)I31(1))2++(I3s(t)I3s(1))2::::::::::::::::::pr=(Ir1(d)Ir1(1))2++(Irs(t)Irs(1))2(4)Thus,thesumsquaredifferenceofthecurren
tframefromthereferenceframecanbeobtainedbyPd=r∑k=0pk(5)ThevalueofPdisthennormalized.ThesmoothedgraphofnormalizedPdvs.disshowninFig.5.aand5.bfortwodifferentdevelopmentdatasamples.Inthefigure,boththeactualboundary(determinedbyobservingthe�

Fig.3ProposedMethodofGestureRecognitionFig.4STDoutcomeoneachpixelacrosstimeFig.5ActionBoundaryDetectiongesturesequence)andtheboundarydetectedbythepro-posedalgorithmareshown.Everycrestinthesecurvesrepresentagestureboundary.Usingthelocationofthesecrestsintimealongwithsomeintelligentdecisionmak-ingonthecurve,theactionboundariescanbefoundwithfairyhighaccuracy.2.4ClassificationAfterextractingthefeaturesfromthegesturesinthetrainingdatasetofaparticularlexiconafeaturevectorta-bleisformedforthatlexiconasshowninFig.3.Then,forthetestsamples,firstthegesturesareseparatedifmul-tiplegestureexists.Thenfeaturesareextractedforeachgestureinamannersimilartothatdonefortrainingsam-ples.Forgesturesequencesofframesizersthefeaturevectorobtainedfromthetestgesturesarealsorsma-tricesforeachtypesoffeatures.Nextthecorrelationco-efficientiscalculatedbetweenthefeaturesobtainedfromthetestgesturetothatsimilarfeatureobtainedfromeachofthetraingestures.Acoefficientofcorrelation,,isamathematicalmeasureofhowmuchonenumbercanexpectedtobeinfluencedbychangeinanother.Itisawidelyusedmeasureforimageandgesturerecognition[25].ThecorrelationcoefficientbetweentwoimagesAandBisdefinedas=r1∑k=0s1∑l=0(AklA)(BklB)vuutr1∑k=0s1∑l=0(AklA)2vuutr1∑k=0s1∑l=0(BklB)2;(6)where,Aklistheintensityofthepixel(k;l)inimageAandBklistheintensityofthepixel(k;l)inimageB.AandBarethemeanintensityofallthepixelsofimageAandB,respectively.If=1thenthereisastrongpositive/negativecorrelationbetweenthetwoimages,i.e.theyareidentical/negativeofoneother.Ifiszerothenthereisnocorrelationamongthematrices.Ahighvalueofthecorrelationcoefficientbetweenthesamefeatureofthetestsampleandthatofoneofthetrainingsampleindicatesahigherprobabilityofthetwogesturestobeidenticalandviceversaforalowvalueofcorrelationcoefficient.Intheproposedmethod,forusingcombinationofmorethenonefeaturessimilarfeaturesarematchedusingcorrelationcoefficientseparatelyandthendecisionistakenfromthetwoarraysofcorrelationcoefficientstoidentifytheappropriatematchinawaythatresultofbothmatchesinfluen
cethedecisionmaking.3.EXPERIMENTALRESULTSForthepurposeofevaluatinggesturerecognitionper-formanceoftheproposedmethod,theLevenshteindis-tance(LD)measureisemployed.TheLevenshteindis-tance,alsoknownaseditdistance,isnumberofdeletions,insertions,orsubstitutionsrequiredtomatchanarraywithanother[18].TheLDmeasurehasawiderangeofapplications,suchasspellcheckers,correctionsystemsforopticalcharacterrecognitionandothersuchsystems[26],[27].Fortheproposedgesturerecognitionsystem,�

-1727-

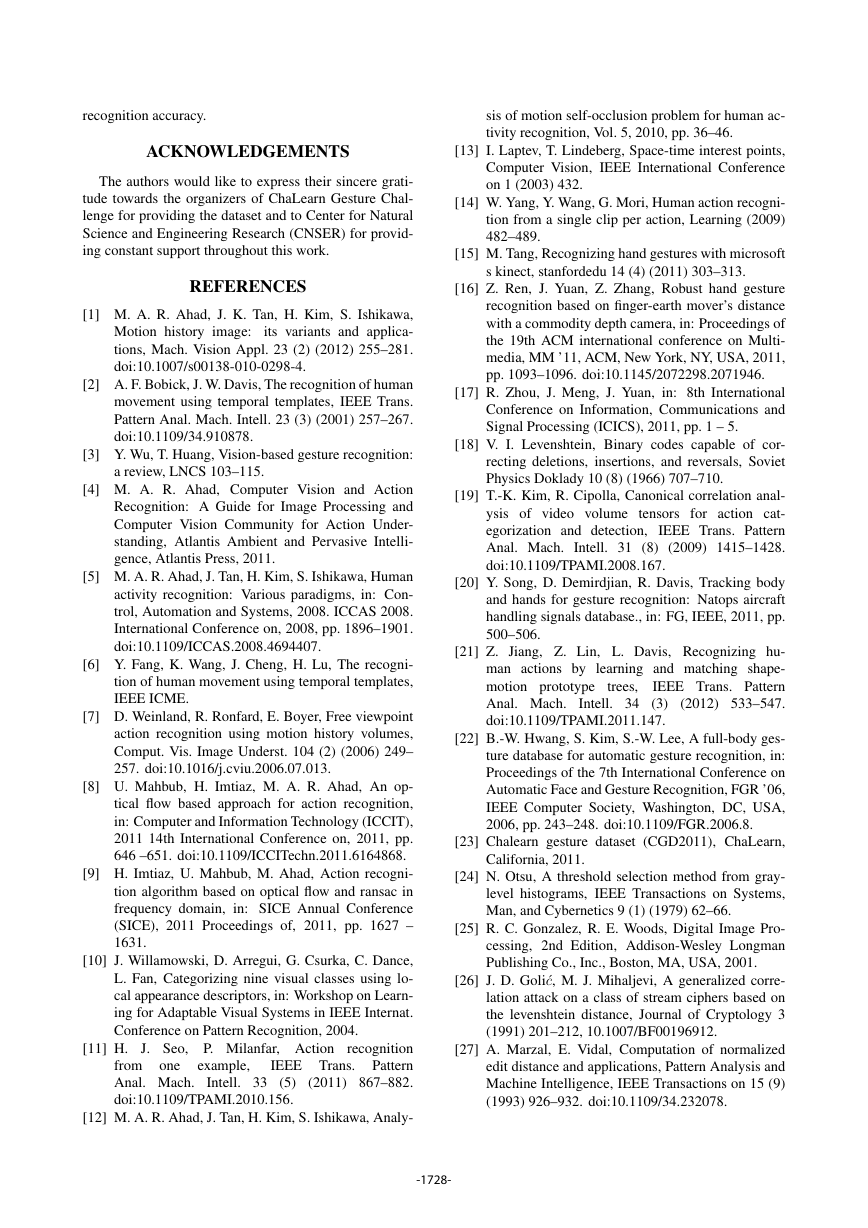

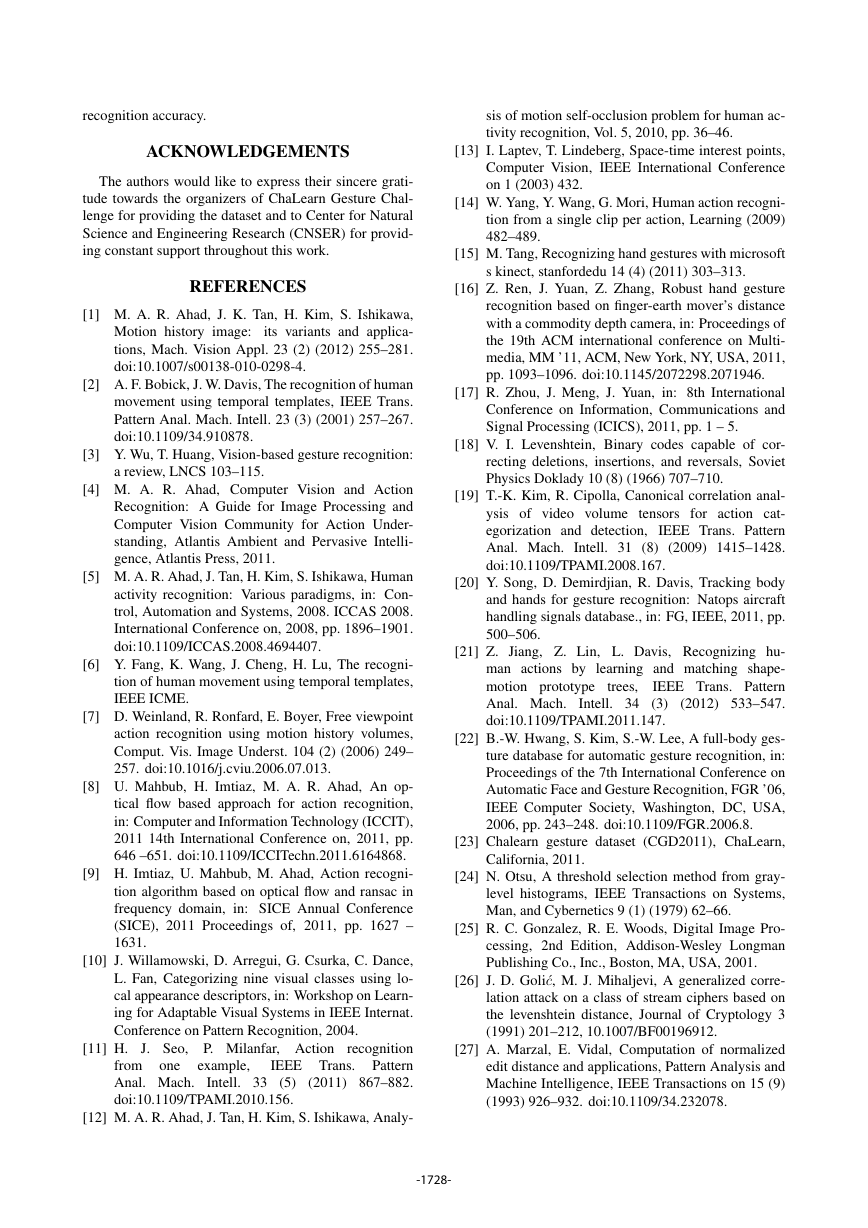

Fig.6LevenshteinDistances(%)fordifferentlexiconsifthelistoflabelsoftruegestureinatestsequenceisTwhilethelabelscorrespondingtotherecognizedges-turesforthesamesequenceifR,thentheLevenshteindistanceL(R,T)willrepresenttheminimumnumberofeditoperationsthatonehastoperformtogofromRtoT(orviceversa).Theevaluationcriteriaisthepercent-ageLDwhichisthesumofalltheLDsobtainedfromalexicondividedbythetotalnumberoftruegesturesinthatlexiconandmultipliedby100.ItisevidentthatthehigherthevalueofLD,themoreisthenumberofwrongestimations[18].Forthepurposeofanalysis,thefirst20lexicons,namelyDevel01toDevel20fromCGD2011[23]datasetareconsidered.Inthisdataset,differentactionsinasetareassignedanumberasalabelandastringcon-tainingthelabelsofactionsareprovidedforeachgesturesequence.Fortheexperimentationpurpose,threesetsoffeaturesareformedbycombiningthetwobasicopera-tions.Also,methodofclassificationdeviatedalittlebitwiththetypesoffeatures.Thus,threedifferentmethodsareproposed.Thesemethodsare,1.takingSTDofthedepthimageandusingmaximumvalueofthecorrelationcoefficientasbestmatch,2.takingSTDoftheabsolutevalueofthefrequencydo-mainspectrumofthedepthimageandusingmaximumvalueofthecorrelationcoefficientasbestmatch3.measuringSTDofbothtimeandspectraldomainim-ages,measuring(a)correlationcoefficientsforthespec-traldomainfeatureand(b)multiplicationofcorrelationcoefficientforbothfeatures.Then,makingintelligentse-lectionfromthethreehighesvaluesof(a)byobservingcorrespondingvaluesat(b)SimulationarerunonthedatasetusingthesemethodsandtheresultobtainedintermsofpercentageofLeven-shteinDistance(LD)isshowninFig.6foreachlex-iconofthefirst20developmentdata.Itcanbeseenfromthefigurethattheresultvarieswidelyfromsettoset.Thewidevariationiscausedbythedifferenttypesofmotionsindifferentsets.Forexample,indevel03,devel07,devel10,etc.percentageLDishigherbecauseofthegesturesbeingmostlysignlanguagesandthereforeTable1AVERAGELEVENSHTEINDISTANCE(%)FORPROPOSEDMETHODSMethodAverageLev.dist%Method148.73Method238.95Method343.43verydifficulttodiffer
entiatewhileotherlexiconsincludeeasilydifferentiablegestures.Theaccuracyofthepro-posedmethodarealsolimitedbytheaccuracyofsepara-tionofdifferentactionsfromthevideosandunexpectedmovementsoftheperformer.ThebestresultsareobtainedforMethod2whichisverymuchexpected(Fig.6)becauseduetothespec-traldomainoperationthefeatureinthismethodisrobustenoughtoperformwellagainstcameramovement.TheaveragepercentageLDisshowninTable1.4.CONCLUSIONAnovelapproachforgesturerecognitionemployingcombinationsofstatisticalmeasuresandfrequencydo-maintransformationobtainedfromdepthmotionimagesisproposedinthispaper.Afterseveralpre-processingsteps,featuresfromgesturesequencesareextractedbasedontwobasicoperations-calculatingstandardde-viationacrossframesandtakingtwodimensionalFouriertransform.Themeasuresarethencombinedtofindthemostusefulmethodforrecognizinggestures.Theuseful-nessofusingintelligentclassifierbasedonthecorrelationcoefficientfromthestandarddeviationofthe2dimen-sionalFouriertransformoftheimageisapparentfromtheresults.Thedataset,namely,theChaLearnGestureDataset2011,targetedforexperimentalevaluationisarichbutdifficultdatasettohandlehavingalotofvariationandclasses.However,throughextensiveexperimentationitisprovedthatevenforoneofthemostcomplexdatasettheproposedmethodcanprovideasatisfactorylevelof�

recognition accuracy.

ACKNOWLEDGEMENTS

The authors would like to express their sincere gratitude towards the organizers of the ChaLearn Gesture Challenge for providing the dataset and to the Center for Natural Science and Engineering Research (CNSER) for providing constant support throughout this work.

REFERENCES

[1] M. A. R. Ahad, J. K. Tan, H. Kim, S. Ishikawa, Motion history image: its variants and applications, Mach. Vision Appl. 23 (2) (2012) 255–281. doi:10.1007/s00138-010-0298-4.
[2] A. F. Bobick, J. W. Davis, The recognition of human movement using temporal templates, IEEE Trans. Pattern Anal. Mach. Intell. 23 (3) (2001) 257–267. doi:10.1109/34.910878.
[3] Y. Wu, T. Huang, Vision-based gesture recognition: a review, LNCS 103–115.
[4] M. A. R. Ahad, Computer Vision and Action Recognition: A Guide for Image Processing and Computer Vision Community for Action Understanding, Atlantis Ambient and Pervasive Intelligence, Atlantis Press, 2011.
[5] M. A. R. Ahad, J. Tan, H. Kim, S. Ishikawa, Human activity recognition: Various paradigms, in: Control, Automation and Systems, 2008. ICCAS 2008. International Conference on, 2008, pp. 1896–1901. doi:10.1109/ICCAS.2008.4694407.
[6] Y. Fang, K. Wang, J. Cheng, H. Lu, The recognition of human movement using temporal templates, IEEE ICME.
[7] D. Weinland, R. Ronfard, E. Boyer, Free viewpoint action recognition using motion history volumes, Comput. Vis. Image Underst. 104 (2) (2006) 249–257. doi:10.1016/j.cviu.2006.07.013.
[8] U. Mahbub, H. Imtiaz, M. A. R. Ahad, An optical flow based approach for action recognition, in: Computer and Information Technology (ICCIT), 2011 14th International Conference on, 2011, pp. 646–651. doi:10.1109/ICCITechn.2011.6164868.
[9] H. Imtiaz, U. Mahbub, M. Ahad, Action recognition algorithm based on optical flow and RANSAC in frequency domain, in: SICE Annual Conference (SICE), 2011 Proceedings of, 2011, pp. 1627–1631.
[10] J. Willamowski, D. Arregui, G. Csurka, C. Dance, L. Fan, Categorizing nine visual classes using local appearance descriptors, in: Workshop on Learning for Adaptable Visual Systems in IEEE Internat. Conference on Pattern Recognition, 2004.
[11] H. J. Seo, P. Milanfar, Action recognition from one example, IEEE Trans. Pattern Anal. Mach. Intell. 33 (5) (2011) 867–882. doi:10.1109/TPAMI.2010.156.
[12] M. A. R. Ahad, J. Tan, H. Kim, S. Ishikawa, Analysis of motion self-occlusion problem for human activity recognition, Vol. 5, 2010, pp. 36–46.
[13] I. Laptev, T. Lindeberg, Space-time interest points, Computer Vision, IEEE International Conference on 1 (2003) 432.
[14] W. Yang, Y. Wang, G. Mori, Human action recognition from a single clip per action, Learning (2009) 482–489.
[15] M. Tang, Recognizing hand gestures with Microsoft's Kinect, stanford edu 14 (4) (2011) 303–313.
[16] Z. Ren, J. Yuan, Z. Zhang, Robust hand gesture recognition based on finger-earth mover's distance with a commodity depth camera, in: Proceedings of the 19th ACM international conference on Multimedia, MM '11, ACM, New York, NY, USA, 2011, pp. 1093–1096. doi:10.1145/2072298.2071946.
[17] R. Zhou, J. Meng, J. Yuan, in: 8th International Conference on Information, Communications and Signal Processing (ICICS), 2011, pp. 1–5.
[18] V. I. Levenshtein, Binary codes capable of correcting deletions, insertions, and reversals, Soviet Physics Doklady 10 (8) (1966) 707–710.
[19] T.-K. Kim, R. Cipolla, Canonical correlation analysis of video volume tensors for action categorization and detection, IEEE Trans. Pattern Anal. Mach. Intell. 31 (8) (2009) 1415–1428. doi:10.1109/TPAMI.2008.167.
[20] Y. Song, D. Demirdjian, R. Davis, Tracking body and hands for gesture recognition: NATOPS aircraft handling signals database, in: FG, IEEE, 2011, pp. 500–506.
[21] Z. Jiang, Z. Lin, L. Davis, Recognizing human actions by learning and matching shape-motion prototype trees, IEEE Trans. Pattern Anal. Mach. Intell. 34 (3) (2012) 533–547. doi:10.1109/TPAMI.2011.147.
[22] B.-W. Hwang, S. Kim, S.-W. Lee, A full-body gesture database for automatic gesture recognition, in: Proceedings of the 7th International Conference on Automatic Face and Gesture Recognition, FGR '06, IEEE Computer Society, Washington, DC, USA, 2006, pp. 243–248. doi:10.1109/FGR.2006.8.
[23] ChaLearn gesture dataset (CGD2011), ChaLearn, California, 2011.
[24] N. Otsu, A threshold selection method from gray-level histograms, IEEE Transactions on Systems, Man, and Cybernetics 9 (1) (1979) 62–66.
[25] R. C. Gonzalez, R. E. Woods, Digital Image Processing, 2nd Edition, Addison-Wesley Longman Publishing Co., Inc., Boston, MA, USA, 2001.
[26] J. D. Golić, M. J. Mihaljević, A generalized correlation attack on a class of stream ciphers based on the Levenshtein distance, Journal of Cryptology 3 (1991) 201–212, 10.1007/BF00196912.
[27] A. Marzal, E. Vidal, Computation of normalized edit distance and applications, Pattern Analysis and Machine Intelligence, IEEE Transactions on 15 (9) (1993) 926–932. doi:10.1109/34.232078.
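
As an illustration of the processing chain described in Sections 2.2 to 2.4 and of the evaluation measure of Section 3, the following is a minimal sketch in Python with NumPy. It is not the authors' implementation: all function names are hypothetical, the depth frames are assumed to be already converted to grayscale and background subtracted, the normalization of the change signal by its maximum is an assumption (the text only states that $P_d$ is normalized), and the "intelligent" selection step of Method 3 is not reproduced.

```python
import numpy as np


def std_feature(frames):
    """Temporal feature: per-pixel standard deviation across the D frames.

    This is the square root of the quantity in Eq. (1), arranged as in Eq. (2).
    """
    stack = np.asarray(frames, dtype=float)          # shape (D, r, s)
    return stack.std(axis=0)                         # shape (r, s)


def spectral_std_feature(frames):
    """Spectral feature: STD across frames of |2D DFT| of each frame, cf. Eq. (3)."""
    stack = np.asarray(frames, dtype=float)
    r, s = stack.shape[1:]
    magnitude = np.abs(np.fft.fft2(stack, axes=(1, 2))) / (r * s)
    return magnitude.std(axis=0)


def change_signal(frames):
    """Sum of squared differences from the first frame, cf. Eqs. (4)-(5).

    Dividing by the maximum is an assumed normalization.
    """
    stack = np.asarray(frames, dtype=float)
    p = ((stack - stack[0]) ** 2).sum(axis=(1, 2))
    peak = p.max()
    return p / peak if peak > 0 else p


def correlation(a, b):
    """Correlation coefficient between two equally sized matrices, cf. Eq. (6)."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a ** 2).sum() * (b ** 2).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def best_match(test_feature, train_features):
    """Index of the training template with the highest correlation (Methods 1 and 2)."""
    scores = [correlation(test_feature, f) for f in train_features]
    return int(np.argmax(scores))


def levenshtein(recognized, truth):
    """Minimum number of insertions, deletions and substitutions between two label lists."""
    prev = list(range(len(truth) + 1))
    for i, r_label in enumerate(recognized, start=1):
        curr = [i]
        for j, t_label in enumerate(truth, start=1):
            cost = 0 if r_label == t_label else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def percent_ld(recognized_seqs, truth_seqs):
    """Sum of LDs over a lexicon divided by the number of true gestures, times 100."""
    total = sum(levenshtein(r, t) for r, t in zip(recognized_seqs, truth_seqs))
    n_true = sum(len(t) for t in truth_seqs)
    return 100.0 * total / n_true


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Three synthetic "gestures" of 12 frames each (random data, for illustration only).
    train = [rng.random((12, 24, 32)) for _ in range(3)]
    # Gesture 1 as seen by a camera shifted by a few pixels (circular shift).
    test = np.roll(train[1], shift=(2, 3), axis=(1, 2))
    templates = [spectral_std_feature(g) for g in train]
    print("best match:", best_match(spectral_std_feature(test), templates))
    print("percent LD:", percent_ld([[1, 2, 3], [4]], [[1, 3], [4]]))
```

In this sketch the shift is circular, so the magnitude spectrum is exactly unchanged; with a real camera shift the image content entering and leaving the frame makes the invariance only approximate, which is consistent with the paper's claim that the spectral feature reduces, rather than removes, the effect of camera movement.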
