The Anatomy of the Grid

Enabling Scalable Virtual Organizations *

Ian Foster •¶ Carl Kesselman §

Steven Tuecke •

{foster, tuecke}@mcs.anl.gov, carl@isi.edu

Abstract

“Grid” computing has emerged as an important new field, distinguished from conventional

distributed computing by its focus on large-scale resource sharing, innovative applications, and,

in some cases, high-performance orientation. In this article, we define this new field. First, we

review the “Grid problem,” which we define as flexible, secure, coordinated resource sharing

among dynamic collections of individuals, institutions, and resources—what we refer to as virtual

organizations. In such settings, we encounter unique authentication, authorization, resource

access, resource discovery, and other challenges. It is this class of problem that is addressed by

Grid technologies. Next, we present an extensible and open Grid architecture, in which

protocols, services, application programming interfaces, and software development kits are

categorized according to their roles in enabling resource sharing. We describe requirements that

we believe any such mechanisms must satisfy and we discuss the importance of defining a

compact set of intergrid protocols to enable interoperability among different Grid systems.

Finally, we discuss how Grid technologies relate to other contemporary technologies, including

enterprise integration, application service provider, storage service provider, and peer-to-peer

computing. We maintain that Grid concepts and technologies complement and have much to

contribute to these other approaches.

1 Introduction

The term “the Grid” was coined in the mid-1990s to denote a proposed distributed computing

infrastructure for advanced science and engineering [34]. Considerable progress has since been

made on the construction of such an infrastructure (e.g., [10, 16, 46, 59]), but the term “Grid” has

also been conflated, at least in popular perception, to embrace everything from advanced

networking to artificial intelligence. One might wonder whether the term has any real substance

and meaning. Is there really a distinct “Grid problem” and hence a need for new “Grid

technologies”? If so, what is the nature of these technologies, and what is their domain of

applicability? While numerous groups have interest in Grid concepts and share, to a significant

extent, a common vision of Grid architecture, we do not see consensus on the answers to these

questions.

Our purpose in this article is to argue that the Grid concept is indeed motivated by a real and

specific problem and that there is an emerging, well-defined Grid technology base that addresses

significant aspects of this problem. In the process, we develop a detailed architecture and

roadmap for current and future Grid technologies. Furthermore, we assert that while Grid

technologies are currently distinct from other major technology trends, such as Internet,

enterprise, distributed, and peer-to-peer computing, these other trends can benefit significantly

from growing into the problem space addressed by Grid technologies.

• Mathematics and Computer Science Division, Argonne National Laboratory, Argonne, IL 60439.

¶ Department of Computer Science, The University of Chicago, Chicago, IL 60657.

§ Information Sciences Institute, The University of Southern California, Marina del Rey, CA 90292.

* To appear: Intl J. Supercomputer Applications, 2001.

The real and specific problem that underlies the Grid concept is coordinated resource sharing

and problem solving in dynamic, multi-institutional virtual organizations. The sharing that we

are concerned with is not primarily file exchange but rather direct access to computers, software,

data, and other resources, as is required by a range of collaborative problem-solving and resource-

brokering strategies emerging in industry, science, and engineering. This sharing is, necessarily,

highly controlled, with resource providers and consumers defining clearly and carefully just what

is shared, who is allowed to share, and the conditions under which sharing occurs. A set of

individuals and/or institutions defined by such sharing rules form what we call a virtual

organization (VO).

The following are examples of VOs: the application service providers, storage service providers,

cycle providers, and consultants engaged by a car manufacturer to perform scenario evaluation

during planning for a new factory; members of an industrial consortium bidding on a new

aircraft; a crisis management team and the databases and simulation systems that they use to plan

a response to an emergency situation; and members of a large, international, multiyear high-

energy physics collaboration. Each of these examples represents an approach to computing and

problem solving based on collaboration in computation- and data-rich environments.

As these examples show, VOs vary tremendously in their purpose, scope, size, duration,

structure, community, and sociology. Nevertheless, careful study of underlying technology

requirements leads us to identify a broad set of common concerns and requirements. In

particular, we see a need for highly flexible sharing relationships, ranging from client-server to

peer-to-peer; for sophisticated and precise levels of control over how shared resources are used,

including fine-grained and multi-stakeholder access control, delegation, and application of local

and global policies; for sharing of varied resources, ranging from programs, files, and data to

computers, sensors, and networks; and for diverse usage modes, ranging from single user to

multi-user and from performance-sensitive to cost-sensitive and hence embracing issues of quality

of service, scheduling, co-allocation, and accounting.

Current distributed computing technologies do not address the concerns and requirements just

listed. For example, current Internet technologies address communication and information

exchange among computers but do not provide integrated approaches to the coordinated use of

resources at multiple sites for computation. Business-to-business exchanges [57] focus on

information sharing (often via centralized servers). So do virtual enterprise technologies,

although here sharing may eventually extend to applications and physical devices (e.g., [8]).

Enterprise distributed computing technologies such as CORBA and Enterprise Java enable

resource sharing within a single organization. The Open Group’s Distributed Computing

Environment (DCE) supports secure resource sharing across sites, but most VOs would find it too

burdensome and inflexible. Storage service providers (SSPs) and application service providers

(ASPs) allow organizations to outsource storage and computing requirements to other parties, but

only in constrained ways: for example, SSP resources are typically linked to a customer via a

virtual private network (VPN). Emerging “Distributed computing” companies seek to harness

idle computers on an international scale [31] but, to date, support only highly centralized access

to those resources. In summary, current technology either does not accommodate the range of

resource types or does not provide the flexibility and control on sharing relationships needed to

establish VOs.

It is here that Grid technologies enter the picture. Over the past five years, research and

development efforts within the Grid community have produced protocols, services, and tools that

address precisely the challenges that arise when we seek to build scalable VOs. These

technologies include security solutions that support management of credentials and policies when

computations span multiple institutions; resource management protocols and services that support

secure remote access to computing and data resources and the co-allocation of multiple resources;

information query protocols and services that provide configuration and status information about

resources, organizations, and services; and data management services that locate and transport

datasets between storage systems and applications.

Because of their focus on dynamic, cross-organizational sharing, Grid technologies complement

rather than compete with existing distributed computing technologies. For example, enterprise

distributed computing systems can use Grid technologies to achieve resource sharing across

institutional boundaries; in the ASP/SSP space, Grid technologies can be used to establish

dynamic markets for computing and storage resources, hence overcoming the limitations of

current static configurations. We discuss the relationship between Grids and these technologies

in more detail below.

In the rest of this article, we expand upon each of these points in turn. Our objectives are to (1)

clarify the nature of VOs and Grid computing for those unfamiliar with the area; (2) contribute to

the emergence of Grid computing as a discipline by establishing a standard vocabulary and

defining an overall architectural framework; and (3) define clearly how Grid technologies relate

to other technologies, explaining both why emerging technologies do not yet solve the Grid

computing problem and how these technologies can benefit from Grid technologies.

It is our belief that VOs have the potential to change dramatically the way we use computers to

solve problems, much as the Web has changed how we exchange information. As the examples

presented here illustrate, the need to engage in collaborative processes is fundamental to many

diverse disciplines and activities: it is not limited to science, engineering and business activities.

It is because of this broad applicability of VO concepts that Grid technology is important.

2 The Emergence of Virtual Organizations

Consider the following four scenarios:

1. A company needing to reach a decision on the placement of a new factory invokes a

sophisticated financial forecasting model from an ASP, providing it with access to

appropriate proprietary historical data from a corporate database on storage systems

operated by an SSP. During the decision-making meeting, what-if scenarios are run

collaboratively and interactively, even though the division heads participating in the

decision are located in different cities. The ASP itself contracts with a cycle provider for

additional “oomph” during particularly demanding scenarios, requiring of course that

cycles meet desired security and performance requirements.

2. An industrial consortium formed to develop a feasibility study for a next-generation

supersonic aircraft undertakes a highly accurate multidisciplinary simulation of the entire

aircraft. This simulation integrates proprietary software components developed by

different participants, with each component operating on that participant’s computers and

having access to appropriate design databases and other data made available to the

consortium by its members.

3. A crisis management team responds to a chemical spill by using local weather and soil

models to estimate the spread of the spill, determining the impact based on population

location as well as geographic features such as rivers and water supplies, creating a short-

term mitigation plan (perhaps based on chemical reaction models), and tasking

emergency response personnel by planning and coordinating evacuation, notifying

hospitals, and so forth.

4. Thousands of physicists at hundreds of laboratories and universities worldwide come

together to design, create, operate, and analyze the products of a major detector at CERN,

the European high energy physics laboratory. During the analysis phase, they pool their

computing, storage, and networking resources to create a “Data Grid” capable of

analyzing petabytes of data [22, 44, 53].

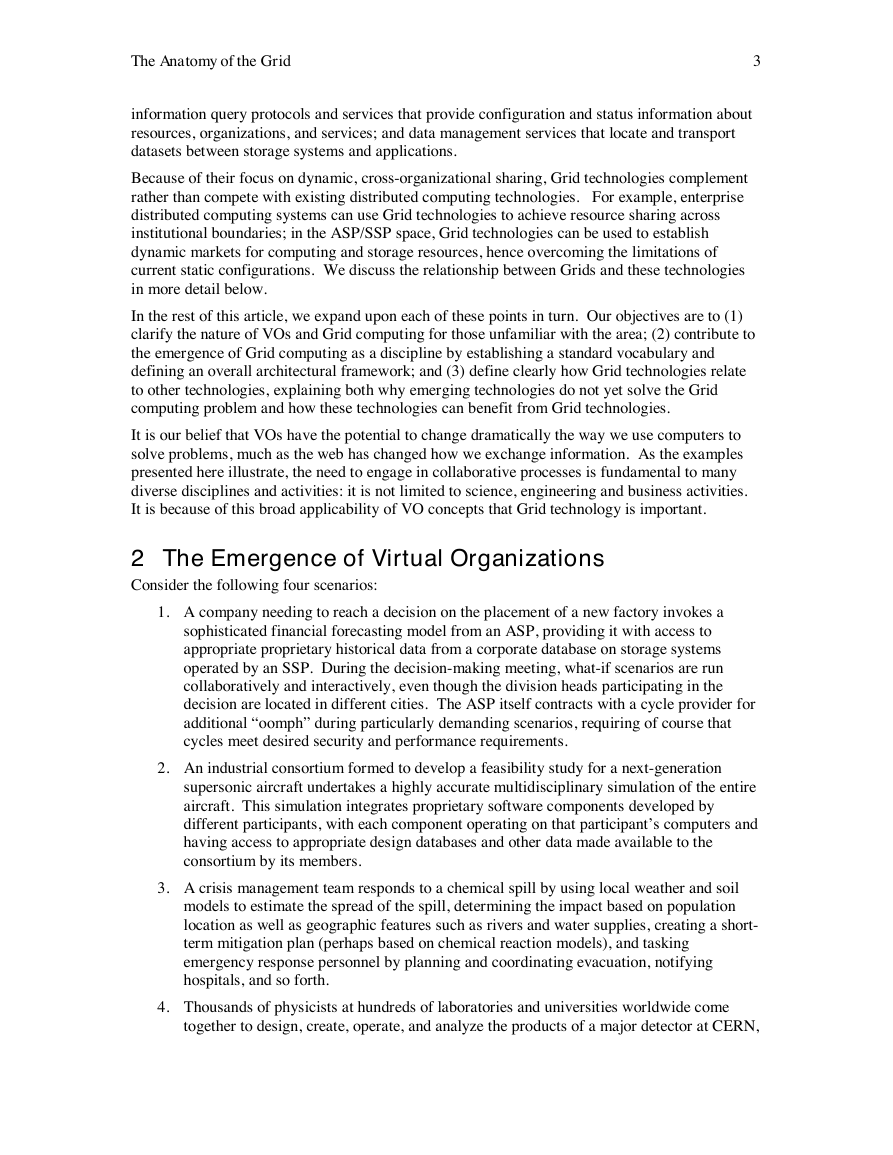

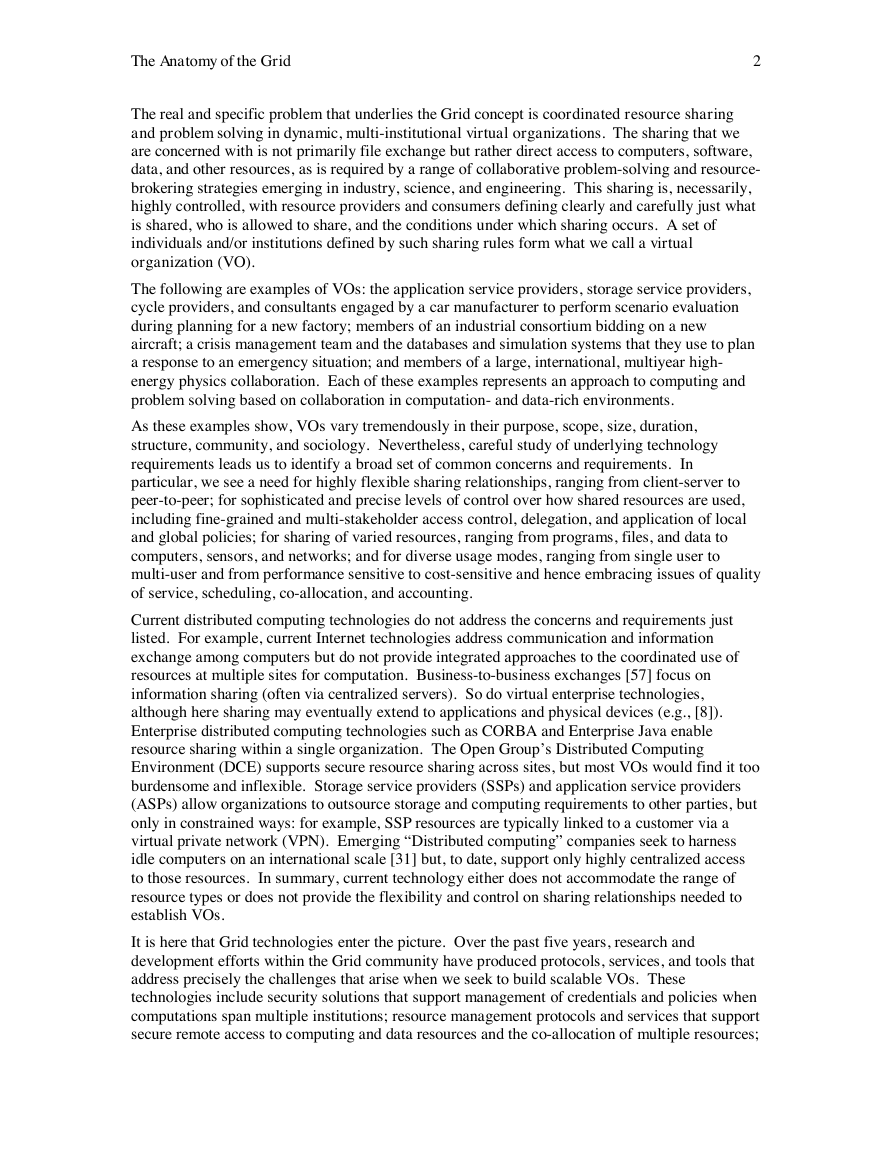

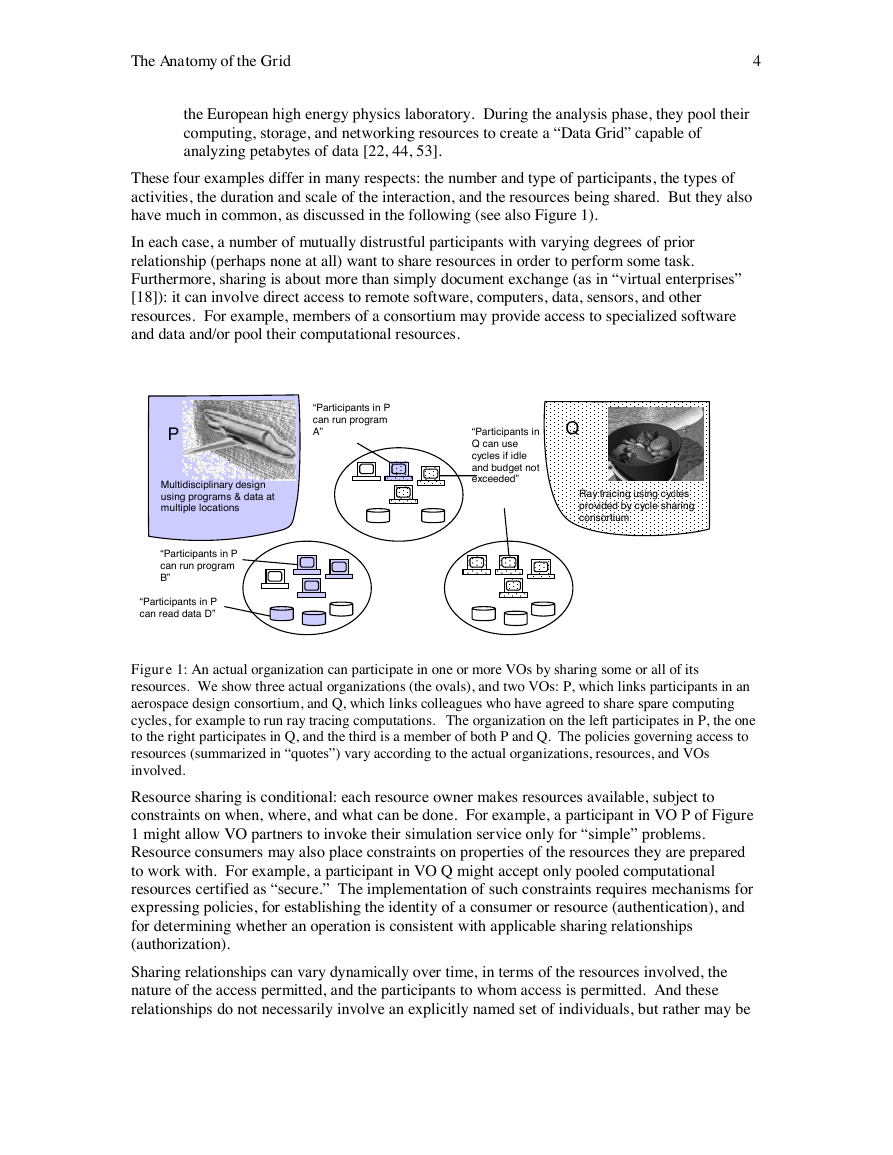

These four examples differ in many respects: the number and type of participants, the types of

activities, the duration and scale of the interaction, and the resources being shared. But they also

have much in common, as discussed in the following (see also Figure 1).

In each case, a number of mutually distrustful participants with varying degrees of prior

relationship (perhaps none at all) want to share resources in order to perform some task.

Furthermore, sharing is about more than simply document exchange (as in “virtual enterprises”

[18]): it can involve direct access to remote software, computers, data, sensors, and other

resources. For example, members of a consortium may provide access to specialized software

and data and/or pool their computational resources.

[Figure 1 graphic: VO P, “Multidisciplinary design using programs & data at multiple locations,” with policies “Participants in P can run program A,” “Participants in P can run program B,” and “Participants in P can read data D”; VO Q, “Ray tracing using cycles provided by cycle sharing consortium,” with policy “Participants in Q can use cycles if idle and budget not exceeded.”]

Figure 1: An actual organization can participate in one or more VOs by sharing some or all of its

resources. We show three actual organizations (the ovals), and two VOs: P, which links participants in an

aerospace design consortium, and Q, which links colleagues who have agreed to share spare computing

cycles, for example to run ray tracing computations. The organization on the left participates in P, the one

to the right participates in Q, and the third is a member of both P and Q. The policies governing access to

resources (summarized in “quotes”) vary according to the actual organizations, resources, and VOs

involved.

Resource sharing is conditional: each resource owner makes resources available, subject to

constraints on when, where, and what can be done. For example, a participant in VO P of Figure

1 might allow VO partners to invoke their simulation service only for “simple” problems.

Resource consumers may also place constraints on properties of the resources they are prepared

to work with. For example, a participant in VO Q might accept only pooled computational

resources certified as “secure.” The implementation of such constraints requires mechanisms for

expressing policies, for establishing the identity of a consumer or resource (authentication), and

for determining whether an operation is consistent with applicable sharing relationships

(authorization).
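To make the distinction between policy, authentication, and authorization concrete, the following minimal Python sketch transcribes the Figure 1 policies as rules and evaluates requests against them. It is illustrative only: the `Request`, `Rule`, `authenticate`, and `authorize` names are hypothetical, "authentication" is reduced to membership in a trusted set rather than verification of a credential, and the budget test is our own reading of “if idle and budget not exceeded.”

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set


@dataclass
class Request:
    subject: str    # identity claimed by the consumer
    vo: str         # VO on whose behalf the request is made
    action: str     # e.g. "run", "read", "use_cycles"
    resource: str   # e.g. "program A", "data D", "cycles"
    attrs: Dict[str, Any] = field(default_factory=dict)  # request/resource state


def always(req: Request) -> bool:
    return True


@dataclass
class Rule:
    vo: str
    action: str
    resource: str
    condition: Callable[[Request], bool] = always  # extra owner-imposed constraint


def authenticate(subject: str, trusted: Set[str]) -> bool:
    # Hypothetical placeholder for real authentication (verifying a credential):
    # an identity counts as authenticated if it appears in a trusted set.
    return subject in trusted


def authorize(req: Request, rules: List[Rule]) -> bool:
    # Deny by default: permit only if some rule matches and its condition holds.
    return any(
        r.vo == req.vo and r.action == req.action and r.resource == req.resource
        and r.condition(req)
        for r in rules
    )


def check(req: Request, trusted: Set[str], rules: List[Rule]) -> bool:
    return authenticate(req.subject, trusted) and authorize(req, rules)


# The policies quoted in Figure 1, transcribed as rules.
RULES = [
    Rule("P", "run", "program A"),
    Rule("P", "run", "program B"),
    Rule("P", "read", "data D"),
    # "Participants in Q can use cycles if idle and budget not exceeded"
    Rule("Q", "use_cycles", "cycles",
         condition=lambda req: req.attrs.get("idle", False)
         and req.attrs.get("spent", 0) <= req.attrs.get("budget", 0)),
]
TRUSTED = {"alice", "bob"}
```

Denying by default means that a request is refused unless some sharing rule explicitly covers it, which matches the conditional, owner-controlled character of VO sharing described above.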

Sharing relationships can vary dynamically over time, in terms of the resources involved, the

nature of the access permitted, and the participants to whom access is permitted. And these

relationships do not necessarily involve an explicitly named set of individuals, but rather may be

defined implicitly by the policies that govern access to resources. For example, an organization

might enable access by anyone who can demonstrate that they are a “customer” or a “student.”

The dynamic nature of sharing relationships means that we require mechanisms for discovering

and characterizing the nature of the relationships that exist at a particular point in time. For

example, a new participant joining VO Q must be able to determine what resources it is able to

access, the “quality” of these resources, and the policies that govern access.

Sharing relationships are often not simply client-server, but peer-to-peer: providers can be

consumers, and sharing relationships can exist among any subset of participants. Sharing

relationships may be combined to coordinate use across many resources, each owned by different

organizations. For example, in VO Q, a computation started on one pooled computational

resource may subsequently access data or initiate subcomputations elsewhere. The ability to

delegate authority in controlled ways becomes important in such situations, as do mechanisms for

coordinating operations across multiple resources (e.g., coscheduling).
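One way to read “delegate authority in controlled ways” is that a computation acting on a user's behalf may hand on only a subset of the rights it holds. The Python sketch below illustrates that narrowing rule under stated assumptions: the `Credential` class and the right names such as `"read:D"` are hypothetical, and there are no signatures or expiry times, so this is not a model of any real credential format.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Credential:
    # Hypothetical delegation record: no cryptography, illustration only.
    holder: str
    rights: FrozenSet[str]
    parent: Optional["Credential"] = None  # None for the root (user's own) credential

    def delegate(self, to: str, rights: FrozenSet[str]) -> "Credential":
        # Controlled delegation: hand on a subset of our own rights, never more.
        extra = rights - self.rights
        if extra:
            raise PermissionError(f"cannot delegate rights not held: {sorted(extra)}")
        return Credential(holder=to, rights=rights, parent=self)


def chain_valid(cred: Credential) -> bool:
    # Walk back to the root, checking that rights only ever narrow.
    while cred.parent is not None:
        if not cred.rights <= cred.parent.rights:
            return False
        cred = cred.parent
    return True


def permits(cred: Credential, right: str) -> bool:
    return right in cred.rights and chain_valid(cred)


# A computation on one pooled resource starts a subcomputation elsewhere.
user = Credential("alice", frozenset({"read:D", "run:B", "submit:Q"}))
job = user.delegate("job@site1", frozenset({"read:D", "submit:Q"}))
subjob = job.delegate("subjob@site2", frozenset({"read:D"}))
```

Because `chain_valid` re-checks every link, a record assembled by hand with wider rights than its parent is rejected even though it bypassed `delegate`.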

The same resource may be used in different ways, depending on the restrictions placed on the

sharing and the goal of the sharing. For example, a computer may be used only to run a specific

piece of software in one sharing arrangement, while it may provide generic compute cycles in

another. Because of the lack of a priori knowledge about how a resource may be used,

performance metrics, expectations, and limitations (i.e., quality of service) may be part of the

conditions placed on resource sharing or usage.

These characteristics and requirements define what we term a virtual organization, a concept that

we believe is becoming fundamental to much of modern computing. VOs enable disparate

groups of organizations and/or individuals to share resources in a controlled fashion, so that

members may collaborate to achieve a shared goal.

3 The Nature of Grid Architecture

The establishment, management, and exploitation of dynamic, cross-organizational VO sharing

relationships require new technology. We structure our discussion of this technology in terms of

a Grid architecture that identifies fundamental system components, specifies the purpose and

function of these components, and indicates how these components interact with one another.

In defining a Grid architecture, we start from the perspective that effective VO operation requires

that we be able to establish sharing relationships among any potential participants.

Interoperability is thus the central issue to be addressed. In a networked environment,

interoperability means common protocols. Hence, our Grid architecture is first and foremost a

protocol architecture, with protocols defining the basic mechanisms by which VO users and

resources negotiate, establish, manage, and exploit sharing relationships. A standards-based open

architecture facilitates extensibility, interoperability, portability, and code sharing; standard

protocols make it easy to define standard services that provide enhanced capabilities. We can

also construct Application Programming Interfaces and Software Development Kits (see

Appendix for definitions) to provide the programming abstractions required to create a usable

Grid. Together, this technology and architecture constitute what is often termed middleware

(“the services needed to support a common set of applications in a distributed network

environment” [3]), although we avoid that term here due to its vagueness. We discuss each of

these points in the following.

Why is interoperability such a fundamental concern? At issue is our need to ensure that sharing

relationships can be initiated among arbitrary parties, accommodating new participants

dynamically, across different platforms, languages, and programming environments. In this

context, mechanisms serve little purpose if they are not defined and implemented so as to be

interoperable across organizational boundaries, operational policies, and resource types. Without

interoperability, VO applications and participants are forced to enter into bilateral sharing

arrangements, as there is no assurance that the mechanisms used between any two parties will

extend to any other parties. Without such assurance, dynamic VO formation is all but impossible,

and the types of VOs that can be formed are severely limited. Just as the Web revolutionized

information sharing by providing a universal protocol and syntax (HTTP and HTML) for

information exchange, so we require standard protocols and syntaxes for general resource

sharing.

Why are protocols critical to interoperability? A protocol definition specifies how distributed

system elements interact with one another in order to achieve a specified behavior, and the

structure of the information exchanged during this interaction. This focus on externals

(interactions) rather than internals (software, resource characteristics) has important pragmatic

benefits. VOs tend to be fluid; hence, the mechanisms used to discover resources, establish

identity, determine authorization, and initiate sharing must be flexible and lightweight, so that

resource-sharing arrangements can be established and changed quickly. Because VOs

complement rather than replace existing institutions, sharing mechanisms cannot require

substantial changes to local policies and must allow individual institutions to maintain ultimate

control over their own resources. Since protocols govern the interaction between components,

and not the implementation of the components, local control is preserved.
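The point that a protocol fixes only the externals can be illustrated with a toy status-query exchange. In the Python sketch below, the JSON message layout, the `ClusterProvider` and `WorkstationProvider` classes, and the `query` client are all hypothetical and do not correspond to any Globus protocol. The two providers keep entirely different internal state, yet one client works with both because each speaks the same message format.

```python
import json

# Hypothetical protocol: a status request and its reply, defined purely by
# the structure of the messages exchanged (JSON objects with fixed keys).
REPLY_KEYS = {"resource", "load", "free_slots"}


def encode_request(resource_id: str) -> bytes:
    return json.dumps({"op": "status", "resource": resource_id}).encode()


def decode_reply(data: bytes) -> dict:
    msg = json.loads(data)
    if set(msg) != REPLY_KEYS:
        raise ValueError(f"malformed reply: {sorted(msg)}")
    return msg


class ClusterProvider:
    """One implementation: tracks per-node utilization internally."""

    def __init__(self) -> None:
        self.node_util = {"n1": 0.5, "n2": 0.0, "n3": 1.0}

    def handle(self, data: bytes) -> bytes:
        req = json.loads(data)
        utils = list(self.node_util.values())
        reply = {
            "resource": req["resource"],
            "load": sum(utils) / len(utils),
            "free_slots": sum(1 for u in utils if u == 0.0),
        }
        return json.dumps(reply).encode()


class WorkstationProvider:
    """A different implementation: a single machine with a job list."""

    def __init__(self) -> None:
        self.running_jobs: list = []

    def handle(self, data: bytes) -> bytes:
        req = json.loads(data)
        busy = bool(self.running_jobs)
        reply = {"resource": req["resource"],
                 "load": 1.0 if busy else 0.0,
                 "free_slots": 0 if busy else 1}
        return json.dumps(reply).encode()


def query(provider, resource_id: str) -> dict:
    # The client depends only on the message format, not on provider internals.
    return decode_reply(provider.handle(encode_request(resource_id)))
```

Either provider could change its internal bookkeeping without affecting the client, which is the sense in which local control is preserved.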

Why are services important? A service (see Appendix) is defined solely by the protocol that it

speaks and the behaviors that it implements. The definition of standard services—for access to

computation, access to data, resource discovery, coscheduling, data replication, and so forth—

allows us to enhance the services offered to VO participants and also to abstract away resource-

specific details that would otherwise hinder the development of VO applications.

Why do we also consider Application Programming Interfaces (APIs) and Software Development

Kits (SDKs)? There is, of course, more to VOs than interoperability, protocols, and services.

Developers must be able to develop sophisticated applications in complex and dynamic execution

environments. Users must be able to operate these applications. Application robustness,

correctness, development costs, and maintenance costs are all important concerns. Standard

abstractions, APIs, and SDKs can accelerate code development, enable code sharing, and enhance

application portability. APIs and SDKs are an adjunct to, not an alternative to, protocols.

Without standard protocols, interoperability can be achieved at the API level only by using a

single implementation everywhere—infeasible in many interesting VOs—or by having every

implementation know the details of every other implementation. (The Jini approach [6] of

downloading protocol code to a remote site does not circumvent this requirement.)

In summary, our approach to Grid architecture emphasizes the identification and definition of

protocols and services, first; and APIs and SDKs, second.

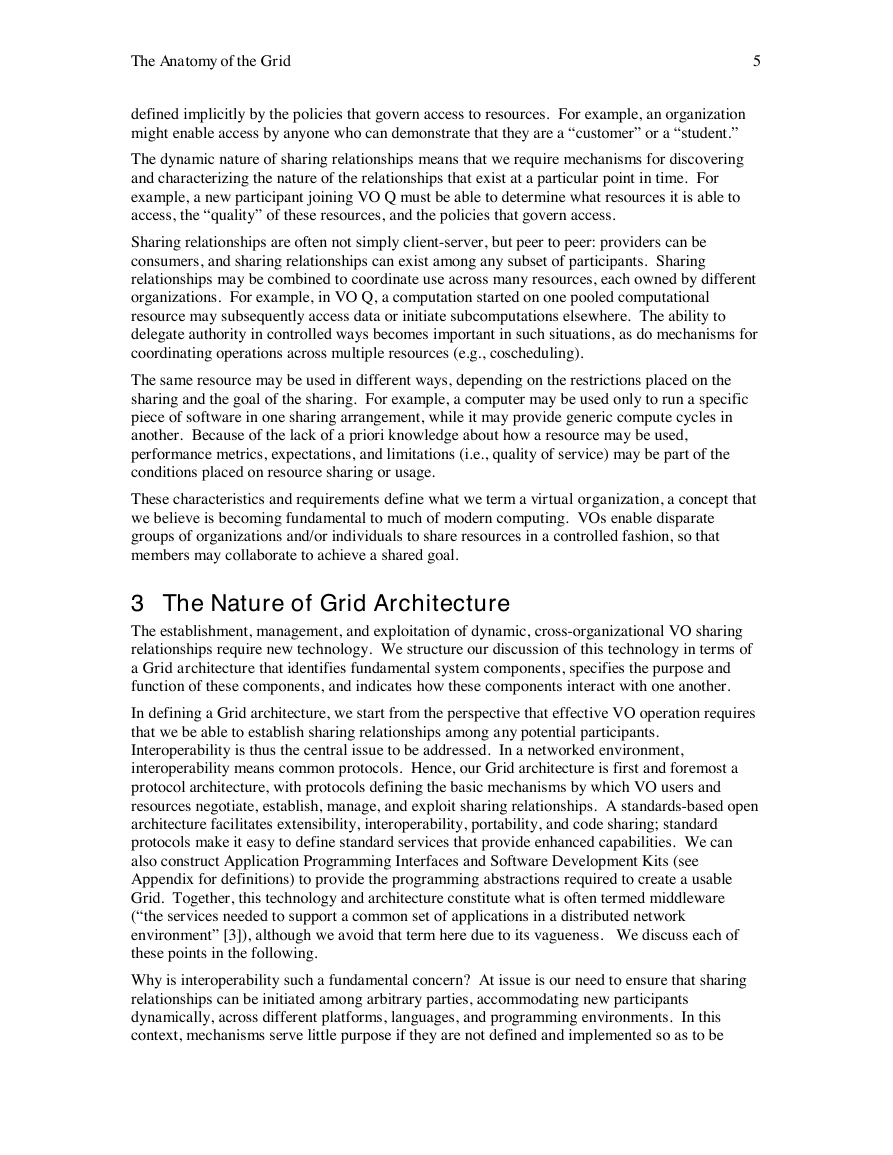

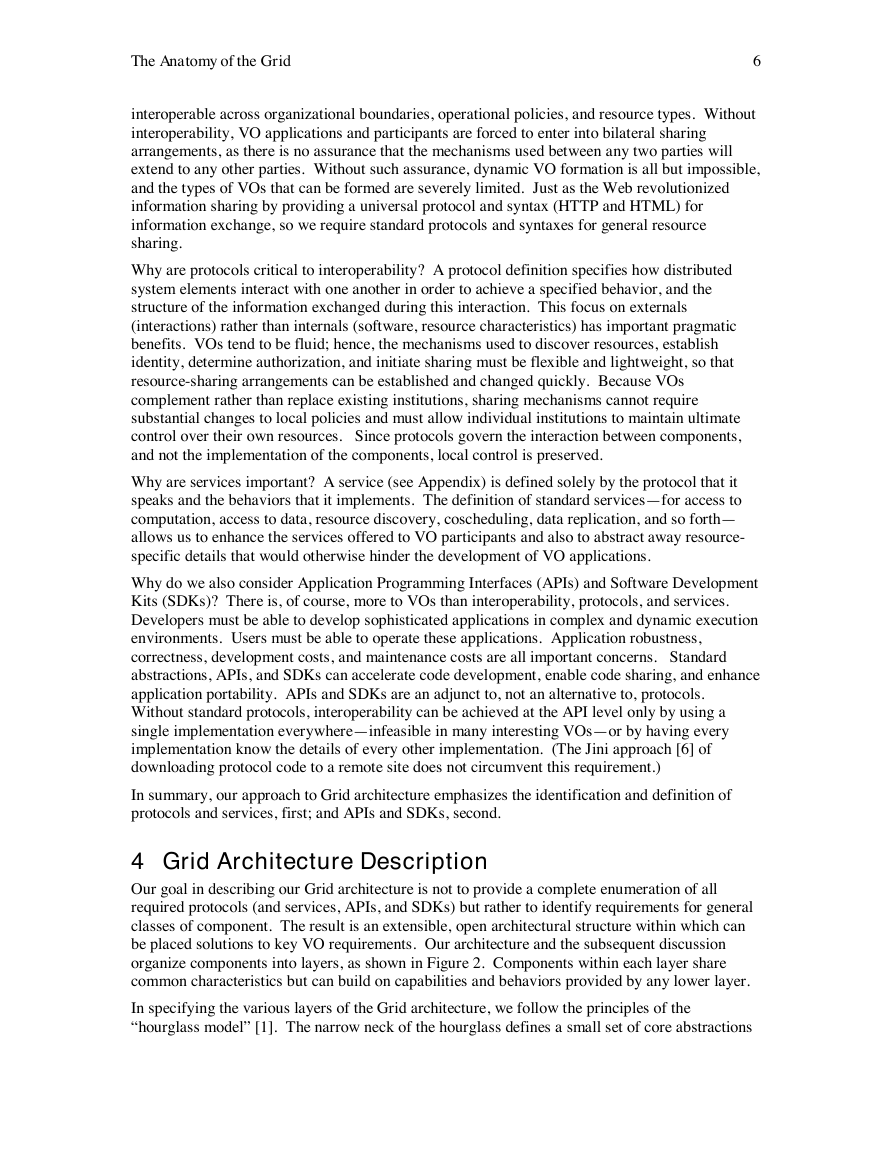

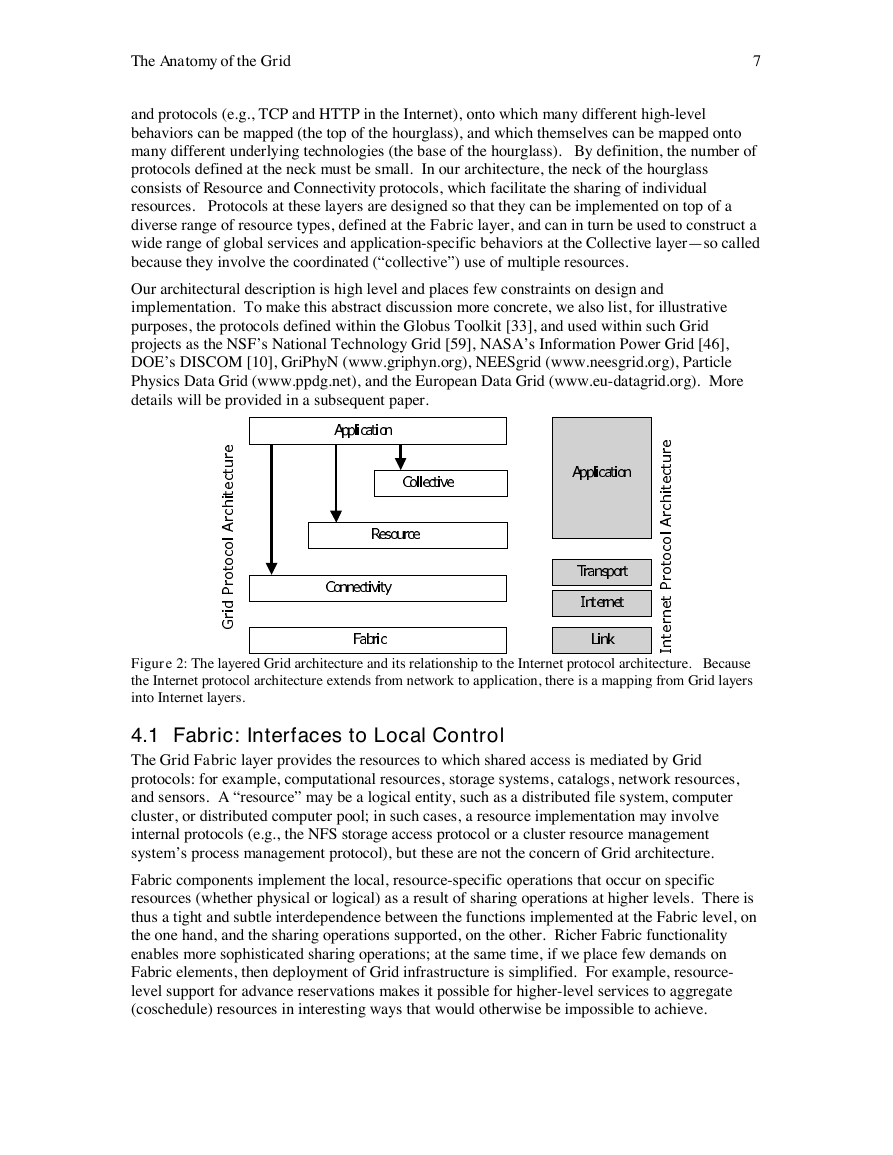

4 Grid Architecture Description

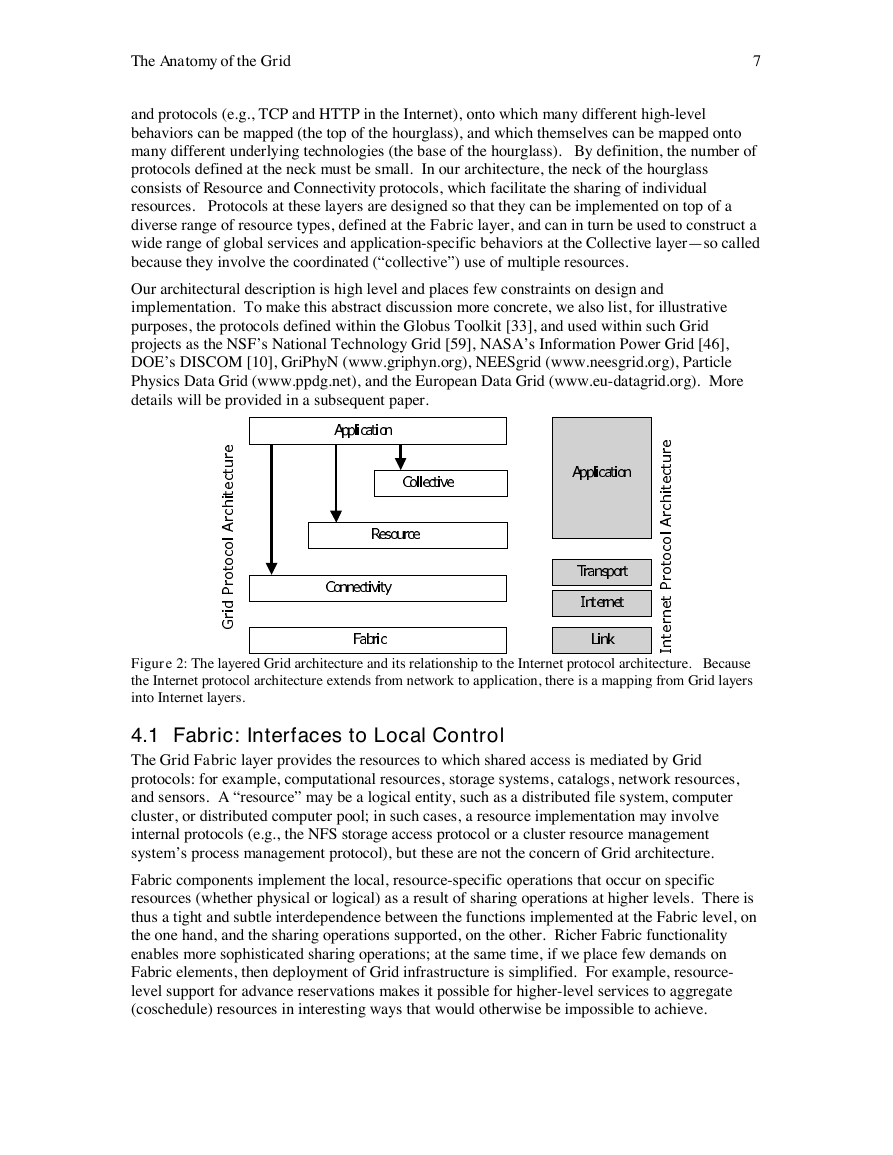

Our goal in describing our Grid architecture is not to provide a complete enumeration of all

required protocols (and services, APIs, and SDKs) but rather to identify requirements for general

classes of component. The result is an extensible, open architectural structure within which can

be placed solutions to key VO requirements. Our architecture and the subsequent discussion

organize components into layers, as shown in Figure 2. Components within each layer share

common characteristics but can build on capabilities and behaviors provided by any lower layer.

In specifying the various layers of the Grid architecture, we follow the principles of the

“hourglass model” [1]. The narrow neck of the hourglass defines a small set of core abstractions

and protocols (e.g., TCP and HTTP in the Internet), onto which many different high-level

behaviors can be mapped (the top of the hourglass), and which themselves can be mapped onto

many different underlying technologies (the base of the hourglass). By definition, the number of

protocols defined at the neck must be small. In our architecture, the neck of the hourglass

consists of Resource and Connectivity protocols, which facilitate the sharing of individual

resources. Protocols at these layers are designed so that they can be implemented on top of a

diverse range of resource types, defined at the Fabric layer, and can in turn be used to construct a

wide range of global services and application-specific behaviors at the Collective layer—so called

because they involve the coordinated (“collective”) use of multiple resources.
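The layering rule, that a component may build on capabilities provided by lower layers, can be stated as a simple dependency check. The Python sketch below is illustrative only: the component names are hypothetical, and it assumes that “any lower layer” is read strictly, so both same-layer and upward dependencies are flagged.

```python
from enum import IntEnum
from typing import Dict, List, Tuple


class Layer(IntEnum):
    # Ordered bottom (Fabric) to top (Application).
    FABRIC = 1
    CONNECTIVITY = 2
    RESOURCE = 3
    COLLECTIVE = 4
    APPLICATION = 5


def may_use(user: Layer, provider: Layer) -> bool:
    # Assumption: "any lower layer" is read strictly, so same-layer and
    # upward dependencies are both rejected.
    return provider < user


def layering_violations(layer_of: Dict[str, Layer],
                        uses: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Return (component, dependency) pairs that break the layering rule."""
    return [(c, d) for c, deps in uses.items() for d in deps
            if not may_use(layer_of[c], layer_of[d])]


# Hypothetical component names, for illustration only.
LAYER_OF = {
    "local_scheduler": Layer.FABRIC,
    "secure_channel": Layer.CONNECTIVITY,
    "remote_job_protocol": Layer.RESOURCE,
    "co_allocator": Layer.COLLECTIVE,
    "design_app": Layer.APPLICATION,
}
USES = {
    "design_app": ["co_allocator", "remote_job_protocol"],
    "co_allocator": ["remote_job_protocol", "secure_channel"],
    "remote_job_protocol": ["secure_channel", "local_scheduler"],
    "local_scheduler": ["co_allocator"],  # upward dependency: a violation
}
```

Here the only reported violation is the Fabric-level scheduler reaching up to a Collective service; an application is free to use both Collective and Resource components directly.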

Our architectural description is high level and places few constraints on design and

implementation. To make this abstract discussion more concrete, we also list, for illustrative

purposes, the protocols defined within the Globus Toolkit [33], and used within such Grid

projects as the NSF’s National Technology Grid [59], NASA’s Information Power Grid [46],

DOE’s DISCOM [10], GriPhyN (www.griphyn.org), NEESgrid (www.neesgrid.org), Particle

Physics Data Grid (www.ppdg.net), and the European Data Grid (www.eu-datagrid.org). More

details will be provided in a subsequent paper.

[Figure 2 graphic: Grid Protocol Architecture layers (top to bottom): Application, Collective, Resource, Connectivity, Fabric. Internet Protocol Architecture layers (top to bottom): Application, Transport, Internet, Link.]

Figure 2: The layered Grid architecture and its relationship to the Internet protocol architecture. Because

the Internet protocol architecture extends from network to application, there is a mapping from Grid layers

into Internet layers.

4.1 Fabric: Interfaces to Local Control

The Grid Fabric layer provides the resources to which shared access is mediated by Grid

protocols: for example, computational resources, storage systems, catalogs, network resources,

and sensors. A “resource” may be a logical entity, such as a distributed file system, computer

cluster, or distributed computer pool; in such cases, a resource implementation may involve

internal protocols (e.g., the NFS storage access protocol or a cluster resource management

system’s process management protocol), but these are not the concern of Grid architecture.

Fabric components implement the local, resource-specific operations that occur on specific

resources (whether physical or logical) as a result of sharing operations at higher levels. There is

thus a tight and subtle interdependence between the functions implemented at the Fabric level, on

the one hand, and the sharing operations supported, on the other. Richer Fabric functionality

enables more sophisticated sharing operations; at the same time, if we place few demands on

Fabric elements, then deployment of Grid infrastructure is simplified. For example, resource-

level support for advance reservations makes it possible for higher-level services to aggregate

(coschedule) resources in interesting ways that would otherwise be impossible to achieve.

However, as in practice few resources support advance reservation “out of the box,” a

requirement for advance reservation increases the cost of incorporating new resources into a Grid.

The ability to build large, integrated systems just-in-time by aggregation (that is, by coscheduling and co-management) is a significant new capability provided by these Grid services.
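To see why resource-level advance reservation enables coscheduling, consider a higher-level service that knows each resource's existing reservations and must find a common window. The Python sketch below assumes hypothetical per-resource lists of half-open busy intervals in abstract time units; it is an illustration of the idea, not an algorithm taken from any Grid toolkit.

```python
from typing import Dict, List, Tuple

Interval = Tuple[int, int]  # half-open [start, end) in abstract time units


def is_free(busy: List[Interval], start: int, end: int) -> bool:
    return all(end <= b_start or start >= b_end for b_start, b_end in busy)


def earliest_common_slot(schedules: Dict[str, List[Interval]],
                         duration: int, not_before: int = 0) -> int:
    """Earliest start at which every resource is free for `duration`.

    The earliest feasible start is either `not_before` or the end of some
    existing reservation, so only those candidates need to be checked.
    """
    candidates = {not_before}
    for busy in schedules.values():
        candidates.update(end for _, end in busy if end >= not_before)
    for t in sorted(candidates):
        if all(is_free(busy, t, t + duration) for busy in schedules.values()):
            return t
    # Not reached for valid intervals: the latest reservation end is always free.
    raise RuntimeError("no common slot found")


# Hypothetical reservation tables for three resources.
SCHEDULES = {
    "cluster": [(0, 4), (10, 12)],
    "storage": [(2, 6)],
    "network": [(0, 1), (7, 9)],
}
```

With these tables, a one-unit job can be coscheduled at time 6, but a three-unit job must wait until time 12. Without reservations at each resource, such a window could be computed but not guaranteed.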

Experience suggests that at a minimum, resources should implement enquiry mechanisms that

permit discovery of their structure, state, and capabilities (e.g., whether they support advance

reservation) on the one hand, and resource management mechanisms that provide some control of

delivered quality of service, on the other. The following brief and partial list provides a resource-

specific characterization of capabilities.

• Computational resources: Mechanisms are required for starting programs and for

monitoring and controlling the execution of the resulting processes. Management

mechanisms that allow control over the resources allocated to processes are useful, as are

advance reservation mechanisms. Enquiry functions are needed for determining

hardware and software characteristics as well as relevant state information such as current

load and queue state in the case of scheduler-managed resources.

• Storage resources: Mechanisms are required for putting and getting files. Third-party

and high-performance (e.g., striped) transfers are useful [61]. So are mechanisms for

reading and writing subsets of a file and/or executing remote data selection or reduction

functions [14]. Management mechanisms that allow control over the resources allocated

to data transfers (space, disk bandwidth, network bandwidth, CPU) are useful, as are

advance reservation mechanisms. Enquiry functions are needed for determining

hardware and software characteristics as well as relevant load information such as

available space and bandwidth utilization.

• Network resources: Management mechanisms that provide control over the resources

allocated to network transfers (e.g., prioritization, reservation) can be useful. Enquiry

functions should be provided to determine network characteristics and load.

• Code repositories: This specialized form of storage resource requires mechanisms for

managing versioned source and object code: for example, a version control system such as CVS.

• Catalogs: This specialized form of storage resource requires mechanisms for

implementing catalog query and update operations: for example, a relational database [9].
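The enquiry mechanisms listed for computational resources are what make discovery possible: a new participant can ask each resource for its structure, state, and capabilities and then filter on them. The Python sketch below is a minimal illustration under stated assumptions. The `ComputeResource` fields, the `discover` function, and the example pool are hypothetical, and load per CPU is used as a crude stand-in for the “quality” of a resource.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ComputeResource:
    # Hypothetical Fabric-level description of a computational resource.
    name: str
    os: str
    cpus: int
    load: float                        # e.g. current load average
    queue_length: int                  # jobs waiting in the local scheduler
    supports_advance_reservation: bool

    def enquire(self) -> Dict[str, object]:
        # Enquiry mechanism: report structure, state, and capabilities.
        return {
            "name": self.name, "os": self.os, "cpus": self.cpus,
            "load": self.load, "queue_length": self.queue_length,
            "supports_advance_reservation": self.supports_advance_reservation,
        }


def discover(resources: List[ComputeResource], min_cpus: int = 1,
             need_reservation: bool = False,
             max_load_per_cpu: float = 1.0) -> List[str]:
    """Names of matching resources, least loaded (per CPU) first."""
    matches = []
    for r in resources:
        info = r.enquire()
        if info["cpus"] < min_cpus:
            continue
        if need_reservation and not info["supports_advance_reservation"]:
            continue
        load_per_cpu = info["load"] / info["cpus"]
        if load_per_cpu > max_load_per_cpu:
            continue
        matches.append((load_per_cpu, info["name"]))
    return [name for _, name in sorted(matches)]


# Hypothetical pool of resources, for illustration only.
POOL = [
    ComputeResource("siteA-cluster", "Linux", 64, 16.0, 3, True),
    ComputeResource("siteB-smp", "IRIX", 32, 40.0, 0, False),
    ComputeResource("siteC-ws", "Linux", 2, 0.1, 0, False),
]
```

A request that needs advance reservation, for example, narrows this pool to the one cluster that reports the capability, which is why the text recommends that resources at least advertise it.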

Globus Toolkit: The Globus Toolkit has been designed to use (primarily) existing fabric

components, including vendor-supplied protocols and interfaces. However, if a vendor does not

provide the necessary Fabric-level behavior, the Globus Toolkit includes the missing

functionality. For example, enquiry software is provided for discovering structure and state

information for various common resource types, such as computers (e.g., OS version, hardware

configuration, load [30], scheduler queue status), storage systems (e.g., available space), and

networks (e.g., current and predicted future load [52, 63]), and for packaging this information in a

form that facilitates the implementation of higher-level protocols, specifically at the Resource

layer. Resource management, on the other hand, is generally assumed to be the domain of local

resource managers. One exception is the General-purpose Architecture for Reservation and

Allocation (GARA) [36], which provides a “slot manager” that can be used to implement advance

reservation for resources that do not support this capability. Others have developed

enhancements to the Portable Batch System (PBS) [56] and Condor [49, 50] that support advance

reservation capabilities.
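The idea of a slot manager that adds advance reservation to a resource lacking it can be sketched as admission control over a reservation table. The Python sketch below is only an illustration of that idea and does not reproduce GARA's interface or internals: the `SlotManager` class, its methods, and the integer capacity model (for example, a number of CPUs) are our own assumptions.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, Iterator, Optional, Tuple


@dataclass
class SlotManager:
    """Hypothetical admission control for advance reservations on one resource.

    capacity: units available at any instant (e.g. CPUs).
    Reservations are half-open intervals [start, end) holding `amount` units.
    """
    capacity: int
    reservations: Dict[int, Tuple[int, int, int]] = field(default_factory=dict)
    _ids: Iterator[int] = field(default_factory=lambda: count(1), repr=False)

    def usage_at(self, t: int) -> int:
        return sum(a for s, e, a in self.reservations.values() if s <= t < e)

    def reserve(self, start: int, end: int, amount: int) -> Optional[int]:
        if not (start < end and 0 < amount <= self.capacity):
            raise ValueError("invalid reservation request")
        # Usage only rises where a reservation begins, so checking `start`
        # and every existing start inside [start, end) finds the peak.
        points = {start} | {s for s, _, _ in self.reservations.values()
                            if start <= s < end}
        if any(self.usage_at(p) + amount > self.capacity for p in points):
            return None  # would overcommit: reject
        rid = next(self._ids)
        self.reservations[rid] = (start, end, amount)
        return rid

    def cancel(self, rid: int) -> bool:
        return self.reservations.pop(rid, None) is not None
```

For instance, on an 8-unit resource holding 6 units over [0, 10), a request for 4 units starting at time 5 is refused, while a request for all 8 units starting at time 10 is accepted. A local batch scheduler would still be responsible for actually starting jobs when their reserved slots arrive.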
