Information Theory and Statistical Learning

�

Frank Emmert-Streib Matthias Dehmer

Information Theory

and Statistical Learning

ABC

�

Frank Emmert-Streib

University of Washington

Department of Biostatistics

and Department of Genome Sciences

1705 NE Pacific St.,

Box 357730

Seattle WA 98195, USA

and

Queen’s University Belfast

Computational Biology

and Machine Learning

Center for Cancer Research

and Cell Biology

School of Biomedical Sciences

97 Lisburn Road, Belfast BT9 7BL, UK

v@bio-complexity.com

Matthias Dehmer

Vienna University of Technology

Institute of Discrete Mathematics

and Geometry

Wiedner Hauptstr. 8–10

1040 Vienna, Austria

and

University of Coimbra

Center for Mathematics

Probability and Statistics

Apartado 3008, 3001–454

Coimbra, Portugal

matthias@dehmer.org

ISBN: 978-0-387-84815-0

DOI: 10.1007/978-0-387-84816-7

e-ISBN: 978-0-387-84816-7

Library of Congress Control Number: 2008932107

c Springer Science+Business Media, LLC 2009

All rights reserved. This work may not be translated or copied in whole or in part without the written

permission of the publisher (Springer Science+Business Media, LLC, 233 Spring Street, New York,

NY 10013, USA), except for brief excerpts in connection with reviews or scholarly analysis. Use in

connection with any form of information storage and retrieval, electronic adaptation, computer software,

or by similar or dissimilar methodology now known or hereafter developed is forbidden.

The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are

not identified as such, is not to be taken as an expression of opinion as to whether or not they are subject

to proprietary rights.

Printed on acid-free paper

springer.com

�

Preface

This book presents theoretical and practical results of information theoretic methods

used in the context of statistical learning. Its major goal is to advocate and promote

the importance and usefulness of information theoretic concepts for understanding

and developing the sophisticated machine learning methods necessary not only to

cope with the challenges of modern data analysis but also to gain further insights

into their theoretical foundations. Here Statistical Learning is loosely defined as a

synonym, for, e.g., Applied Statistics, Artificial Intelligence or Machine Learning.

Over the last decades, many approaches and algorithms have been suggested in the

fields mentioned above, for which information theoretic concepts constitute core

ingredients. For this reason we present a selected collection of some of the finest

concepts and applications thereof from the perspective of information theory as the

underlying guiding principles. We consider such a perspective as very insightful and

expect an even greater appreciation for this perspective over the next years.

The book is intended for interdisciplinary use, ranging from Applied Statistics,

Artificial Intelligence, Applied Discrete Mathematics, Computer Science, Infor-

mation Theory, Machine Learning to Physics. In addition, people working in the

hybrid fields of Bioinformatics, Biostatistics, Computational Biology, Computa-

tional Linguistics, Medical Bioinformatics, Neuroinformatics or Web Mining might

profit tremendously from the presented results because these data-driven areas are

in permanent need of new approaches to cope with the increasing flood of high-

dimensional, noisy data that possess seemingly never ending challenges for their

analysis.

Many colleagues, whether consciously or unconsciously, have provided us with

input, help and support before and during the writing of this book. In particular we

would like to thank Shun-ichi Amari, Hamid Arabnia, G¨okhan Bakır, Alexandru T.

Balaban, Teodor Silviu Balaban, Frank J. Balbach, Jo˜ao Barros, Igor Bass, Matthias

Beck, Danail Bonchev, Stefan Borgert, Mieczyslaw Borowiecki, Rudi L. Cilibrasi,

Mike Coleman, Malcolm Cook, Pham Dinh-Tuan, Michael Drmota, Shinto Eguchi,

B. Roy Frieden, Bernhard Gittenberger, Galina Glazko, Martin Grabner, Earl

Glynn, Peter Grassberger, Peter Hamilton, Kateˇrina Hlav´aˇckov´a-Schindler, Lucas

R. Hope, Jinjie Huang, Robert Jenssen, Attila Kert´esz-Farkas, Andr´as Kocsor,

v

�

vi

Preface

Elena Konstantinova, Kevin B. Korb, Alexander Kraskov, Tyll Kr¨uger, Ming Li, J.F.

McCann, Alexander Mehler, Marco M¨oller, Abbe Mowshowitz, Max M¨uhlh¨auser,

Markus M¨uller, Noboru Murata, Arcady Mushegian, Erik P. Nyberg, Paulo Eduardo

Oliveira, Hyeyoung Park, Judea Pearl, Daniel Polani, S´andor Pongor, William

Reeves, Jorma Rissanen, Panxiang Rong, Reuven Rubinstein, Rainer Siegmund

Schulze, Heinz Georg Schuster, Helmut Schwegler, Chris Seidel, Fred Sobik, Ray

J. Solomonoff, Doru Stefanescu, Thomas Stoll, John Storey, Milan Studeny, Ulrich

Tamm, Naftali Tishby, Paul M.B. Vit´anyi, Jos´e Miguel Urbano, Kazuho Watanabe,

Dongxiao Zhu, Vadim Zverovich and apologize to all those who have been missed

inadvertently. We would like also to thank our editor Amy Brais from Springer who

has always been available and helpful. Last but not least we would like to thank our

families for support and encouragement during all the time of preparing the book

for publication.

We hope this book will help to spread the enthusiasm we have for this field and

inspire people to tackle their own practical or theoretical research problems.

Belfast and Coimbra

June 2008

Frank Emmert-Streib

Matthias Dehmer

�

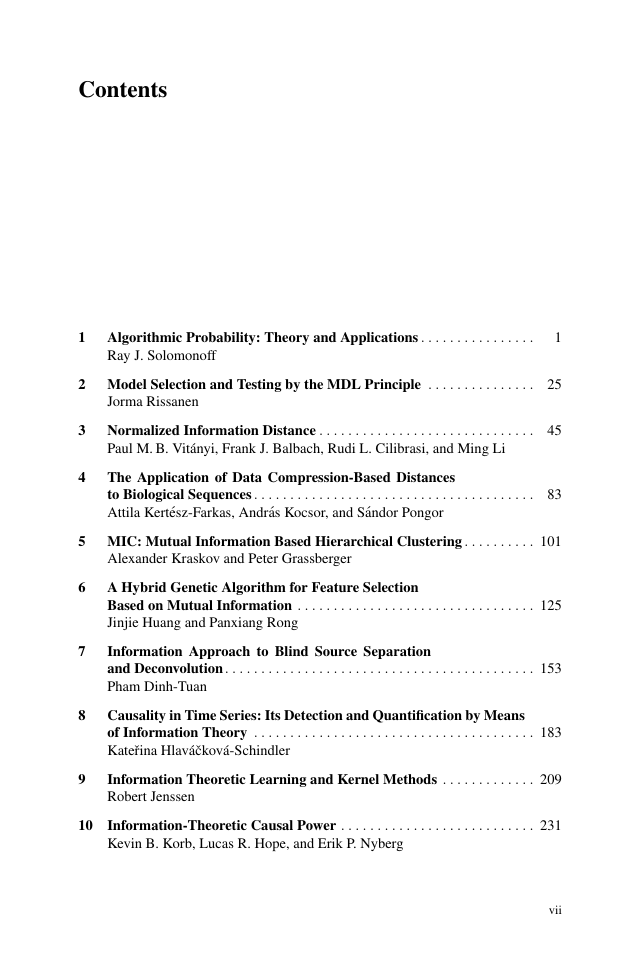

Contents

1

Algorithmic Probability: Theory and Applications . . . . . . . . . . . . . . . .

Ray J. Solomonoff

1

2 Model Selection and Testing by the MDL Principle . . . . . . . . . . . . . . . 25

Jorma Rissanen

3

4

Normalized Information Distance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

Paul M. B. Vit´anyi, Frank J. Balbach, Rudi L. Cilibrasi, and Ming Li

The Application of Data Compression-Based Distances

to Biological Sequences . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

Attila Kert´esz-Farkas, Andr´as Kocsor, and S´andor Pongor

5 MIC: Mutual Information Based Hierarchical Clustering . . . . . . . . . . 101

Alexander Kraskov and Peter Grassberger

6

7

8

9

A Hybrid Genetic Algorithm for Feature Selection

Based on Mutual Information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 125

Jinjie Huang and Panxiang Rong

Information Approach to Blind Source Separation

and Deconvolution . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153

Pham Dinh-Tuan

Causality in Time Series: Its Detection and Quantification by Means

of Information Theory . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183

Kateˇrina Hlav´aˇckov´a-Schindler

Information Theoretic Learning and Kernel Methods . . . . . . . . . . . . . 209

Robert Jenssen

10

Information-Theoretic Causal Power . . . . . . . . . . . . . . . . . . . . . . . . . . . 231

Kevin B. Korb, Lucas R. Hope, and Erik P. Nyberg

vii

�

viii

11

Information Flows in Complex Networks . . . . . . . . . . . . . . . . . . . . . . . . 267

Jo˜ao Barros

Contents

12 Models of Information Processing in the Sensorimotor Loop . . . . . . . 289

Daniel Polani and Marco M¨oller

13

Information Divergence Geometry and the Application to Statistical

Machine Learning . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 309

Shinto Eguchi

14 Model Selection and Information Criterion . . . . . . . . . . . . . . . . . . . . . . 333

Noboru Murata and Hyeyoung Park

15 Extreme Physical Information as a Principle of Universal Stability . . 355

B. Roy Frieden

16 Entropy and Cloning Methods for Combinatorial Optimization,

Sampling and Counting Using the Gibbs Sampler . . . . . . . . . . . . . . . . 385

Reuven Rubinstein

Index . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 435

�

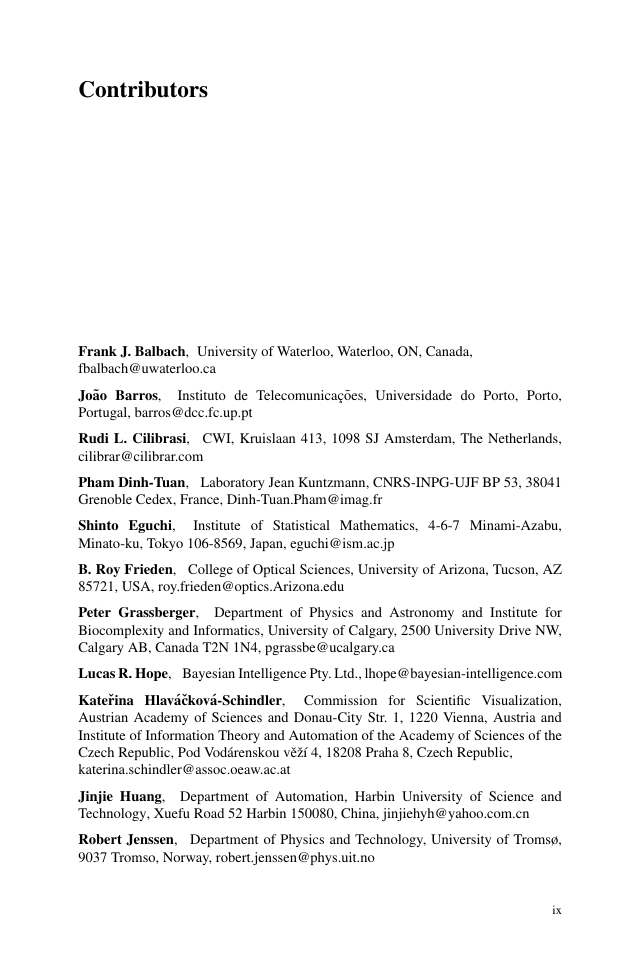

Contributors

Institute of Statistical Mathematics, 4-6-7 Minami-Azabu,

Instituto de Telecomunicac¸ ˜oes, Universidade do Porto, Porto,

Frank J. Balbach, University of Waterloo, Waterloo, ON, Canada,

fbalbach@uwaterloo.ca

Jo˜ao Barros,

Portugal, barros@dcc.fc.up.pt

Rudi L. Cilibrasi, CWI, Kruislaan 413, 1098 SJ Amsterdam, The Netherlands,

cilibrar@cilibrar.com

Pham Dinh-Tuan, Laboratory Jean Kuntzmann, CNRS-INPG-UJF BP 53, 38041

Grenoble Cedex, France, Dinh-Tuan.Pham@imag.fr

Shinto Eguchi,

Minato-ku, Tokyo 106-8569, Japan, eguchi@ism.ac.jp

B. Roy Frieden, College of Optical Sciences, University of Arizona, Tucson, AZ

85721, USA, roy.frieden@optics.Arizona.edu

Peter Grassberger, Department of Physics and Astronomy and Institute for

Biocomplexity and Informatics, University of Calgary, 2500 University Drive NW,

Calgary AB, Canada T2N 1N4, pgrassbe@ucalgary.ca

Lucas R. Hope, Bayesian Intelligence Pty. Ltd., lhope@bayesian-intelligence.com

Kateˇrina Hlav´aˇckov´a-Schindler, Commission for Scientific Visualization,

Austrian Academy of Sciences and Donau-City Str. 1, 1220 Vienna, Austria and

Institute of Information Theory and Automation of the Academy of Sciences of the

Czech Republic, Pod Vod´arenskou vˇeˇz´ı 4, 18208 Praha 8, Czech Republic,

katerina.schindler@assoc.oeaw.ac.at

Jinjie Huang, Department of Automation, Harbin University of Science and

Technology, Xuefu Road 52 Harbin 150080, China, jinjiehyh@yahoo.com.cn

Robert Jenssen, Department of Physics and Technology, University of Tromsø,

9037 Tromso, Norway, robert.jenssen@phys.uit.no

ix

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc