Recursive Function Approximat ion with Applications to Wavelet

Orthogonal Matching Pursuit:

Decomposition

Y. C. PATI

Information Systems Laboratory

Dept. of Electrical Engineering

Stanford University, Stanford, CA 94305

R. REZAIIFAR AND P. s. KRISHNAPRASAD

Institute for Systems Research

Dept. of Electrical Engineering

University of Maryland, College Park, MD 20742

Abstract

In this paper we describe a recursive algorithm to

compute representations of functions with respect to

nonorthogonal and possibly overcomplete dictionaries

of elementary building blocks e.g.

affine (wavelet)

frames. We propose a modification to the Matching

Pursuit algorithm of Mallat and Zhang (1992) that

maintains full backward orthogonality of the residual

(error) at every step and thereby leads to improved

convergence. We refer to this modified algorithm as

Orthogonal Matching Pursuit (OMP). It is shown that

all additional computation required for the OMP al-

gorithm may be performed recursively.

1 Introduction and Background

Given a collection of vectors D = {xi} in a Hilbert

space R, let us define

V=Span{z,},

and W = V '

(inR).

We shall refer to D as a dictionary, and will assume

the vectors xn, are normalized (llznII = 1). In [3] Mal-

lat and Zhang proposed an iterative algorithm that

they termed Matching Pursuit (MP) to construct rep-

resentations of the form

Pvf = C a n z n ,

n

(1)

where PV is the orthogonal projection operator onto

V. Each iteration of the MP algorithm results in an

intermediate representation of the form

where fk is the current approximation, and Rk f the

current residual (error). Using initial values of Ro f =

f , fo = 0, and k = 1 , the M P algorithm is comprised

of the following steps,

(I) Compute the inner-products {(Rkf, Zn)},.

(11) Find nktl such that

I(Rkf,

' n k + I ) l 2 asYp I(Rkf, zj)I 1

where 0 < a 5 1.

(111) Set,

(IV) Increment k, (k t IC + l), and repeat steps (1)-

(IV) until some convergence criterion has been

satisfied.

The proof of convergence [3] of MP relies essentially on

= 0. This orthogonality

the fact that (Rk+lf, z ~ ~ + ~ )

of the residual to the last vector selected leads to the

following "energy conservation" equation.

IIRkfl12 = llRk+lf112 + I(Rkf12nk+1)12'

(2)

It has been noted that the MP algorithm may be de-

rived as a special case of a technique known as Pro-

jection Pursuit (c.f, [2]) in the statistics literature.

A shortcoming of the Matching Pursuit algorithm

in its originally proposed form is that although asymp

totic convergence is guaranteed, the resulting approxi-

mation after any finite number of iterations will in gen-

eral be suboptimal in the following sense. Let N < 00,

1058-6393193 $03.00 Q 1993 IEEE

40

�

gives the optimal approximation with respect to the

selected subset of the dictionary. This is achieved by

ensuring full backward orthogonality of the error i.e.

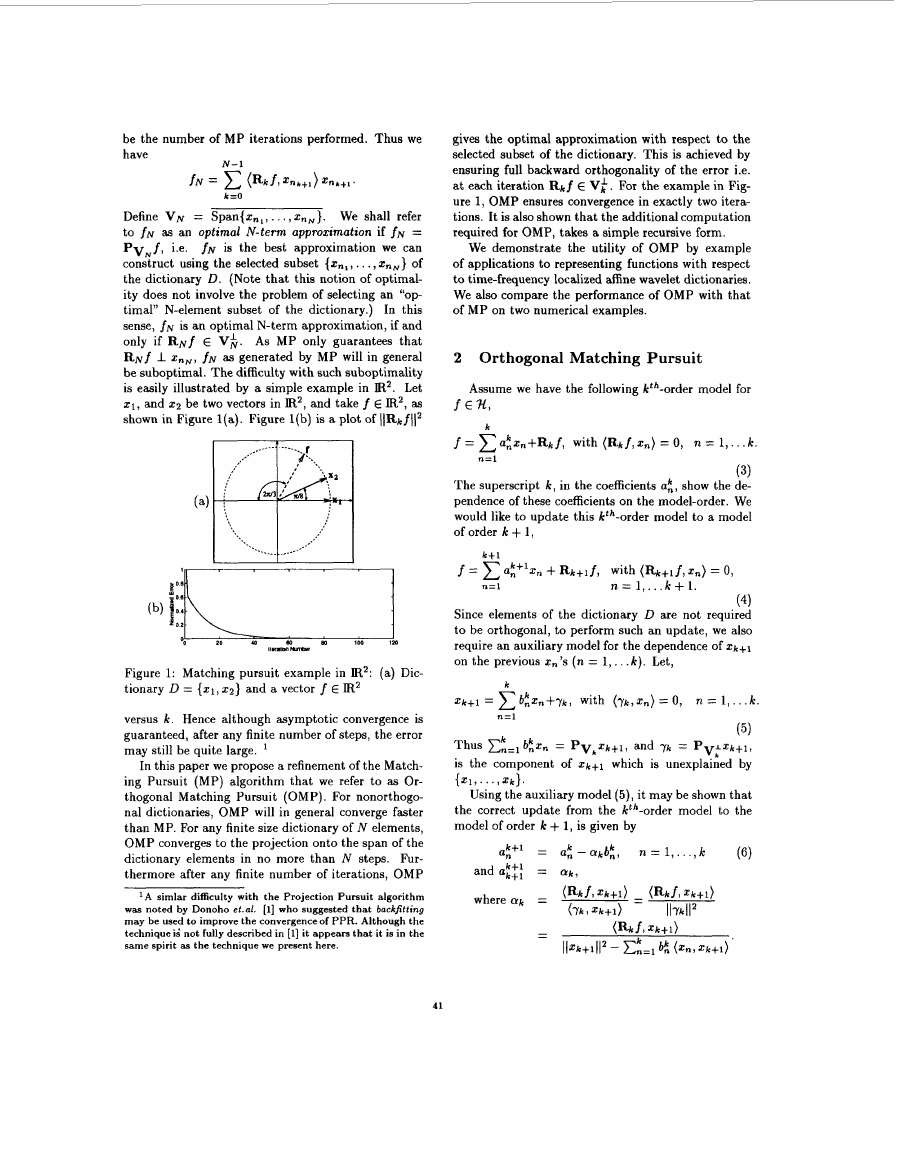

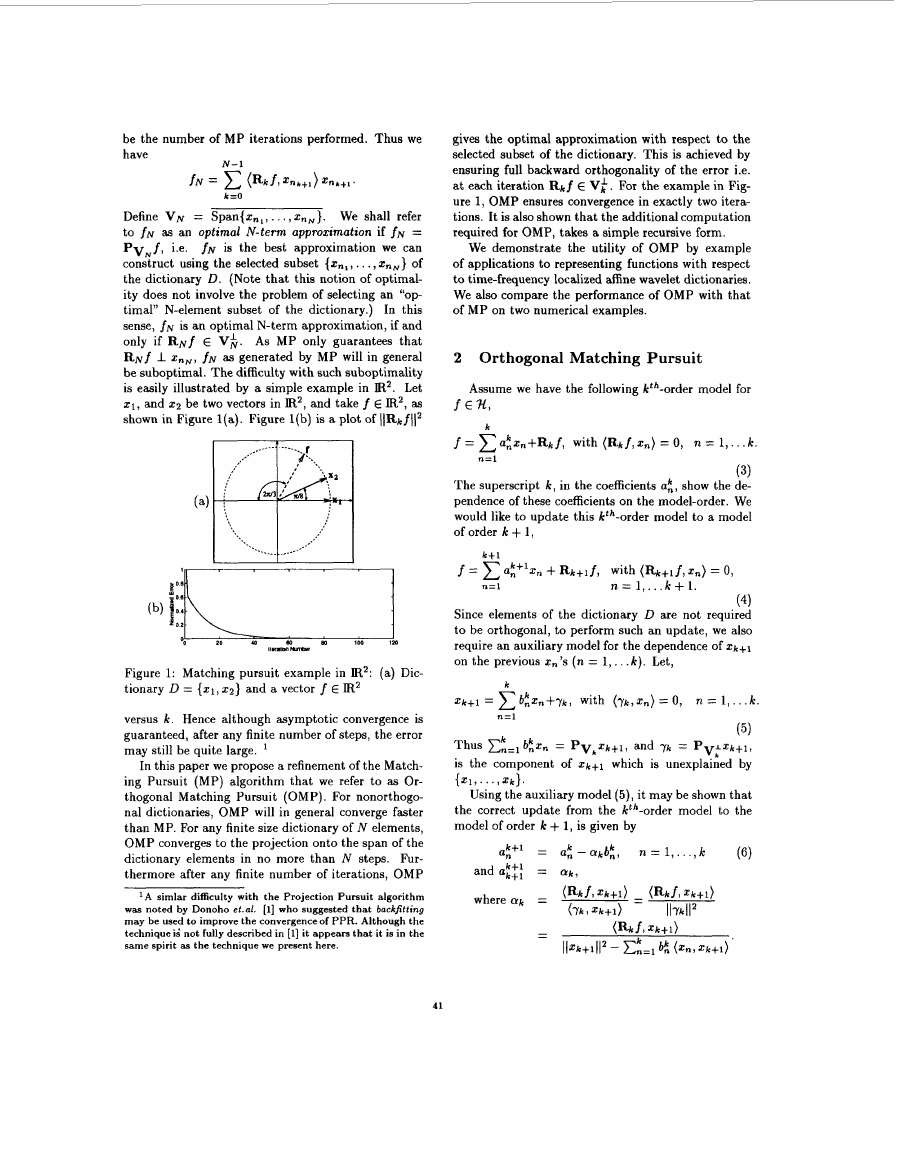

at each iteration Rkf E Vi. For the example in Fig-

ure 1, OMP ensures convergence in exactly two itera-

tions. It is also shown that the additional computation

required for OMP, takes a simple recursive form.

We demonstrate the utility of OMP by example

of applications to representing functions with respect

to time-frequency localized affine wavelet dictionaries.

We also compare the performance of OMP with that

of MP on two numerical examples.

2 Orthogonal Matching Pursuit

Assume we have the following kth-order model for

f EN,

k

f = x Q a f n + R k f , with (Rk f , f n ) = 0, 71 = 1,. . .k.

n=l

(3)

The superscript k, in the coefficients a t , show the de-

pendence of these coefficients on the model-order. We

would like to update this kth-order model to a model

of order k + 1,

f = Qk+lz, -I- & + i f , with (&+if, fn) = 0,

n = l , ... k + l .

k+l

n = l

(4)

Since elements of the dictionary D are not required

to be orthogonal, to perform such an update, we also

require an auxiliary model for the dependence of Xk+l

on the previous t,’~ ( n = 1,. . . k). Let,

k

fk+1 =

b;fn+7k, with (yk,xn) = 0,

72 = 1,. . .k.

n = l

(5)

Thus ~ “ , l b ~ z , = PVkzk+l, and 7 k = p vk L f k + l ,

is the component of z k + l which is unexplained by

( 2 1 , . . . , f k } .

Using the auxiliary model (5), it may be shown that

the correct update from the kth-order model to the

model of order k + 1, is given by

be the number of MP iterations performed. Thus we

have

N-1

f N =

(Rkf, f n k + , )

k=O

Define VN = Span{znl,.. . , xnN}. We shall refer

to fN as an optimal N-term approximation if fN =

PVNf’ i.e.

fN is the best approximation we can

construct using the selected subset {2n1, . . . , Z n N } of

the dictionary D. (Note that this notion of optimal-

ity does not involve the problem of selecting an “op

timal” N-element subset of the dictionary.) In this

sense, f~ is an optimal N-term approximation, if and

only if RNf E V;.

As MP only guarantees that

RN f 1 f n N , fN as generated by MP will in general

be suboptimal. The difficulty with such suboptimality

is easily illustrated by a simple example in IR2. Let

z1, and 2 2 be two vectors in IR2, and take f E Et2, as

shown in Figure l(a). Figure l(b) is a plot of llRkfllz

Figure 1: Matching pursuit example in IR2: (a) Dic-

tionary D = ( 1 1 , Q} and a vector f E Et2

versus k. Hence although asymptotic convergence is

guaranteed, after any finite number of steps, the error

may still be quite large.

In this paper we propose a refinement of the Match-

ing Pursuit (MP) algorithm that we refer to as Or-

thogonal Matching Pursuit (OMP). For nonorthogo-

nal dictionaries, OMP will in general converge faster

than MP. For any finite size dictionary of N elements,

OMP converges to the projection onto the span of the

dictionary elements in no more than N steps. Fur-

thermore after any finite number of iterations, OMP

A simlar difficulty with the Projection Pursuit algorithm

was noted by Donoho et.ol. [l] who suggested that bockfitting

may be used to improve the convergenceof PPR. Although the

techniqueii not fully described in [l] it appears that it is in the

same spirit as the technique we present here.

41

�

It also follows that the residual &+If

R k f = R k + l f -k O k y k , and

satisfies,

Theorem 2.1 For f E 3, let R k f be the residuals

genemted by OMP. Then

2.1 The OMP Algorithm

The results of the previous section may be used to

construct the following algorithm that we will refer to

as Orthogonal Matching Pursuit (OMP).

Initialization:

fo = 0, Rof=f, Do = { 1

20 = 0, a: = 0,

k = O

:III) If I ( R k f , z n r + l ) l < 6, (6 > 0) then stop.

:IV) Reorder the dictionary D, by applying the per-

mutation k + 1 C) n k + l .

(V) Compute {bk,}k=l, such that,

z k + 1 = zn=l bkzn -k yk

k

and (Cyk,Z,) = 0,

= Qk = llykll-2 ( R k f , Z k + l ) ,

n = I , . . .,k.

n = 1,. ..,k,

[VI) Set,

Oi+l = k

k

0, - Q k b n ,

and update the model,

k + l

a

~

"

n = l

fk+l = ~

R k + l f = f - f k + l

D k + l = D k U { z k + l } .

VII) Set k t k + 1, and repeat (1)-(VU).

~

n

2.2 Some Properties of OMP

As in the case of MP, convergence of OMP relies

on an energy conservation equation that now takes

the form (7). The following theorem summarizes the

convergence properties of OMP.

(il) fN = PvN f, N = 0, I, 2,. . ..

Proof: The proof of convergence parallels the proof

of Theorem 1 in [3]. The proof of the the second prop

erty follows immediately from the orthogonality con-

ditions of Equation (3).

Remarks:

The key difference between MP and OMP lies in P r o p

erty (iii) of Theorem 2.1. Property (iii) implies that

at the N t h step we have the best approximation we

can get using the N vectors we have selected from

the dictionary. Therefore in the case of finite dictio-

naries of size M, OMP converges in no more than M

iterations to the projection of f onto the span of the

dictionary elements. As mentioned earlier, Matching

Pursuit does not possess this property.

2.3 Some Computational Details

in

As

the case of MP,

inner products

{ ( R k f, zcj)} may be computed recursively. For OMP

we may express these recursions implicitly in the for-

mula

the

( R k f y z j ) = ( f - f k , z j ) = (f,zj) -

k

(%,zj).

n = l

(8)

The only additional computation required for OMP,

arises in determining the bi's of the auxiliary model

(5). To compute the hi's we rewrite the normal equa-

tions associated with ( 5 ) as a system of k linear equa-

tions,

v k = A k b k ,

(9)

where

and

42

�

Note that the positive constant 6 used in Step (111)

of OMP guarantees nonsingularity of the matrix Ak,

hence we may write

bk = Ak'Vk.

(10)

However, since Ak+l may be written as

(where * denotes conjugate transpose) it may be

shown using the block matrix inversion formula that

100,

I

,

,

,

,

,

,

,

,

1

where P = 1/(1 - vibk). Hence

and therefore

bktl, may be computed recursively using A;', and

bk from the previous step.

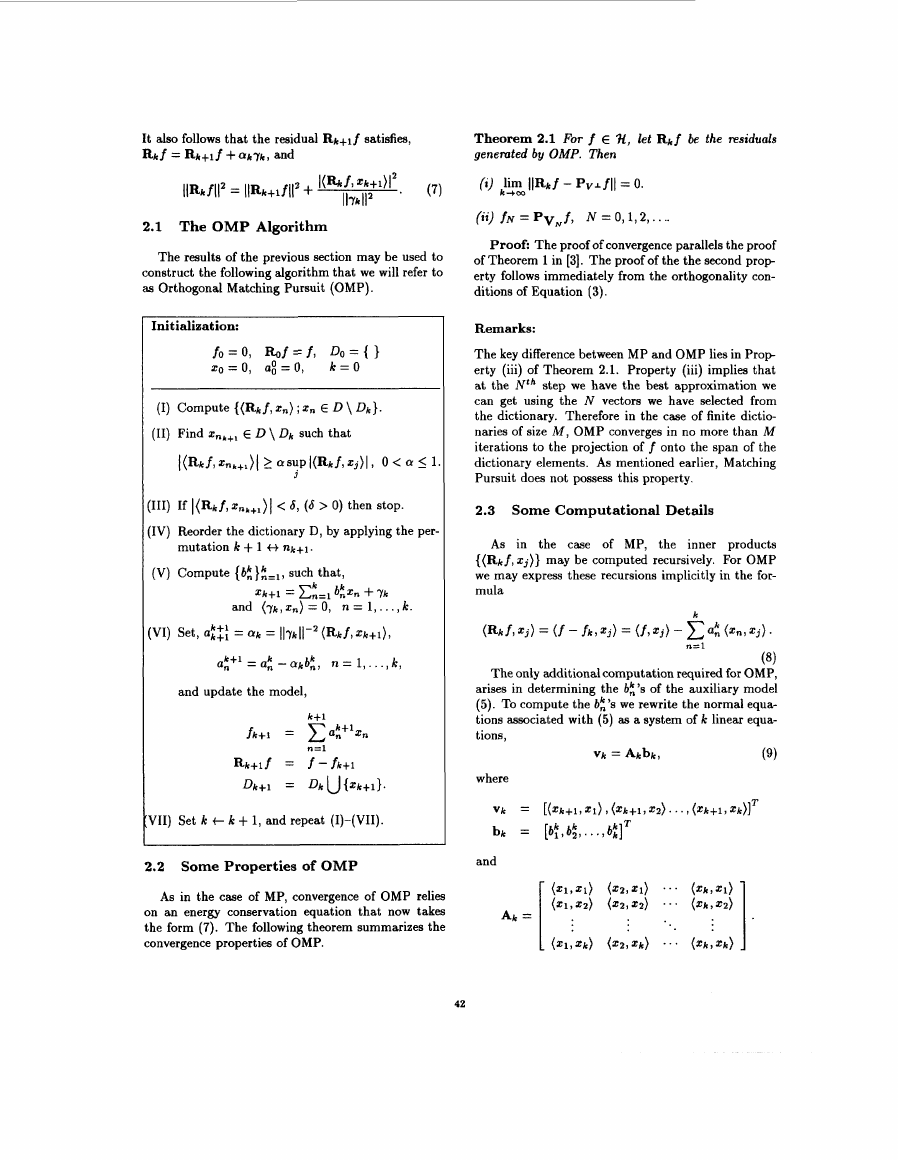

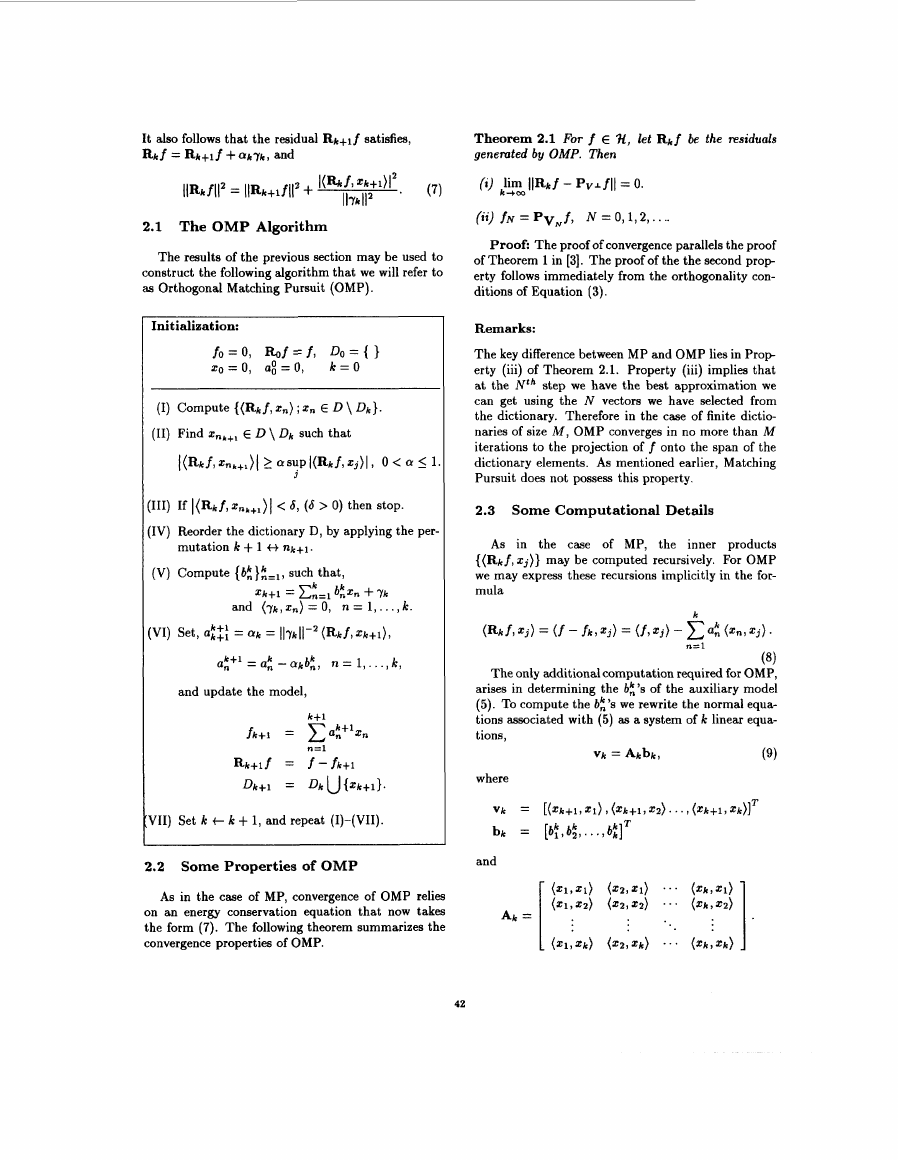

Figure 2: Example I : (a) Original signal f, with OMP

approximation superimposed, (b) Squared L2 norm of

residual Rkf versus iteration number k, for both OMP

(solid line) and MP (dashed line).

3 Examples

In the following examples we consider represen-

tations with repect to an affine wavelet frame con-

structed from dilates and translates of the second

derivate of a Gaussian, i.e. D = {$m,", m,n E Z}

where,

$m,"(C) = 242$(2% - n),

and the analyzing wavelet $J

is given by,

Note that for wavelet dictionaries, the initial set of in-

ner products {(f, $m,n)}, are readily computed by one

convolution followed by sampling at each dilation level

m. The dictionary used in these examples consists of

a total of 351 vectors.

In our first example, both OMP and MP were a p

plied to the signal shown in Figure 2(a). We see from

Figure 2(b) that OMP clearly converges in far fewer

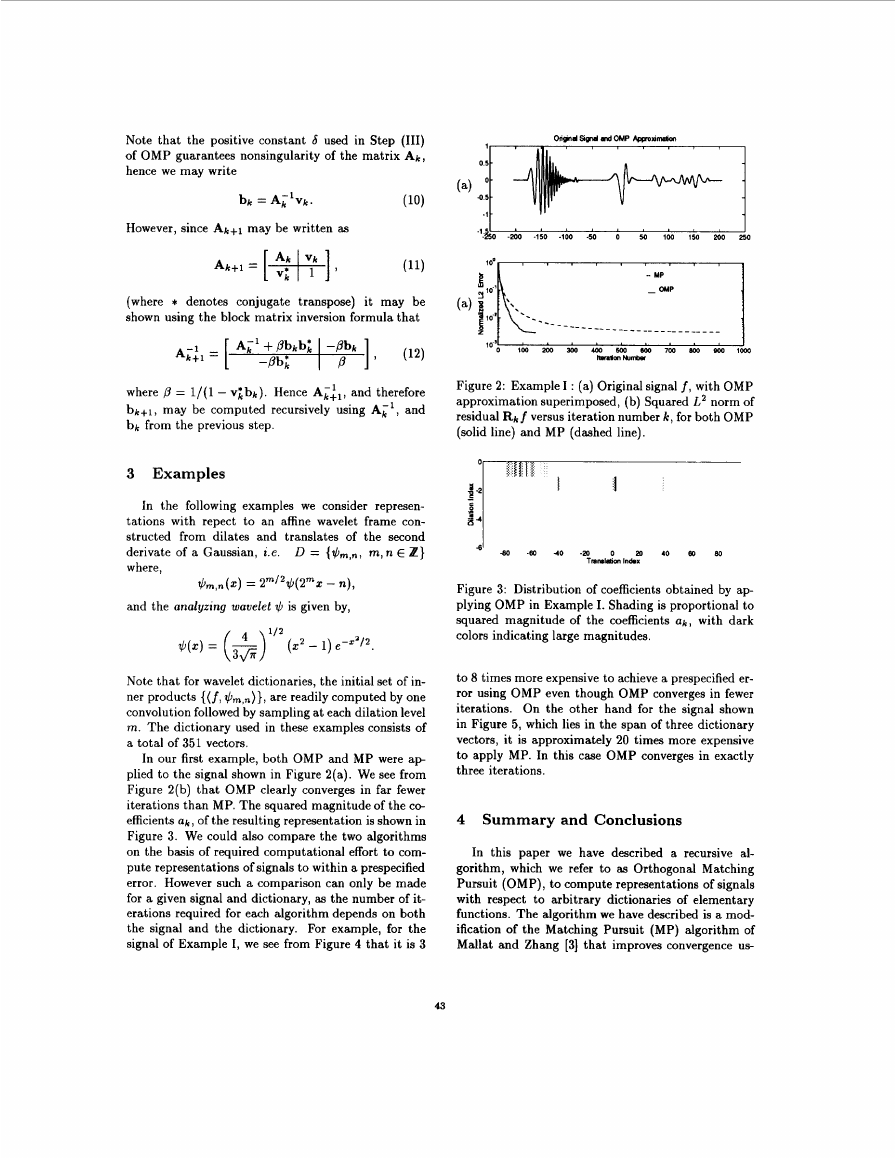

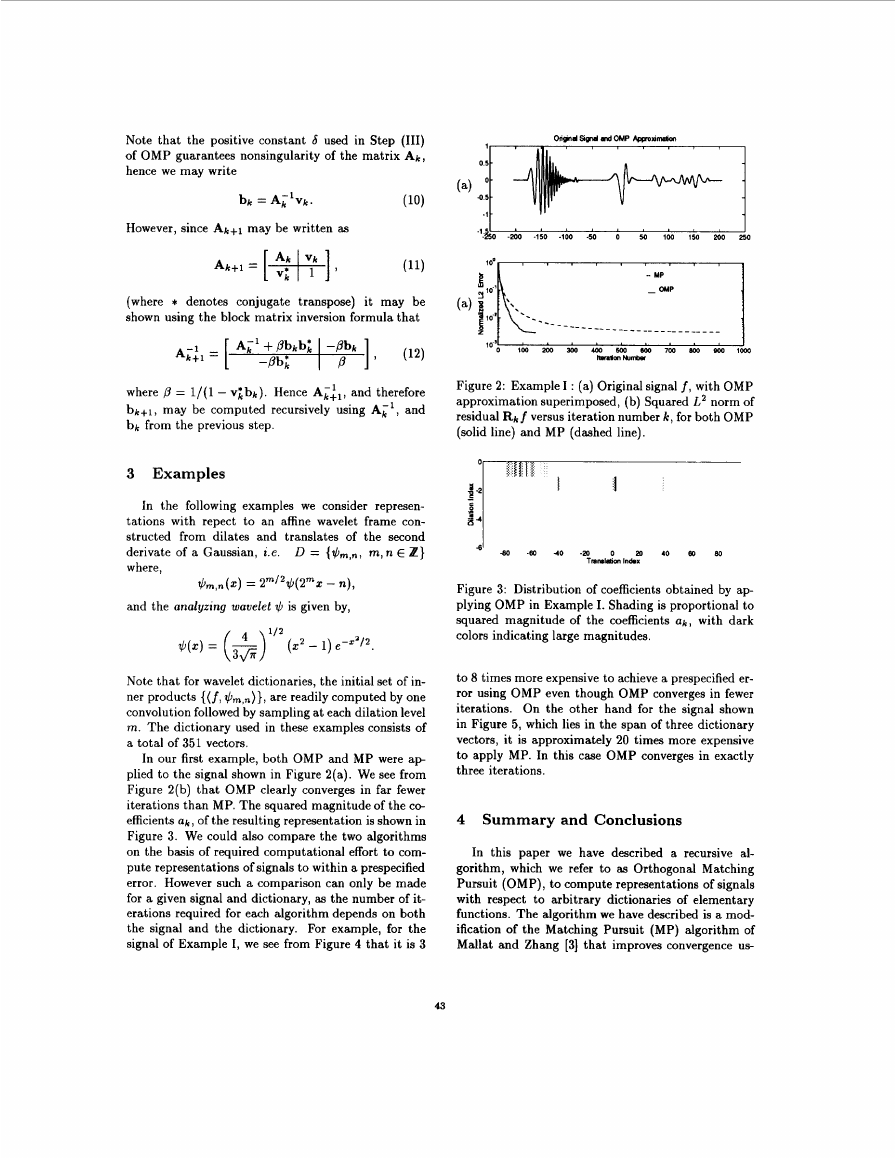

iterations than MP. The squared magnitude of the co-

efficients o k , of the resulting representation is shown in

Figure 3. We could also compare the two algorithms

on the basis of required computational effort to com-

pute representations of signals to within a prespecified

error. However such a comparison can only be made

for a given signal and dictionary, as the number of it-

erations required for each algorithm depends on both

the signal and the dictionary. For example, for the

signal of Example I, we see from Figure 4 that it is 3

43

-80

-60 4 0 -P 0

a0

Tnnl.icn Indm

40

80

80

Figure 3: Distribution of coefficients obtained by ap-

plying OMP in Example I. Shading is proportional to

squared magnitude of the coefficients ah, with dark

colors indicating large magnitudes.

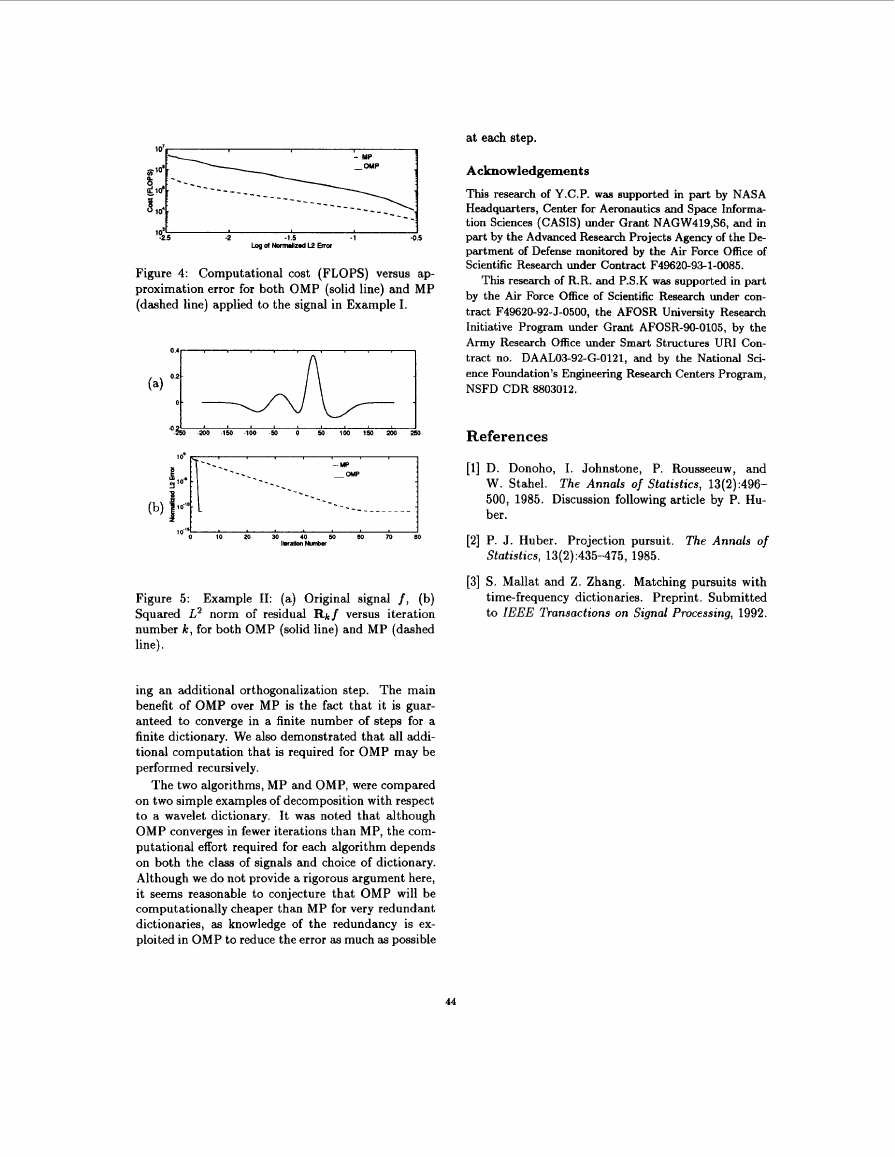

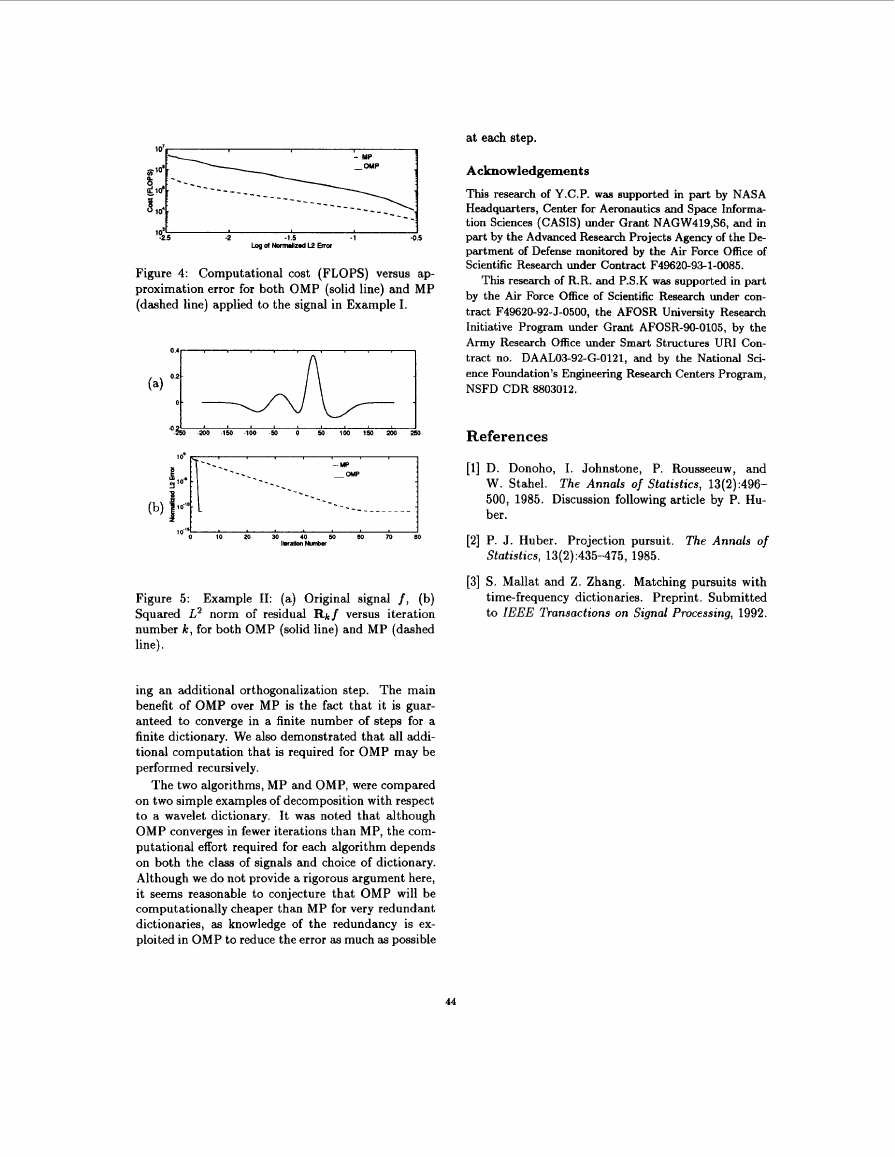

to 8 times more expensive to achieve a prespecified er-

ror using OMP even though OMP converges in fewer

iterations. On the other hand for the signal shown

in Figure 5, which lies in the span of three dictionary

vectors, it is approximately 20 times more expensive

to apply MP. In this case OMP converges in exactly

three iterations.

4 Summary and Conclusions

In this paper we have described a recursive al-

gorithm, which we refer to as Orthogonal Matching

Pursuit (OMP) , to compute representations of signals

with respect to arbitrary dictionaries of elementary

functions. The algorithm we have described is a mod-

ification of the Matching Pursuit (MP) algorithm of

Mallat and Zhang [3] that improves convergence us-

�

at each step.

Acknowledgements

This research of Y.C.P. was supported in part by NASA

Headquarters, Center for Aeronautics and Space Informa-

tion Sciences (CASK) under Grant NAGW419,S6, and in

part by the Advanced Research Projects Agency of the De-

partment of Defense monitored by the Air Force Office of

Scientific Research under Contract F49620-93-1-0085.

This research of R.R. and P.S.K was supported in part

by the Air Force Office of Scientific Research under con-

tract F49620-92-J-0500, the AFOSR University Research

Initiative Program under Grant AFOSR-90-0105, by the

Army Research Office under Smart Structures URI Con-

tract no. DAAL03-92-G-0121, and by the National Sci-

ence Foundation's Engineering Research Centers Program,

NSFD CDR 8803012.

References

[l] D. Donoho, I. Johnstone, P. Rousseeuw, and

W. Stahel. The Annals of Statistics, 13(2):496-

500, 1985. Discussion following article by P. Hu-

ber.

[2] P. J . Huber. Projection pursuit. The Annals of

Statistics, 13(2):435-475, 1985.

[3] S. Mallat and Z. Zhang. Matching pursuits with

time-frequency dictionaries. Preprint. Submitted

to IEEE Transactions on Signal Processing, 1992.

I 0'

- HP

1

- - - - I

Figure 4: Computational cost (FLOPS) versus ap-

proximation error for both OMP (solid line) and MP

(dashed line) applied to the signal in Example I.

Figure 5: Example 11: (a) Original signal f , (b)

Squared L2 norm of residual Rkf versus iteration

number IC, for both OMP (solid line) and MP (dashed

line).

ing an additional orthogonalization step. The main

benefit of OMP over MP is the fact that it is guar-

anteed to converge in a finite number of steps for a

finite dictionary. We also demonstrated that all addi-

tional computation that is required for OMP may be

performed recursively.

The two algorithms, MP and OMP, were compared

on two simple examples of decomposition with respect

to a wavelet dictionary. It was noted that although

OMP converges in fewer iterations than MP, the com-

putational effort required for each algorithm depends

on both the class of signals and choice of dictionary.

Although we do not provide a rigorous argument here,

it seems reasonable to conjecture that OMP will be

computationally cheaper than MP for very redundant

dictionaries, as knowledge of the redundancy is ex-

ploited in OMP to reduce the error as much as possible

44

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc