Copyright

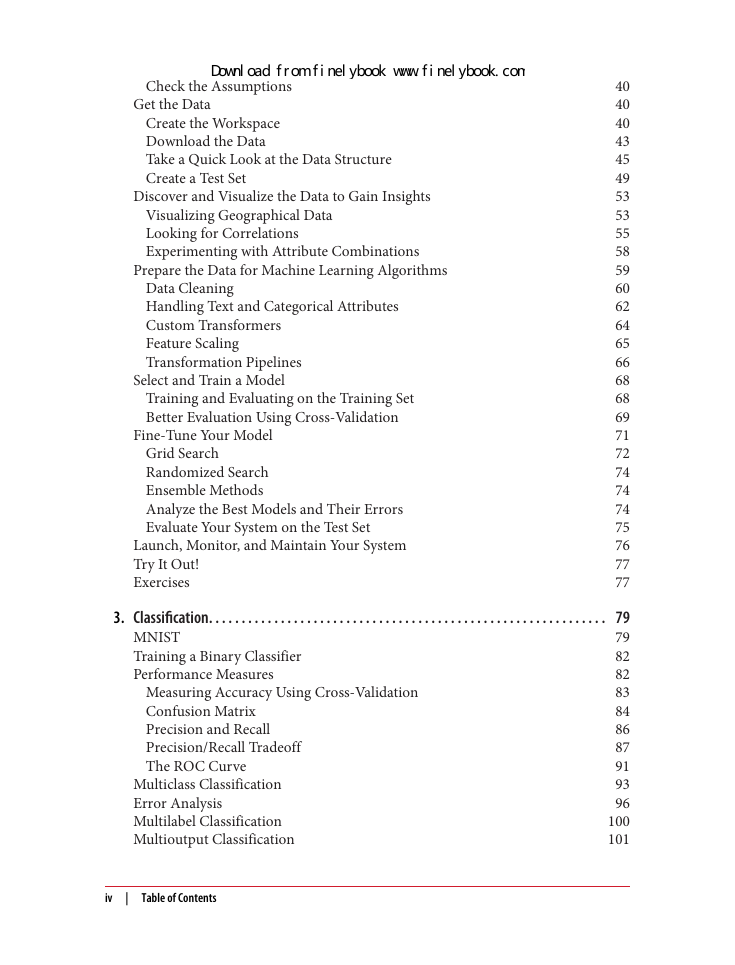

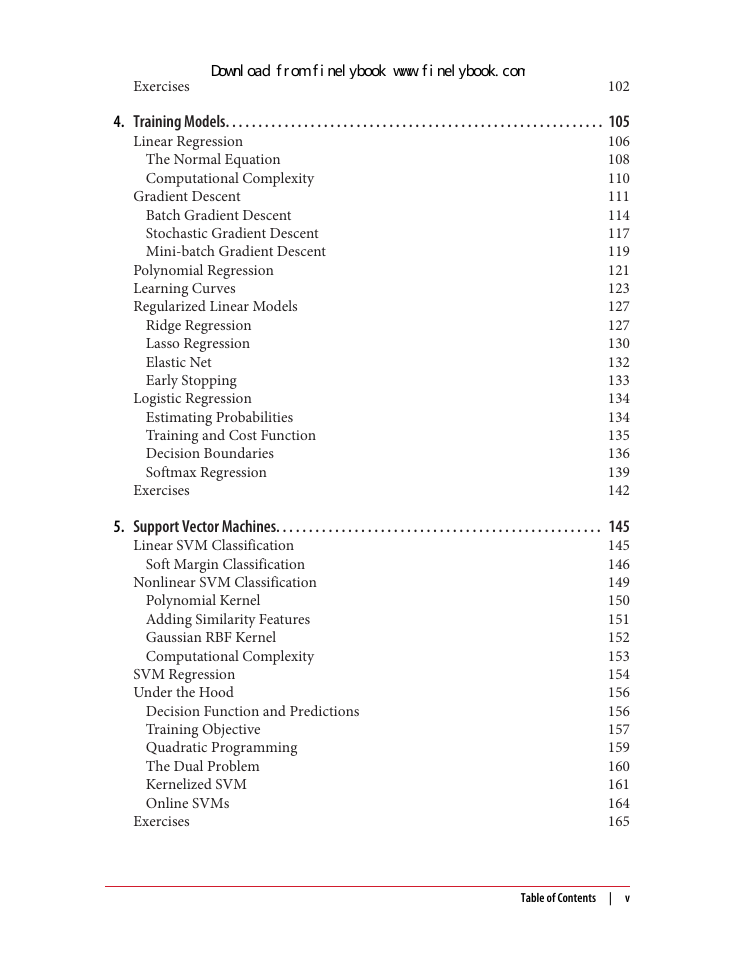

Table of Contents

Preface

The Machine Learning Tsunami

Machine Learning in Your Projects

Objective and Approach

Prerequisites

Roadmap

Other Resources

Conventions Used in This Book

Using Code Examples

O’Reilly Safari

How to Contact Us

Acknowledgments

Part I. The Fundamentals of Machine Learning

Chapter 1. The Machine Learning Landscape

What Is Machine Learning?

Why Use Machine Learning?

Types of Machine Learning Systems

Supervised/Unsupervised Learning

Batch and Online Learning

Instance-Based Versus Model-Based Learning

Main Challenges of Machine Learning

Insufficient Quantity of Training Data

Nonrepresentative Training Data

Poor-Quality Data

Irrelevant Features

Overfitting the Training Data

Underfitting the Training Data

Stepping Back

Testing and Validating

Exercises

Chapter 2. End-to-End Machine Learning Project

Working with Real Data

Look at the Big Picture

Frame the Problem

Select a Performance Measure

Check the Assumptions

Get the Data

Create the Workspace

Download the Data

Take a Quick Look at the Data Structure

Create a Test Set

Discover and Visualize the Data to Gain Insights

Visualizing Geographical Data

Looking for Correlations

Experimenting with Attribute Combinations

Prepare the Data for Machine Learning Algorithms

Data Cleaning

Handling Text and Categorical Attributes

Custom Transformers

Feature Scaling

Transformation Pipelines

Select and Train a Model

Training and Evaluating on the Training Set

Better Evaluation Using Cross-Validation

Fine-Tune Your Model

Grid Search

Randomized Search

Ensemble Methods

Analyze the Best Models and Their Errors

Evaluate Your System on the Test Set

Launch, Monitor, and Maintain Your System

Try It Out!

Exercises

Chapter 3. Classification

MNIST

Training a Binary Classifier

Performance Measures

Measuring Accuracy Using Cross-Validation

Confusion Matrix

Precision and Recall

Precision/Recall Tradeoff

The ROC Curve

Multiclass Classification

Error Analysis

Multilabel Classification

Multioutput Classification

Exercises

Chapter 4. Training Models

Linear Regression

The Normal Equation

Computational Complexity

Gradient Descent

Batch Gradient Descent

Stochastic Gradient Descent

Mini-batch Gradient Descent

Polynomial Regression

Learning Curves

Regularized Linear Models

Ridge Regression

Lasso Regression

Elastic Net

Early Stopping

Logistic Regression

Estimating Probabilities

Training and Cost Function

Decision Boundaries

Softmax Regression

Exercises

Chapter 5. Support Vector Machines

Linear SVM Classification

Soft Margin Classification

Nonlinear SVM Classification

Polynomial Kernel

Adding Similarity Features

Gaussian RBF Kernel

Computational Complexity

SVM Regression

Under the Hood

Decision Function and Predictions

Training Objective

Quadratic Programming

The Dual Problem

Kernelized SVM

Online SVMs

Exercises

Chapter 6. Decision Trees

Training and Visualizing a Decision Tree

Making Predictions

Estimating Class Probabilities

The CART Training Algorithm

Computational Complexity

Gini Impurity or Entropy?

Regularization Hyperparameters

Regression

Instability

Exercises

Chapter 7. Ensemble Learning and Random Forests

Voting Classifiers

Bagging and Pasting

Bagging and Pasting in Scikit-Learn

Out-of-Bag Evaluation

Random Patches and Random Subspaces

Random Forests

Extra-Trees

Feature Importance

Boosting

AdaBoost

Gradient Boosting

Stacking

Exercises

Chapter 8. Dimensionality Reduction

The Curse of Dimensionality

Main Approaches for Dimensionality Reduction

Projection

Manifold Learning

PCA

Preserving the Variance

Principal Components

Projecting Down to d Dimensions

Using Scikit-Learn

Explained Variance Ratio

Choosing the Right Number of Dimensions

PCA for Compression

Incremental PCA

Randomized PCA

Kernel PCA

Selecting a Kernel and Tuning Hyperparameters

LLE

Other Dimensionality Reduction Techniques

Exercises

Part II. Neural Networks and Deep Learning

Chapter 9. Up and Running with TensorFlow

Installation

Creating Your First Graph and Running It in a Session

Managing Graphs

Lifecycle of a Node Value

Linear Regression with TensorFlow

Implementing Gradient Descent

Manually Computing the Gradients

Using autodiff

Using an Optimizer

Feeding Data to the Training Algorithm

Saving and Restoring Models

Visualizing the Graph and Training Curves Using TensorBoard

Name Scopes

Modularity

Sharing Variables

Exercises

Chapter 10. Introduction to Artificial Neural Networks

From Biological to Artificial Neurons

Biological Neurons

Logical Computations with Neurons

The Perceptron

Multi-Layer Perceptron and Backpropagation

Training an MLP with TensorFlow’s High-Level API

Training a DNN Using Plain TensorFlow

Construction Phase

Execution Phase

Using the Neural Network

Fine-Tuning Neural Network Hyperparameters

Number of Hidden Layers

Number of Neurons per Hidden Layer

Activation Functions

Exercises

Chapter 11. Training Deep Neural Nets

Vanishing/Exploding Gradients Problems

Xavier and He Initialization

Nonsaturating Activation Functions

Batch Normalization

Gradient Clipping

Reusing Pretrained Layers

Reusing a TensorFlow Model

Reusing Models from Other Frameworks

Freezing the Lower Layers

Caching the Frozen Layers

Tweaking, Dropping, or Replacing the Upper Layers

Model Zoos

Unsupervised Pretraining

Pretraining on an Auxiliary Task

Faster Optimizers

Momentum Optimization

Nesterov Accelerated Gradient

AdaGrad

RMSProp

Adam Optimization

Learning Rate Scheduling

Avoiding Overfitting Through Regularization

Early Stopping

ℓ1 and ℓ2 Regularization

Dropout

Max-Norm Regularization

Data Augmentation

Practical Guidelines

Exercises

Chapter 12. Distributing TensorFlow Across Devices and Servers

Multiple Devices on a Single Machine

Installation

Managing the GPU RAM

Placing Operations on Devices

Parallel Execution

Control Dependencies

Multiple Devices Across Multiple Servers

Opening a Session

The Master and Worker Services

Pinning Operations Across Tasks

Sharding Variables Across Multiple Parameter Servers

Sharing State Across Sessions Using Resource Containers

Asynchronous Communication Using TensorFlow Queues

Loading Data Directly from the Graph

Parallelizing Neural Networks on a TensorFlow Cluster

One Neural Network per Device

In-Graph Versus Between-Graph Replication

Model Parallelism

Data Parallelism

Exercises

Chapter 13. Convolutional Neural Networks

The Architecture of the Visual Cortex

Convolutional Layer

Filters

Stacking Multiple Feature Maps

TensorFlow Implementation

Memory Requirements

Pooling Layer

CNN Architectures

LeNet-5

AlexNet

GoogLeNet

ResNet

Exercises

Chapter 14. Recurrent Neural Networks

Recurrent Neurons

Memory Cells

Input and Output Sequences

Basic RNNs in TensorFlow

Static Unrolling Through Time

Dynamic Unrolling Through Time

Handling Variable-Length Input Sequences

Handling Variable-Length Output Sequences

Training RNNs

Training a Sequence Classifier

Training to Predict Time Series

Creative RNN

Deep RNNs

Distributing a Deep RNN Across Multiple GPUs

Applying Dropout

The Difficulty of Training over Many Time Steps

LSTM Cell

Peephole Connections

GRU Cell

Natural Language Processing

Word Embeddings

An Encoder–Decoder Network for Machine Translation

Exercises

Chapter 15. Autoencoders

Efficient Data Representations

Performing PCA with an Undercomplete Linear Autoencoder

Stacked Autoencoders

TensorFlow Implementation

Tying Weights

Training One Autoencoder at a Time

Visualizing the Reconstructions

Visualizing Features

Unsupervised Pretraining Using Stacked Autoencoders

Denoising Autoencoders

TensorFlow Implementation

Sparse Autoencoders

TensorFlow Implementation

Variational Autoencoders

Generating Digits

Other Autoencoders

Exercises

Chapter 16. Reinforcement Learning

Learning to Optimize Rewards

Policy Search

Introduction to OpenAI Gym

Neural Network Policies

Evaluating Actions: The Credit Assignment Problem

Policy Gradients

Markov Decision Processes

Temporal Difference Learning and Q-Learning

Exploration Policies

Approximate Q-Learning

Learning to Play Ms. Pac-Man Using Deep Q-Learning

Exercises

Thank You!

Appendix A. Exercise Solutions

Chapter 1: The Machine Learning Landscape

Chapter 2: End-to-End Machine Learning Project

Chapter 3: Classification

Chapter 4: Training Models

Chapter 5: Support Vector Machines

Chapter 6: Decision Trees

Chapter 7: Ensemble Learning and Random Forests

Chapter 8: Dimensionality Reduction

Chapter 9: Up and Running with TensorFlow

Chapter 10: Introduction to Artificial Neural Networks

Chapter 11: Training Deep Neural Nets

Chapter 12: Distributing TensorFlow Across Devices and Servers

Chapter 13: Convolutional Neural Networks

Chapter 14: Recurrent Neural Networks

Chapter 15: Autoencoders

Chapter 16: Reinforcement Learning

Appendix B. Machine Learning Project Checklist

Frame the Problem and Look at the Big Picture

Get the Data

Explore the Data

Prepare the Data

Short-List Promising Models

Fine-Tune the System

Present Your Solution

Launch!

Appendix C. SVM Dual Problem

Appendix D. Autodiff

Manual Differentiation

Symbolic Differentiation

Numerical Differentiation

Forward-Mode Autodiff

Reverse-Mode Autodiff

Appendix E. Other Popular ANN Architectures

Hopfield Networks

Boltzmann Machines

Restricted Boltzmann Machines

Deep Belief Nets

Self-Organizing Maps

Index

About the Author

Colophon
