2019/9/23

通天塔 LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain

通天塔 (/)

作者\标题\内容

LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and

Mapping on Variable Terrain

LeGO-LOAM:轻型和地面优化的激光雷达测距和可变地形测绘

论文:http://static.tongtianta.site/paper_pdf/1f7896c2-08b7-11e9-b8e4-00163e08bb86.pdf

(http://static.tongtianta.site/paper_pdf/1f7896c2-08b7-11e9-b8e4-00163e08bb86.pdf)

报错 申请删除

Tixiao Shan and Brendan Englot

Tixiao Shan and Brendan Englot

Abstract— We propose a lightweight and ground-optimized lidar odometry and mapping method, LeGO-LOAM, for realtime six

degree-of-freedom pose estimation with ground vehicles. LeGO-LOAM is lightweight, as it can achieve realtime pose estimation

on a low-power embedded system. LeGOLOAM is ground-optimized, as it leverages the presence of a ground plane in its

segmentation and optimization steps. We first apply point cloud segmentation to filter out noise, and feature extraction to obtain

distinctive planar and edge features. A two-step Levenberg-Marquardt optimization method then uses the planar and edge

features to solve different components of the six degree-of-freedom transformation across consecutive scans. We compare the

performance of LeGO-LOAM with a state-of-the-art method, LOAM, using datasets gathered from variable-terrain environments

with ground vehicles, and show that LeGO-LOAM achieves similar or better accuracy with reduced computational expense. We

also integrate LeGO-LOAM into a SLAM framework to eliminate the pose estimation error caused by drift, which is tested using

the KITTI dataset.

摘要 - 我们提出了一种轻量级和地面优化的激光雷达测距和测绘方法LeGO-LOAM,用于与地面车辆进行实时六自由度

姿态估计。LeGO-LOAM重量轻,因为它可以在低功耗嵌入式系统上实现实时姿态估计。LeGOLOAM经过地面优化,因

为它在分割和优化步骤中利用了地平面的存在。我们首先应用点云分割来滤除噪声,并进行特征提取以获得独特的平面

和边缘特征。然后,两步Levenberg-Marquardt优化方法使用平面和边缘特征来解决连续扫描中六自由度变换的不同分

量。我们使用从地形车辆的变地形环境收集的数据集,将LeGO-LOAM的性能与最先进的方法LOAM进行比较,并表明

LeGO-LOAM在降低计算成本的同时实现了类似或更好的准确性。我们还将LeGO-LOAM集成到SLAM框架中,以消除由

漂移引起的姿态估计误差,该误差使用KITTI数据集进行测试。

I. INTRODUCTION

一,导言

Among the capabilities of an intelligent robot, mapbuilding and state estimation are among the most fundamental prerequisites.

Great efforts have been devoted to achieving real-time 6 degree-of-freedom simultaneous localization and mapping (SLAM) with

vision-based and lidar-based methods. Although vision-based methods have advantages in loop-closure detection, their sensitivity

http://tongtianta.site/paper/8117

1/24

搜索�

通天塔 LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain

2019/9/23

to illumination and viewpoint change may make such capabilities unreliable if used as the sole navigation sensor. On the other

hand, lidarbased methods will function even at night, and the high resolution of many 3D lidars permits the capture of the fine

details of an environment at long ranges, over a wide aperture. Therefore, this paper focuses on using 3D lidar to support real-

time state estimation and mapping.

在智能机器人的功能中,地图构建和状态估计是最基本的先决条件。我们致力于通过基于视觉和基于激光雷达的方法实

现实时6自由度同时定位和制图(SLAM)。虽然基于视觉的方法在闭环检测中具有优势,但是如果用作唯一的导航传感

器,它们对照明和视点变化的敏感性可能使这些能力不可靠。另一方面,基于激光雷达的方法即使在夜晚也能发挥作

用,并且许多3D激光雷达的高分辨率允许在很宽的光圈范围内捕捉远距离环境的细节。因此,本文重点介绍如何使用

3D激光雷达来支持实时状态估计和映射。

The typical approach for finding the transformation between two lidar scans is iterative closest point (ICP) [1]. By finding

correspondences at a point-wise level, ICP aligns two sets of points iteratively until stopping criteria are satisfied. When the

scans include large quantities of points, ICP may suffer from prohibitive computational cost. Many variants of ICP have been

proposed to improve its efficiency and accuracy [2]. [3] introduces a point-to-plane ICP variant that matches points to local

planar patches. Generalized-ICP [4] proposes a method that matches local planar patches from both scans. In addition, several

ICP variants have leveraged parallel computing for improved efficiency [5]–[8].

在两个激光雷达扫描之间进行转换的典型方法是迭代最近点(ICP)[1]。通过在逐点水平上找到对应关系,ICP迭代地

对齐两组点,直到满足停止标准。当扫描包括大量点时,ICP可能遭受过高的计算成本。已经提出许多ICP的变体来提高

其效率和准确度[2]。 [3]介绍了一种点到平面的ICP变体,它将点与局部平面斑块相匹配。Generalized-ICP [4]提出了一

种匹配两次扫描的局部平面贴片的方法。此外,一些ICP变体利用并行计算来提高效率[5] - [8]。

T. Shan and B. Englot are with the Department of Mechanical Engineering, Stevens Institute of Technology, Castle Point on

Hudson, Hoboken NJ 07030 USA,

T. Shan和B. Englot是机械工程系,史蒂文斯理工学院,Castle Point on Hudson,Hoboken NJ 07030 USA,

.

。

Feature-based matching methods are attracting more attention, as they require less computational resources by extracting

representative features from the environment. These features should be suitable for effective matching and invariant of point-of-

view. Many detectors, such as Point Feature Histograms (PFH) [9] and Viewpoint Feature Histograms (VFH) [10], have been

proposed for extracting such features from point clouds using simple and efficient techniques. A method for extracting general-

purpose features from point clouds using a Kanade-Tomasi corner detector is introduced in [11]. A framework for extracting line

and plane features from dense point clouds is discussed in [12].

基于特征的匹配方法正在吸引更多关注,因为它们通过从环境中提取代表性特征而需要较少的计算资源。这些特征应该

适用于有效匹配和视点不变。已经提出了许多检测器,例如点特征直方图(PFH)[9]和视点特征直方图(VFH)[10],

用于使用简单有效的技术从点云中提取这些特征。在[11]中介绍了使用Kanade-Tomasi角点检测器从点云中提取通用特征

的方法。在[12]中讨论了从密集点云中提取线和平面特征的框架。

Many algorithms that use features for point cloud registration have also been proposed. [13] and [14] present a keypoint

selection algorithm that performs point curvature calculations in a local cluster. The selected keypoints are then used to perform

matching and place recognition. By projecting a point cloud onto a range image and analyzing the second derivative of the depth

values, [15] selects features from points that have high curvature for matching and place recognition. Assuming the environment

is composed of planes, a plane-based registration algorithm is proposed in [16]. An outdoor environment, e.g., a forest, may limit

the application of such a method. A collar line segments (CLS) method, which is especially designed for Velodyne lidar, is

presented in [17]. CLS randomly generates lines using points from two consecutive “rings” of a scan. Thus two line clouds are

generated and used for registration. However, this method suffers from challenges arising from the random generation of lines. A

http://tongtianta.site/paper/8117

2/24

�

通天塔 LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain

2019/9/23

segmentation-based registration algorithm is proposed in [18]. SegMatch first applies segmentation to a point cloud. Then a

feature vector is calculated for each segment based on its eigenvalues and shape histograms. A random forest is used to match

the segments from two scans. Though this method can be used for online pose estimation, it can only provide localization

updates at about 1Hz.

还提出了许多使用点云注册功能的算法。 [13]和[14]提出了一种关键点选择算法,该算法在本地集群中执行点曲率计

算。然后使用所选关键点来执行匹配和位置识别。通过将点云投影到距离图像上并分析深度值的二阶导数,[15]从具有

高曲率的点中选择特征以进行匹配和位置识别。假设环境由平面组成,在[16]中提出了基于平面的配准算法。室外环境

(例如森林)可能限制这种方法的应用。在[17]中提出了一种专为Velodyne激光雷达设计的领线段(CLS)方法。CLS使

用来自扫描的两个连续“环”的点随机生成线。因此,生成两个线云并用于注册。然而,该方法遭受由随机生成线产生的

挑战。在[18]中提出了基于分割的配准算法。SegMatch首先将分段应用于点云。然后基于其特征值和形状直方图为每个

片段计算特征向量。随机森林用于匹配来自两次扫描的片段。虽然这种方法可以用于在线姿态估计,但它只能提供大约

1Hz的定位更新。

A low-drift and real-time lidar odometry and mapping (LOAM) method is proposed in [19] and [20]. LOAM performs point

feature to edge/plane scan-matching to find correspondences between scans. Features are extracted by calculating the roughness

of a point in its local region. The points with high roughness values are selected as edge features. Similarly, the points with low

roughness values are designated planar features. Real-time performance is achieved by novelly dividing the estimation problem

across two individual algorithms. One algorithm runs at high frequency and estimates sensor velocity at low accuracy. The other

algorithm runs at low frequency but returns high accuracy motion estimation. The two estimates are fused together to produce a

single motion estimate at both high frequency and high accuracy. LOAM’s resulting accuracy is the best achieved by a lidar-only

estimation method on the KITTI odometry benchmark site [21].

在[19]和[20]中提出了一种低漂移和实时激光雷达测距和测绘(LOAM)方法。LOAM对边缘/平面扫描匹配执行点特征以

匹配扫描之间的对应关系。通过计算其局部区域中的点的粗糙度来提取特征。选择具有高粗糙度值的点作为边缘特征。

类似地,具有低粗糙度值的点被指定为平面特征。通过在两个单独的算法之间新颖地划分估计问题来实现实时性能。一

种算法以高频率运行并以低精度估计传感器速度。另一种算法以低频运行但返回高精度运动估计。将两个估计值融合在

一起以产生高频率和高精度的单个运动估计。LOAM的最终精确度是KITTI测距基准站点上仅限激光雷达的估算方法所

能达到的最佳效果[21]。

In this work, we pursue reliable, real-time six degreeof-freedom pose estimation for ground vehicles equipped with 3D lidar, in a

manner that is amenable to efficient implementation on a small-scale embedded system. Such a task is non-trivial for several

reasons. Many unmanned ground vehicles (UGVs) do not have suspensions or powerful computational units due to their limited

size. Non-smooth motion is frequently encountered by small UGVs driving on variable terrain, and as a result, the acquired data

is often distorted. Reliable feature correspondences are also hard to find between two consecutive scans due to large motions

with limited overlap. Besides that, the large quantities of points received from a 3D lidar poses a challenge to real-time processing

using limited on-board computational resources. When we implement LOAM for such tasks, we can obtain low-drift motion

estimation when a UGV is operated with smooth motion admist stable features, and supported by sufficient computational

resources. However, the performance of LOAM deteriorates when resources are limited. Due to the need to compute the

roughness of every point in a dense 3D point cloud, the update frequency of feature extraction on a lightweight embedded

system cannot always keep up with the sensor update frequency. Operation of UGVs in noisy environments also poses

challenges for LOAM. Since the mounting position of a lidar is often close to the ground on a small UGV, sensor noise from the

ground may be a constant presence. For example, range returns from grass may result in high roughness values. As a

consequence, unreliable edge features may be extracted from these points. Similarly, edge or planar features may also be

http://tongtianta.site/paper/8117

3/24

�

通天塔 LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain

2019/9/23

extracted from points returned from tree leaves. Such features are usually not reliable for scan-matching, as the same grass blade

or leaf may not be seen in two consecutive scans. Using these features may lead to inaccurate registration and large drift.

在这项工作中,我们为配备3D激光雷达的地面车辆追求可靠,实时的六自由度姿态估计,其方式适合在小规模嵌入式系

统上有效实施。由于几个原因,这样的任务非常重要。由于尺寸有限,许多无人驾驶地面车辆(UGV)没有悬架或强大

的计算单元。在不同地形上行驶的小型UGV经常遇到非平滑运动,因此,所获取的数据经常失真。由于重叠有限的大运

动,在两次连续扫描之间也很难找到可靠的特征对应关系。除此之外,从3D激光雷达接收的大量点对使用有限的机载计

算资源的实时处理提出了挑战。当我们为这些任务实现LOAM时,我们可以获得低漂移运动估计,当UGV以平滑运动提

供稳定特征运行时,并且由足够的计算资源支持。然而,当资源有限时,LOAM的性能会恶化。由于需要计算密集3D点

云中每个点的粗糙度,因此轻量级嵌入式系统上的特征提取更新频率无法始终跟上传感器更新频率。在嘈杂环境中操作

UGV也对LOAM提出了挑战。由于激光雷达的安装位置通常在小UGV上接近地面,因此来自地面的传感器噪声可能是恒

定的存在。例如,草的范围返回可能导致高粗糙度值。结果,可以从这些点提取不可靠的边缘特征。类似地,也可以从

树叶返回的点提取边缘或平面特征。这些特征对于扫描匹配通常是不可靠的,因为在两次连续扫描中可能看不到相同的

草叶或叶片。使用这些功能可能会导致注册不准确和大漂移。

during the first step. In the second step, the rest of the

is obtained by matching edge features extracted from the segmented point cloud. We also

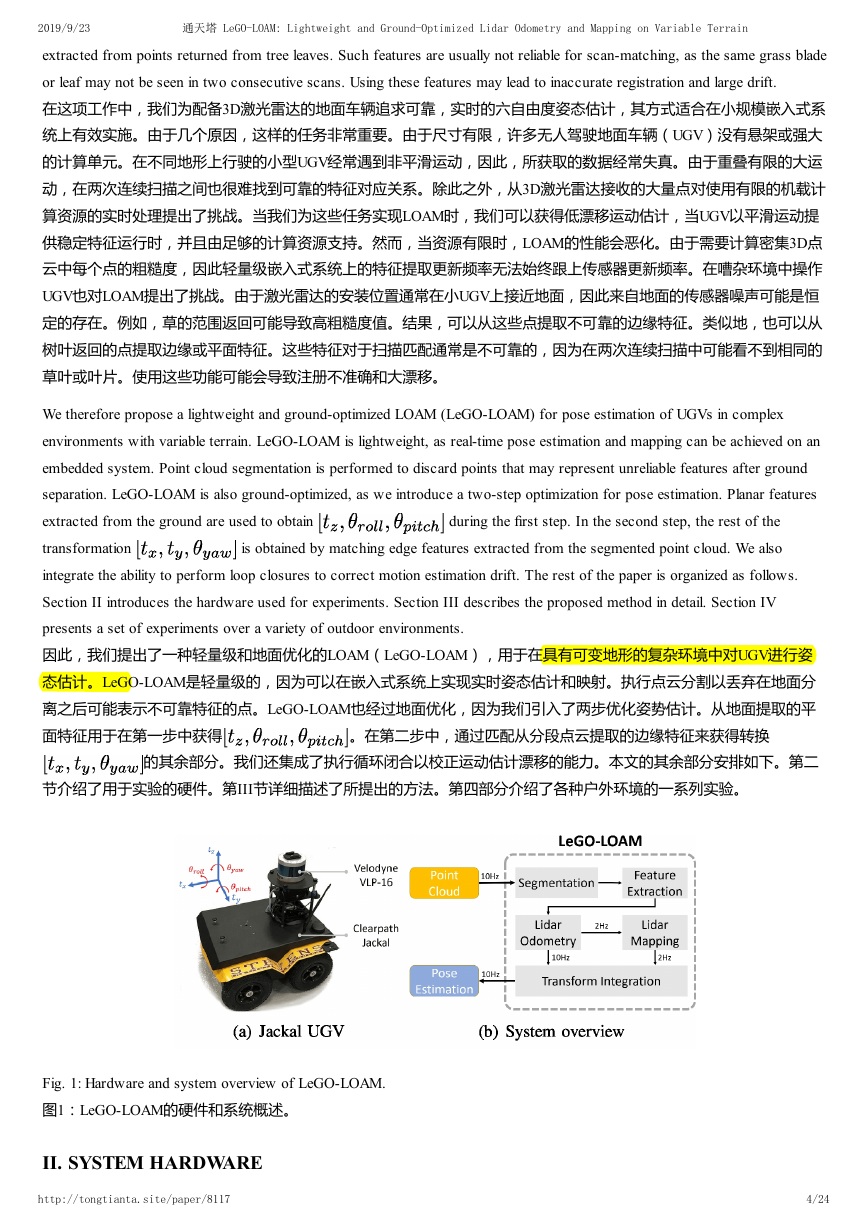

We therefore propose a lightweight and ground-optimized LOAM (LeGO-LOAM) for pose estimation of UGVs in complex

environments with variable terrain. LeGO-LOAM is lightweight, as real-time pose estimation and mapping can be achieved on an

embedded system. Point cloud segmentation is performed to discard points that may represent unreliable features after ground

separation. LeGO-LOAM is also ground-optimized, as we introduce a two-step optimization for pose estimation. Planar features

extracted from the ground are used to obtain

transformation

integrate the ability to perform loop closures to correct motion estimation drift. The rest of the paper is organized as follows.

Section II introduces the hardware used for experiments. Section III describes the proposed method in detail. Section IV

presents a set of experiments over a variety of outdoor environments.

因此,我们提出了一种轻量级和地面优化的LOAM(LeGO-LOAM),用于在具有可变地形的复杂环境中对UGV进行姿

态估计。LeGO-LOAM是轻量级的,因为可以在嵌入式系统上实现实时姿态估计和映射。执行点云分割以丢弃在地面分

离之后可能表示不可靠特征的点。LeGO-LOAM也经过地面优化,因为我们引入了两步优化姿势估计。从地面提取的平

面特征用于在第一步中获得

。在第二步中,通过匹配从分段点云提取的边缘特征来获得转换

的其余部分。我们还集成了执行循环闭合以校正运动估计漂移的能力。本文的其余部分安排如下。第二

节介绍了用于实验的硬件。第III节详细描述了所提出的方法。第四部分介绍了各种户外环境的一系列实验。

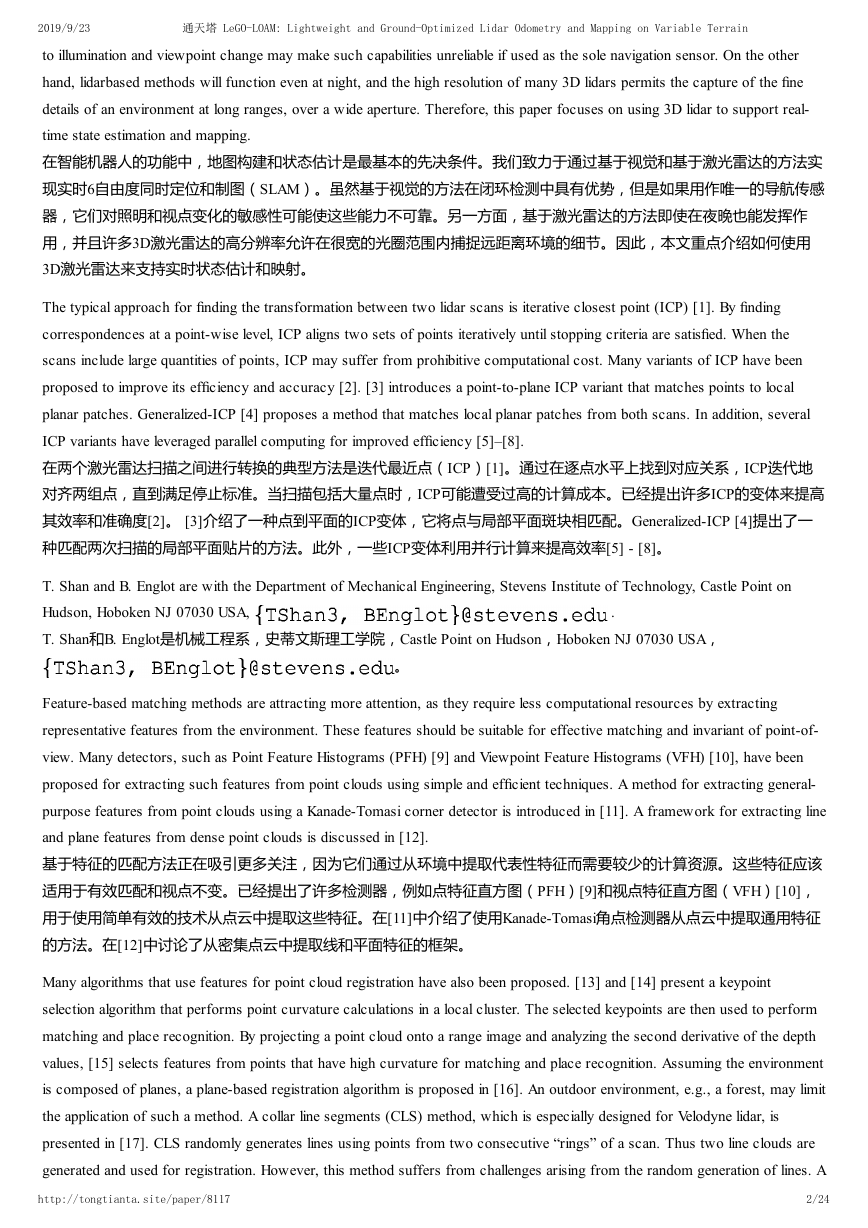

Fig. 1: Hardware and system overview of LeGO-LOAM.

图1:LeGO-LOAM的硬件和系统概述。

II. SYSTEM HARDWARE

http://tongtianta.site/paper/8117

4/24

�

2019/9/23

通天塔 LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain

II。系统硬件

The framework proposed in this paper is validated using datasets gathered from Velodyne VLP-16 and HDL-64E 3D lidars. The

VLP-16 measurement range is up to 100m with an accuracy of ± 3cm. It has a vertical field of view (FOV) of 30◦(

) and

a horizontal FOV of 360◦. The 16-channel sensor provides a vertical angular resolution of 2◦. The horizontal angular resolution

varies from 0.1◦ to 0.4◦ based on the rotation rate. Throughout the paper, we choose a scan rate of 10Hz, which provides a

horizontal angular resolution of 0.2◦. The HDL-64E (explored in this work via the KITTI dataset) also has a horizontal FOV of

360◦ but 48 more channels. The vertical FOV of the HDL-64E is 26.9◦.

本文提出的框架使用从Velodyne VLP-16和HDL-64E 3D激光雷达收集的数据集进行验证。VLP-16测量范围高达100米,精

度为±3厘米。它的垂直视场(FOV)为30°(

),水平视场为360°。16通道传感器提供2°的垂直角分辨率。根据旋

转速率,水平角分辨率从0.1°到0.4°不等。在整篇论文中,我们选择10Hz的扫描速率,其提供0.2°的水平角分辨率。

HDL-64E(通过KITTI数据集在这项工作中探讨)也具有360°的水平FOV,但还有48个通道。 HDL-64E的垂直FOV为

26.9°。

The UGV used in this paper is the Clearpath Jackal. Powered by a 270 Watt hour Lithium battery, it has a maximum speed of

2.0m/s and maximum payload of 20kg. The Jackal is also equipped with a low-cost inertial measurement unit (IMU), the CH

Robotics UM6 Orientation Sensor.

本文中使用的UGV是Clearpath Jackal。由270瓦时的锂电池供电,最大速度为2.0米/秒,最大有效载荷为20千克。Jackal还

配备了低成本惯性测量单元(IMU),CH Robotics UM6方向传感器。

The proposed framework is validated on two computers: an Nvidia Jetson TX2 and a laptop with a 2.5GHz i74710MQ CPU. The

Jetson TX2 is an embedded computing device that is equipped with an ARM Cortex-A57 CPU. The laptop CPU was selected to

match the computing hardware used in [19] and [20]. The experiments shown in this paper use the CPUs of these systems only.

建议的框架在两台计算机上得到验证:Nvidia Jetson TX2和配备2.5GHz i74710MQ CPU的笔记本电脑。Jetson TX2是一款

嵌入式计算设备,配备ARM Cortex-A57 CPU。选择笔记本电脑CPU以匹配[19]和[20]中使用的计算硬件。本文中显示的

实验仅使用这些系统的CPU。

III. LIGHTWEIGHT LIDAR ODOMETRY AND MAPPING

III。轻量级激光雷达测量和绘图

A. System Overview

A.系统概述

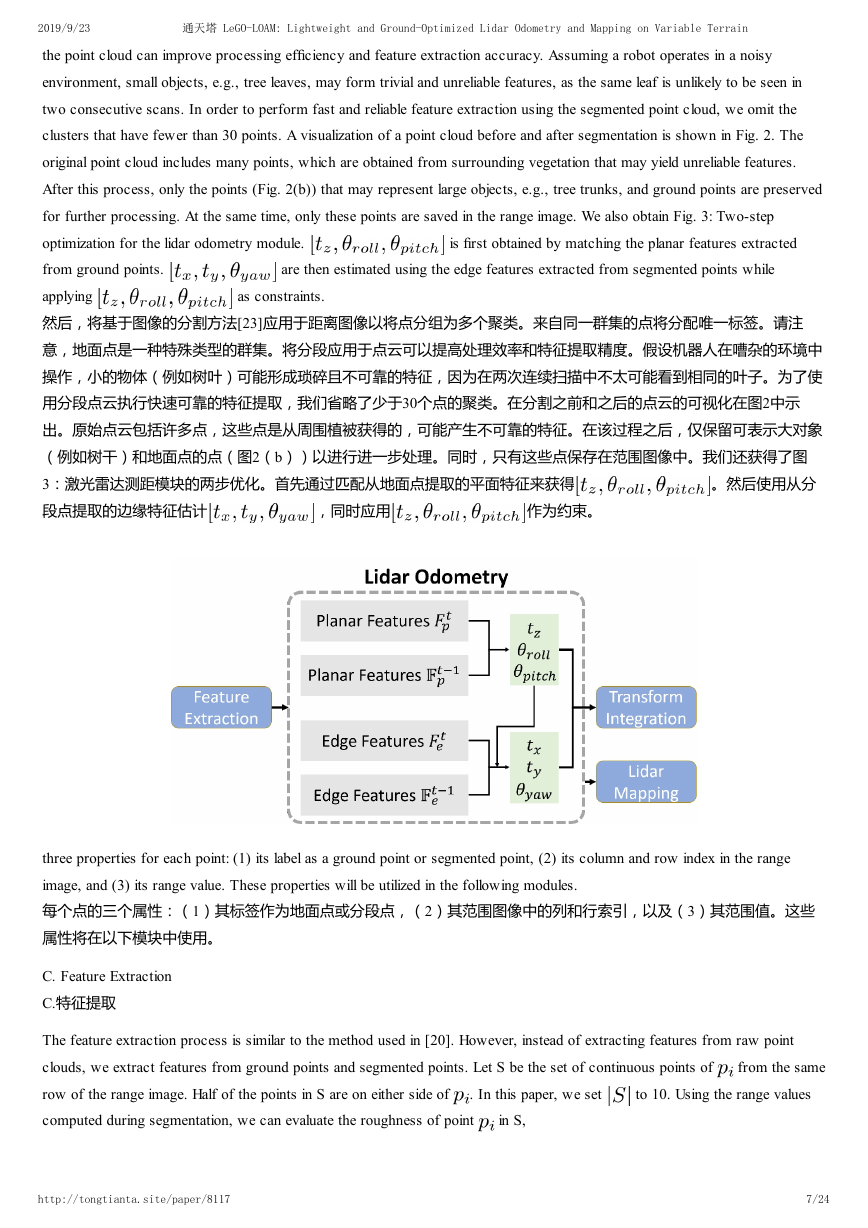

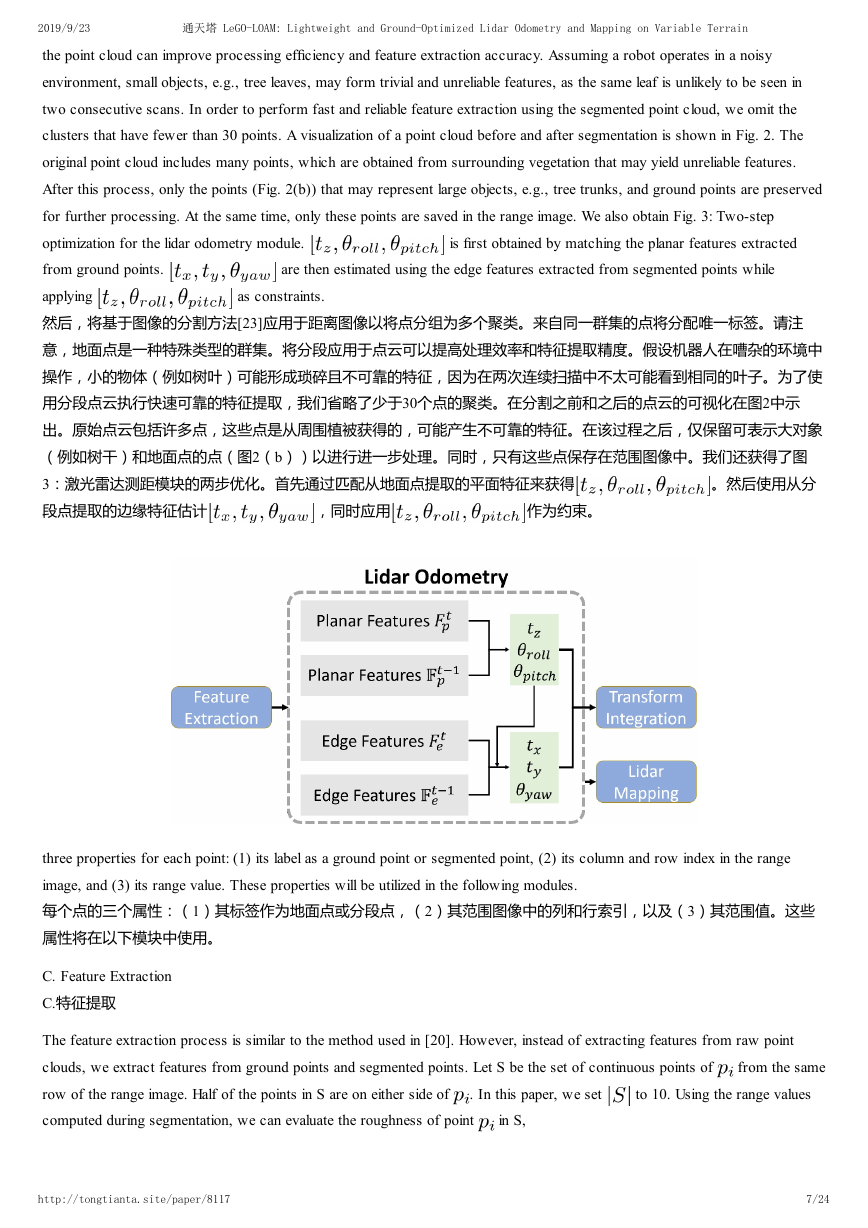

An overview of the proposed framework is shown in Figure 1. The system receives input from a 3D lidar and outputs 6 DOF

pose estimation. The overall system is divided into five modules. The first, segmentation, takes a single scan’s point cloud and

projects it onto a range image for segmentation. The segmented point cloud is then sent to the feature extraction module. Then,

lidar odometry uses features extracted from the previous module to find the transformation relating consecutive scans. The

features are further processed in lidar mapping, which registers them to a global point cloud map. At last, the transform

integration module fuses the pose estimation results from lidar odometry and lidar mapping and outputs the final pose estimate.

The proposed system seeks improved efficiency and accuracy for ground vehicles, with respect to the original, generalized

LOAM framework of [19] and [20]. The details of these modules are introduced below.

拟议框架的概述如图1所示。系统接收来自3D激光雷达的输入并输出6 DOF姿势估计。整个系统分为五个模块。第一个

分割采用单个扫描的点云,并将其投影到范围图像上进行分割。然后将分段的点云发送到特征提取模块。然后,激光雷

达测距仪使用从前一模块中提取的特征来找到与连续扫描相关的变换。这些特征在激光雷达映射中进一步处理,激光雷

达映射将它们注册到全局点云图。最后,变换积分模块融合了激光雷达测距和激光雷达测绘的姿态估计结果,并输出最

http://tongtianta.site/paper/8117

5/24

�

通天塔 LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain

2019/9/23

终的姿态估计。与[19]和[20]的原始广义LOAM框架相比,所提出的系统寻求提高地面车辆的效率和准确性。下面介绍这

些模块的细节。

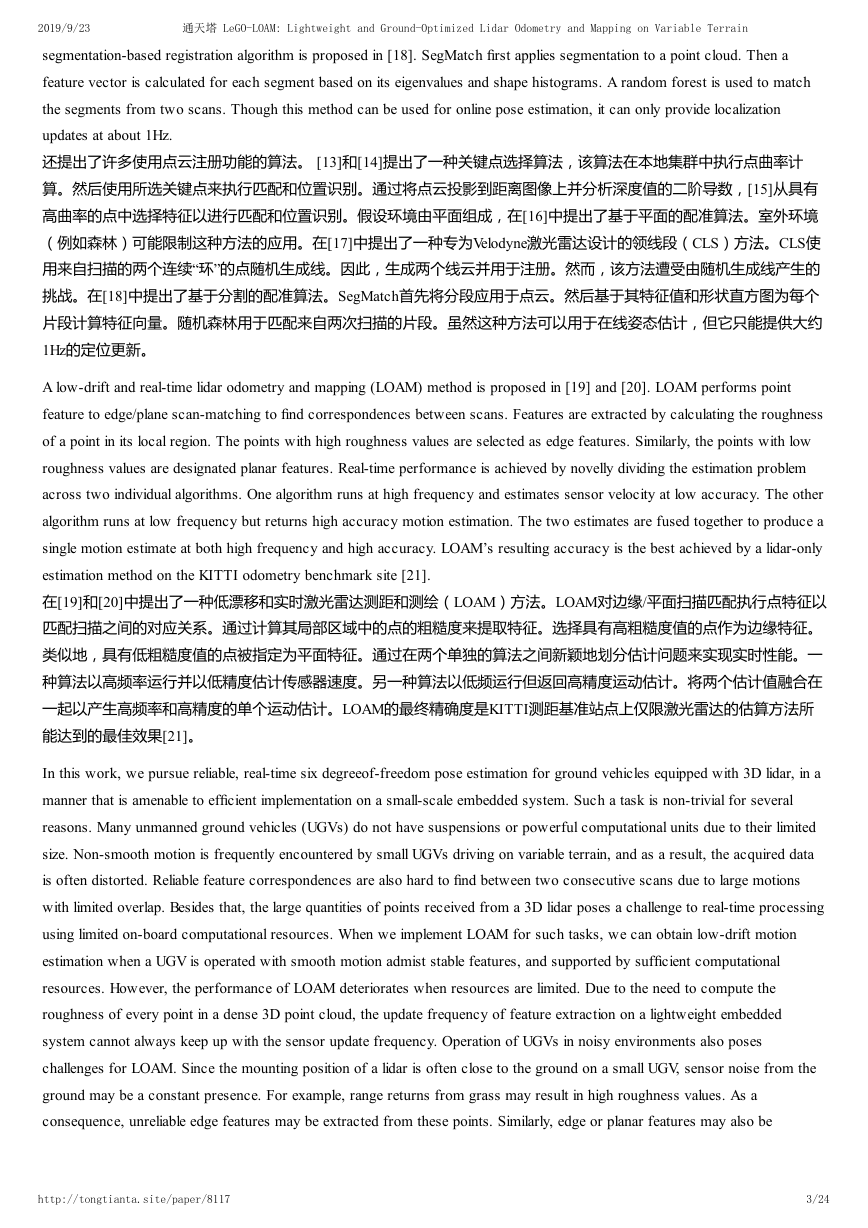

Fig. 2: Feature extraction process for a scan in noisy environment. The original point cloud is shown in (a). In (b), the red points

are labeled as ground points. The rest of the points are the points that remain after segmentation. In (c), blue and yellow points

indicate edge and planar features in

. In (d), the green and pink points represent edge and planar features in

and

and

respectively.

图2:在嘈杂环境中扫描的特征提取过程。原始点云显示在(a)中。在(b)中,红点被标记为地面点。其余的点是分

割后剩余的点。在(c)中,蓝色和黄色点表示 和 中的边缘和平面特征。在(d)中,绿色和粉红色点分别代表

和 中的边缘和平面特征。

B. Segmentation

B.细分

Let

is first projected onto a range

image. The resolution of the projected range image is 1800 by 16, since the VLP-16 has horizontal and vertical angular resolution

of 0.2◦ and 2◦ respectively. Each valid point

is now represented by a unique pixel in the range image. The range value

be the point cloud acquired at time t, where

is a point in

.

in

that is associated with

represents the Euclidean distance from the corresponding point

to the sensor. Since sloped

terrain is common in many environments, we do not assume the ground is flat. A column-wise evaluation of the range image,

which can be viewed as ground plane estimation [22], is conducted for ground point extraction before segmentation. After this

process, points that may represent the ground are labeled as ground points and not used for segmentation.

成为在时间t获取的点云,其中 是 中的一个点。 首先投影到范围图像上。由于VLP-16

让

的水平和垂直角分辨率分别为0.2°和2°,因此投影距离图像的分辨率为1800 x 16。 中的每个有效点 现在由范围图像

中的唯一像素表示。与 关联的范围值 表示从对应点 到传感器的欧几里德距离。由于倾斜的地形在许多环境中很常

见,我们并不认为地面很普遍。在分割之前进行地面图像的逐列评估,其可以被视为地平面估计[22],用于地面点提

取。在此过程之后,可能代表地面的点被标记为地面点而不用于分段。

Then, an image-based segmentation method [23] is applied to the range image to group points into many clusters. Points from

the same cluster are assigned a unique label. Note that the ground points are a special type of cluster. Applying segmentation to

http://tongtianta.site/paper/8117

6/24

�

通天塔 LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain

2019/9/23

the point cloud can improve processing efficiency and feature extraction accuracy. Assuming a robot operates in a noisy

environment, small objects, e.g., tree leaves, may form trivial and unreliable features, as the same leaf is unlikely to be seen in

two consecutive scans. In order to perform fast and reliable feature extraction using the segmented point cloud, we omit the

clusters that have fewer than 30 points. A visualization of a point cloud before and after segmentation is shown in Fig. 2. The

original point cloud includes many points, which are obtained from surrounding vegetation that may yield unreliable features.

After this process, only the points (Fig. 2(b)) that may represent large objects, e.g., tree trunks, and ground points are preserved

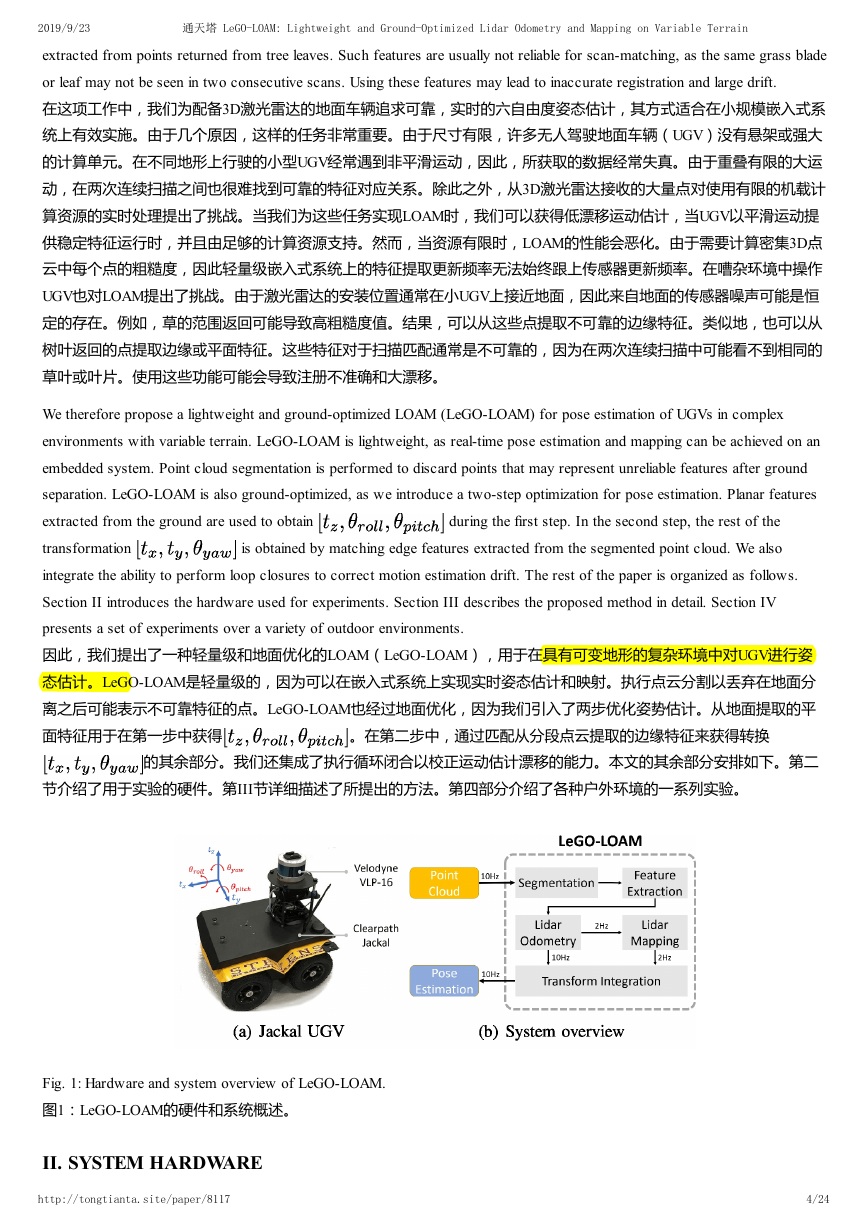

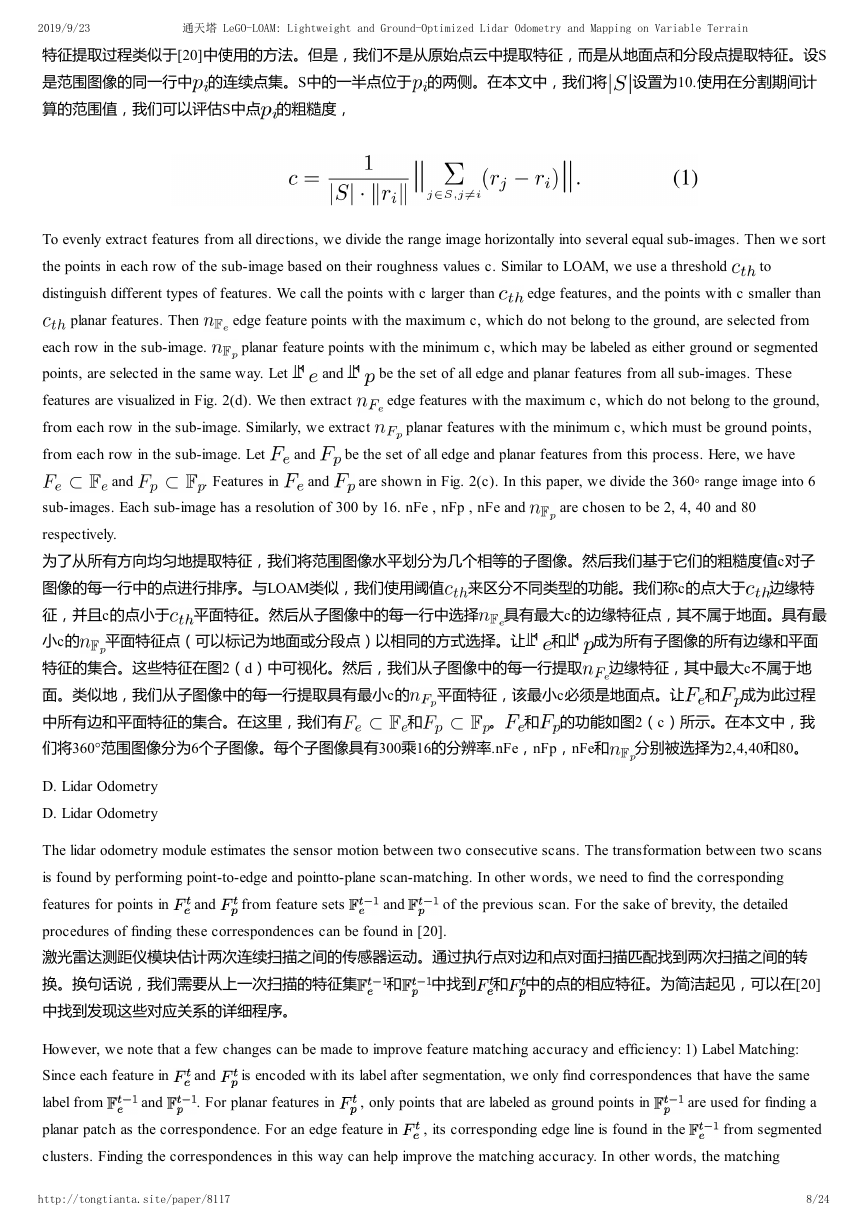

for further processing. At the same time, only these points are saved in the range image. We also obtain Fig. 3: Two-step

optimization for the lidar odometry module.

from ground points.

applying

然后,将基于图像的分割方法[23]应用于距离图像以将点分组为多个聚类。来自同一群集的点将分配唯一标签。请注

意,地面点是一种特殊类型的群集。将分段应用于点云可以提高处理效率和特征提取精度。假设机器人在嘈杂的环境中

操作,小的物体(例如树叶)可能形成琐碎且不可靠的特征,因为在两次连续扫描中不太可能看到相同的叶子。为了使

用分段点云执行快速可靠的特征提取,我们省略了少于30个点的聚类。在分割之前和之后的点云的可视化在图2中示

出。原始点云包括许多点,这些点是从周围植被获得的,可能产生不可靠的特征。在该过程之后,仅保留可表示大对象

(例如树干)和地面点的点(图2(b))以进行进一步处理。同时,只有这些点保存在范围图像中。我们还获得了图

3:激光雷达测距模块的两步优化。首先通过匹配从地面点提取的平面特征来获得

。然后使用从分

段点提取的边缘特征估计

are then estimated using the edge features extracted from segmented points while

is first obtained by matching the planar features extracted

as constraints.

,同时应用

作为约束。

three properties for each point: (1) its label as a ground point or segmented point, (2) its column and row index in the range

image, and (3) its range value. These properties will be utilized in the following modules.

每个点的三个属性:(1)其标签作为地面点或分段点,(2)其范围图像中的列和行索引,以及(3)其范围值。这些

属性将在以下模块中使用。

C. Feature Extraction

C.特征提取

The feature extraction process is similar to the method used in [20]. However, instead of extracting features from raw point

clouds, we extract features from ground points and segmented points. Let S be the set of continuous points of

row of the range image. Half of the points in S are on either side of

computed during segmentation, we can evaluate the roughness of point

. In this paper, we set

to 10. Using the range values

in S,

from the same

http://tongtianta.site/paper/8117

7/24

�

通天塔 LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain

2019/9/23

特征提取过程类似于[20]中使用的方法。但是,我们不是从原始点云中提取特征,而是从地面点和分段点提取特征。设S

是范围图像的同一行中 的连续点集。S中的一半点位于 的两侧。在本文中,我们将 设置为10.使用在分割期间计

算的范围值,我们可以评估S中点 的粗糙度,

To evenly extract features from all directions, we divide the range image horizontally into several equal sub-images. Then we sort

the points in each row of the sub-image based on their roughness values c. Similar to LOAM, we use a threshold

distinguish different types of features. We call the points with c larger than

edge features, and the points with c smaller than

to

edge feature points with the maximum c, which do not belong to the ground, are selected from

planar feature points with the minimum c, which may be labeled as either ground or segmented

planar features. Then

each row in the sub-image.

points, are selected in the same way. Let

and

features are visualized in Fig. 2(d). We then extract

from each row in the sub-image. Similarly, we extract

from each row in the sub-image. Let

and

be the set of all edge and planar features from all sub-images. These

edge features with the maximum c, which do not belong to the ground,

planar features with the minimum c, which must be ground points,

be the set of all edge and planar features from this process. Here, we have

and

. Features in

and

are shown in Fig. 2(c). In this paper, we divide the 360◦ range image into 6

are chosen to be 2, 4, 40 and 80

sub-images. Each sub-image has a resolution of 300 by 16. nFe , nFp , nFe and

respectively.

为了从所有方向均匀地提取特征,我们将范围图像水平划分为几个相等的子图像。然后我们基于它们的粗糙度值c对子

图像的每一行中的点进行排序。与LOAM类似,我们使用阈值 来区分不同类型的功能。我们称c的点大于 边缘特

征,并且c的点小于 平面特征。然后从子图像中的每一行中选择 具有最大c的边缘特征点,其不属于地面。具有最

小c的 平面特征点(可以标记为地面或分段点)以相同的方式选择。让 和 成为所有子图像的所有边缘和平面

特征的集合。这些特征在图2(d)中可视化。然后,我们从子图像中的每一行提取 边缘特征,其中最大c不属于地

面。类似地,我们从子图像中的每一行提取具有最小c的 平面特征,该最小c必须是地面点。让 和 成为此过程

。 和 的功能如图2(c)所示。在本文中,我

中所有边和平面特征的集合。在这里,我们有

们将360°范围图像分为6个子图像。每个子图像具有300乘16的分辨率.nFe,nFp,nFe和 分别被选择为2,4,40和80。

和

D. Lidar Odometry

D. Lidar Odometry

and

The lidar odometry module estimates the sensor motion between two consecutive scans. The transformation between two scans

is found by performing point-to-edge and pointto-plane scan-matching. In other words, we need to find the corresponding

features for points in

of the previous scan. For the sake of brevity, the detailed

procedures of finding these correspondences can be found in [20].

激光雷达测距仪模块估计两次连续扫描之间的传感器运动。通过执行点对边和点对面扫描匹配找到两次扫描之间的转

换。换句话说,我们需要从上一次扫描的特征集 和 中找到 和 中的点的相应特征。为简洁起见,可以在[20]

中找到发现这些对应关系的详细程序。

from feature sets

and

However, we note that a few changes can be made to improve feature matching accuracy and efficiency: 1) Label Matching:

Since each feature in

label from

and

planar patch as the correspondence. For an edge feature in

clusters. Finding the correspondences in this way can help improve the matching accuracy. In other words, the matching

is encoded with its label after segmentation, we only find correspondences that have the same

are used for finding a

from segmented

and

. For planar features in

, only points that are labeled as ground points in

, its corresponding edge line is found in the

http://tongtianta.site/paper/8117

8/24

�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc