IEEETRANSACTIONSONNEURALNETWORKS,VOL.16,NO.3,MAY2005645SurveyofClusteringAlgorithmsRuiXu,StudentMember,IEEEandDonaldWunschII,Fellow,IEEEAbstract—Dataanalysisplaysanindispensableroleforun-derstandingvariousphenomena.Clusteranalysis,primitiveexplorationwithlittleornopriorknowledge,consistsofresearchdevelopedacrossawidevarietyofcommunities.Thediversity,ononehand,equipsuswithmanytools.Ontheotherhand,theprofusionofoptionscausesconfusion.Wesurveyclusteringalgorithmsfordatasetsappearinginstatistics,computerscience,andmachinelearning,andillustratetheirapplicationsinsomebenchmarkdatasets,thetravelingsalesmanproblem,andbioin-formatics,anewfieldattractingintensiveefforts.Severaltightlyrelatedtopics,proximitymeasure,andclustervalidation,arealsodiscussed.IndexTerms—Adaptiveresonancetheory(ART),clustering,clusteringalgorithm,clustervalidation,neuralnetworks,prox-imity,self-organizingfeaturemap(SOFM).I.INTRODUCTIONWEARElivinginaworldfullofdata.Everyday,peopleencounteralargeamountofinformationandstoreorrepresentitasdata,forfurtheranalysisandmanagement.Oneofthevitalmeansindealingwiththesedataistoclassifyorgroupthemintoasetofcategoriesorclusters.Actually,asoneofthemostprimitiveactivitiesofhumanbeings[14],classi-ficationplaysanimportantandindispensableroleinthelonghistoryofhumandevelopment.Inordertolearnanewobjectorunderstandanewphenomenon,peoplealwaystrytoseekthefeaturesthatcandescribeit,andfurthercompareitwithotherknownobjectsorphenomena,basedonthesimilarityordissimilarity,generalizedasproximity,accordingtosomecer-tainstandardsorrules.“Basically,classificationsystemsareei-thersupervisedorunsupervised,dependingonwhethertheyas-signnewinputstooneofafinitenumberofdiscretesupervisedclassesorunsupervisedcategories,respectively[38],[60],[75].Insupervisedclassification,themappingfromasetofinputdatavectors(,whereistheinputspacedimensionality),toafinitesetofdiscreteclasslabels(,whereisthetotalnumberofclasstypes),ismodeledintermsofsomemathematicalfunction,whereisavectorofadjustableparameters.Thevaluesoftheseparametersarede-termined(optimized)byaninductivelearningalgorithm(alsotermedinducer),whoseaimistominimizeanempiricalriskfunctional(relatedtoaninductiveprinciple)onafinitedatasetofinput–outputexamples,,whereisthefinitecardinalityoftheavailablerepresentativedataset[38],ManuscriptreceivedMarch31,2003;revisedSeptember28,2004.ThisworkwassupportedinpartbytheNationalScienceFoundationandinpartbytheM.K.FinleyMissouriEndowment.TheauthorsarewiththeDepartmentofElectricalandComputerEngineering,UniversityofMissouri-Rolla,Rolla,MO65409USA(e-mail:rxu@umr.edu;dwunsch@ece.umr.edu).DigitalObjectIdentifier10.1109/TNN.2005.845141[60],[167].Whentheinducerreachesconvergenceortermi-nates,aninducedclassifierisgenerated[167].Inunsupervisedclassification,calledclusteringorex-ploratorydataanalysis,nolabeleddataareavailable[88],[150].Thegoalofclusteringistoseparateafiniteunlabeleddatasetintoafiniteanddiscretesetof“natural,”hiddendatastructures,ratherthanprovideanaccuratecharacterizationofunobservedsamplesgeneratedfromthesameprobabilitydistribution[23],[60].Thiscanmakethetaskofclusteringfalloutsideoftheframeworkofunsupervisedpredictivelearningproblems,suchasvectorquantization[60](seeSectionII-C),probabilitydensityfunctionestimation[38](seeSectionII-D),[60],andentropymaximization[99].Itisnoteworthythatclusteringdiffersfrommultidimensionalscaling(perceptualmaps),whosegoalistodepictalltheevaluatedobjectsinawaythatminimizesthetopographicaldistortionwhileusingasfewdimensionsaspossible.Alsonotethat,inpractice,many(predictive)vectorquantizersarealsousedfor(nonpredictive)clusteringanalysis[60].Nonpredictiveclusteringisasubjectiveprocessinnature,whichprecludesanabsolutejudgmentastotherelativeeffi-cacyofallclusteringtechniques[23],[152].AspointedoutbyBackerandJain[17],“inclusteranalysisagroupofobjectsissplitupintoanumberofmoreorlesshomogeneoussubgroupsonthebasisofanoftensubjectivelychosenmeasureofsim-ilarity(i.e.,chosensubjectivelybasedonitsabilitytocreate“interesting”clusters),suchthatthesimilaritybetweenobjectswithinasubgroupislargerthanthesimilaritybetweenobjectsbelongingtodifferentsubgroups””1.Clusteringalgorithmspartitiondataintoacertainnumberofclusters(groups,subsets,orcategories).Thereisnouniver-sallyagreedupondefinition[88].Mostresearchersdescribeaclusterbyconsideringtheinternalhomogeneityandtheexternalseparation[111],[124],[150],i.e.,patternsinthesameclustershouldbesimilartoeachother,whilepatternsindifferentclus-tersshouldnot.Boththesimilarityandthedissimilarityshouldbeexaminableinaclearandmeaningfulway.Here,wegivesomesimplemathematicaldescriptionsofseveraltypesofclus-tering,basedonthedescriptionsin[124].Givenasetofinputpatterns,whereandeachmeasureissaidtobeafeature(attribute,dimension,orvariable).•(Hard)partitionalclusteringattemptstoseeka-par-titionof,suchthat1);2);3)and.1Theprecedingquoteistakenverbatimfromverbiagesuggestedbytheanonymousassociateeditor,asuggestionwhichwegratefullyacknowledge.1045-9227/$20.00©2005IEEE�

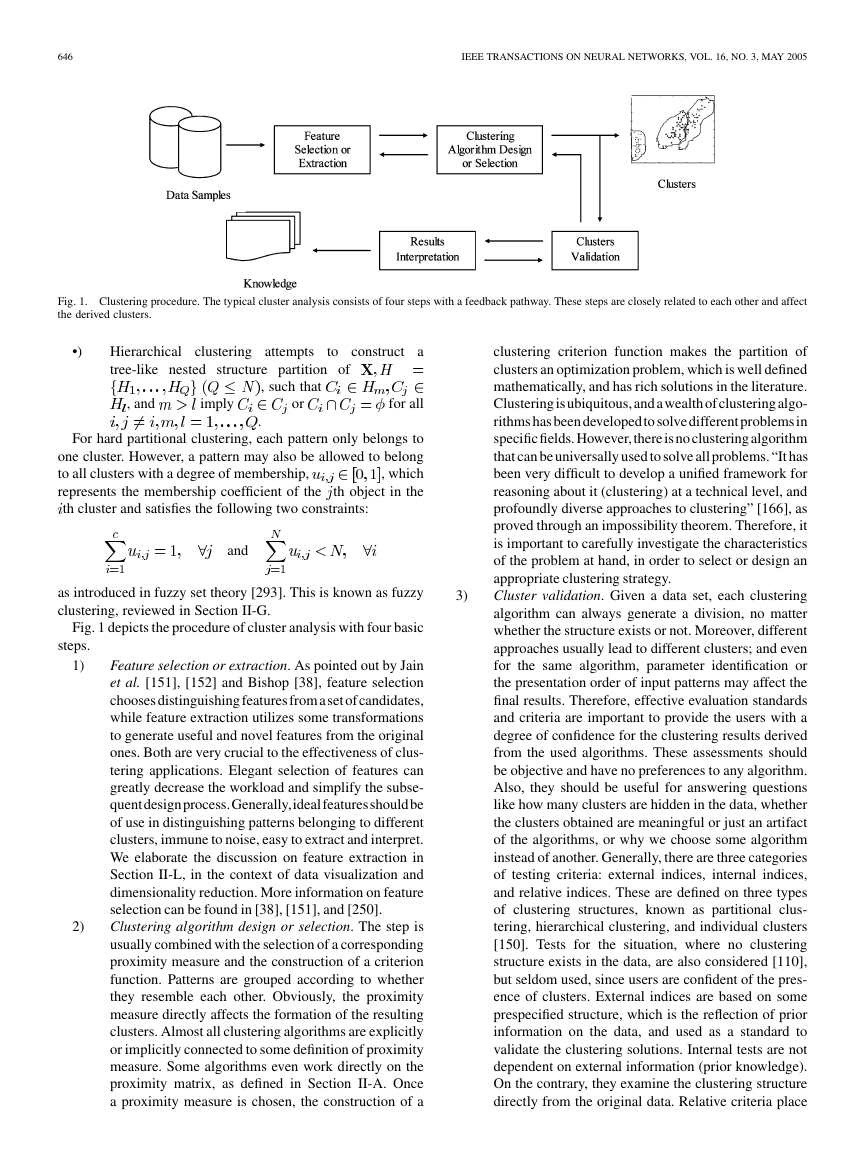

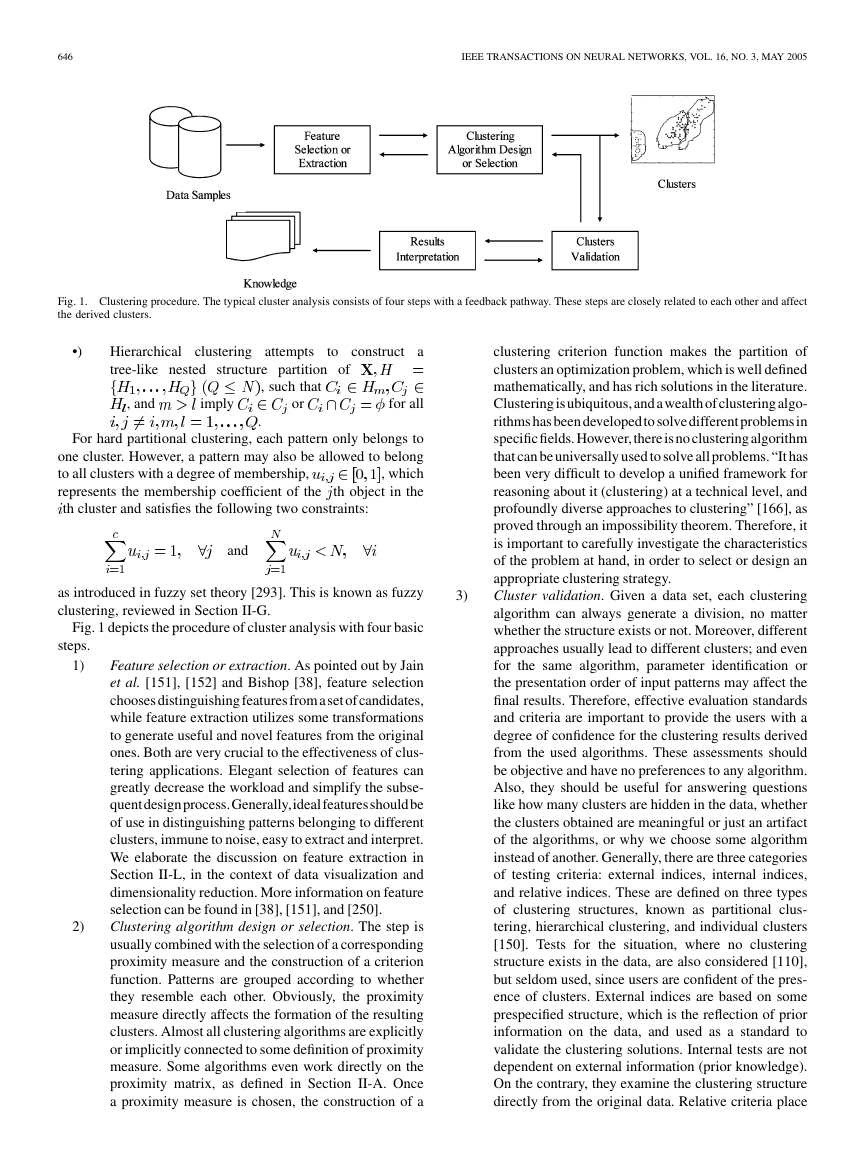

646IEEETRANSACTIONSONNEURALNETWORKS,VOL.16,NO.3,MAY2005Fig.1.Clusteringprocedure.Thetypicalclusteranalysisconsistsoffourstepswithafeedbackpathway.Thesestepsarecloselyrelatedtoeachotherandaffectthederivedclusters.•)Hierarchicalclusteringattemptstoconstructatree-likenestedstructurepartitionof,suchthat,andimplyorforall.Forhardpartitionalclustering,eachpatternonlybelongstoonecluster.However,apatternmayalsobeallowedtobelongtoallclusterswithadegreeofmembership,,whichrepresentsthemembershipcoefficientofthethobjectinthethclusterandsatisfiesthefollowingtwoconstraints:andasintroducedinfuzzysettheory[293].Thisisknownasfuzzyclustering,reviewedinSectionII-G.Fig.1depictstheprocedureofclusteranalysiswithfourbasicsteps.1)Featureselectionorextraction.AspointedoutbyJainetal.[151],[152]andBishop[38],featureselectionchoosesdistinguishingfeaturesfromasetofcandidates,whilefeatureextractionutilizessometransformationstogenerateusefulandnovelfeaturesfromtheoriginalones.Bothareverycrucialtotheeffectivenessofclus-teringapplications.Elegantselectionoffeaturescangreatlydecreasetheworkloadandsimplifythesubse-quentdesignprocess.Generally,idealfeaturesshouldbeofuseindistinguishingpatternsbelongingtodifferentclusters,immunetonoise,easytoextractandinterpret.WeelaboratethediscussiononfeatureextractioninSectionII-L,inthecontextofdatavisualizationanddimensionalityreduction.Moreinformationonfeatureselectioncanbefoundin[38],[151],and[250].2)Clusteringalgorithmdesignorselection.Thestepisusuallycombinedwiththeselectionofacorrespondingproximitymeasureandtheconstructionofacriterionfunction.Patternsaregroupedaccordingtowhethertheyresembleeachother.Obviously,theproximitymeasuredirectlyaffectstheformationoftheresultingclusters.Almostallclusteringalgorithmsareexplicitlyorimplicitlyconnectedtosomedefinitionofproximitymeasure.Somealgorithmsevenworkdirectlyontheproximitymatrix,asdefinedinSectionII-A.Onceaproximitymeasureischosen,theconstructionofaclusteringcriterionfunctionmakesthepartitionofclustersanoptimizationproblem,whichiswelldefinedmathematically,andhasrichsolutionsintheliterature.Clusteringisubiquitous,andawealthofclusteringalgo-rithmshasbeendevelopedtosolvedifferentproblemsinspecificfields.However,thereisnoclusteringalgorithmthatcanbeuniversallyusedtosolveallproblems.“Ithasbeenverydifficulttodevelopaunifiedframeworkforreasoningaboutit(clustering)atatechnicallevel,andprofoundlydiverseapproachestoclustering”[166],asprovedthroughanimpossibilitytheorem.Therefore,itisimportanttocarefullyinvestigatethecharacteristicsoftheproblemathand,inordertoselectordesignanappropriateclusteringstrategy.3)Clustervalidation.Givenadataset,eachclusteringalgorithmcanalwaysgenerateadivision,nomatterwhetherthestructureexistsornot.Moreover,differentapproachesusuallyleadtodifferentclusters;andevenforthesamealgorithm,parameteridentificationorthepresentationorderofinputpatternsmayaffectthefinalresults.Therefore,effectiveevaluationstandardsandcriteriaareimportanttoprovidetheuserswithadegreeofconfidencefortheclusteringresultsderivedfromtheusedalgorithms.Theseassessmentsshouldbeobjectiveandhavenopreferencestoanyalgorithm.Also,theyshouldbeusefulforansweringquestionslikehowmanyclustersarehiddeninthedata,whethertheclustersobtainedaremeaningfulorjustanartifactofthealgorithms,orwhywechoosesomealgorithminsteadofanother.Generally,therearethreecategoriesoftestingcriteria:externalindices,internalindices,andrelativeindices.Thesearedefinedonthreetypesofclusteringstructures,knownaspartitionalclus-tering,hierarchicalclustering,andindividualclusters[150].Testsforthesituation,wherenoclusteringstructureexistsinthedata,arealsoconsidered[110],butseldomused,sinceusersareconfidentofthepres-enceofclusters.Externalindicesarebasedonsomeprespecifiedstructure,whichisthereflectionofpriorinformationonthedata,andusedasastandardtovalidatetheclusteringsolutions.Internaltestsarenotdependentonexternalinformation(priorknowledge).Onthecontrary,theyexaminetheclusteringstructuredirectlyfromtheoriginaldata.Relativecriteriaplace�

XUANDWUNSCHII:SURVEYOFCLUSTERINGALGORITHMS647theemphasisonthecomparisonofdifferentclusteringstructures,inordertoprovideareference,todecidewhichonemaybestrevealthecharacteristicsoftheobjects.Wewillnotsurveythetopicindepthandreferinterestedreadersto[74],[110],and[150].However,wewillcovermoredetailsonhowtodeterminethenumberofclustersinSectionII-M.Somemorerecentdiscussioncanbefoundin[22],[37],[121],[180],and[181].Approachesforfuzzyclusteringvalidityarereportedin[71],[104],[123],and[220].4)Resultsinterpretation.Theultimategoalofclusteringistoprovideuserswithmeaningfulinsightsfromtheoriginaldata,sothattheycaneffectivelysolvetheproblemsencountered.Expertsintherelevantfieldsin-terpretthedatapartition.Furtheranalyzes,evenexper-iments,mayberequiredtoguaranteethereliabilityofextractedknowledge.Notethattheflowchartalsoincludesafeedbackpathway.Clusteranalysisisnotaone-shotprocess.Inmanycircumstances,itneedsaseriesoftrialsandrepetitions.Moreover,therearenouniversalandeffectivecriteriatoguidetheselectionoffeaturesandclusteringschemes.Validationcriteriaprovidesomeinsightsonthequalityofclusteringsolutions.Butevenhowtochoosetheappropriatecriterionisstillaproblemrequiringmoreefforts.Clusteringhasbeenappliedinawidevarietyoffields,rangingfromengineering(machinelearning,artificialintelli-gence,patternrecognition,mechanicalengineering,electricalengineering),computersciences(webmining,spatialdatabaseanalysis,textualdocumentcollection,imagesegmentation),lifeandmedicalsciences(genetics,biology,microbiology,paleontology,psychiatry,clinic,pathology),toearthsciences(geography.geology,remotesensing),socialsciences(soci-ology,psychology,archeology,education),andeconomics(marketing,business)[88],[127].Accordingly,clusteringisalsoknownasnumericaltaxonomy,learningwithoutateacher(orunsupervisedlearning),typologicalanalysisandpartition.Thediversityreflectstheimportantpositionofclusteringinscientificresearch.Ontheotherhand,itcausesconfusion,duetothedifferingterminologiesandgoals.Clusteringalgorithmsdevelopedtosolveaparticularproblem,inaspecializedfield,usuallymakeassumptionsinfavoroftheapplicationofinterest.Thesebiasesinevitablyaffectperformanceinotherproblemsthatdonotsatisfythesepremises.Forexample,the-meansalgorithmisbasedontheEuclideanmeasureand,hence,tendstogeneratehypersphericalclusters.Butiftherealclustersareinothergeometricforms,-meansmaynolongerbeeffective,andweneedtoresorttootherschemes.Thissituationalsoholdstrueformixture-modelclustering,inwhichamodelisfittodatainadvance.Clusteringhasalonghistory,withlineagedatingbacktoAris-totle[124].Generalreferencesonclusteringtechniquesinclude[14],[75],[77],[88],[111],[127],[150],[161],[259].Importantsurveypapersonclusteringtechniquesalsoexistintheliterature.Startingfromastatisticalpatternrecognitionviewpoint,Jain,Murty,andFlynnreviewedtheclusteringalgorithmsandotherim-portantissuesrelatedtoclusteranalysis[152],whileHansenandJaumarddescribedtheclusteringproblemsunderamathematicalprogrammingscheme[124].KolatchandHeinvestigatedappli-cationsofclusteringalgorithmsforspatialdatabasesystems[171]andinformationretrieval[133],respectively.Berkhinfurtherex-pandedthetopictothewholefieldofdatamining[33].Murtaghreportedtheadvancesinhierarchicalclusteringalgorithms[210]andBaraldisurveyedseveralmodelsforfuzzyandneuralnetworkclustering[24].Somemoresurveypaperscanalsobefoundin[25],[40],[74],[89],and[151].Inadditiontothereviewpapers,comparativeresearchonclusteringalgorithmsisalsosignificant.Rauber,Paralic,andPampalkpresentedempiricalresultsforfivetypicalclusteringalgorithms[231].Wei,Lee,andHsuplacedtheemphasisonthecomparisonoffastalgorithmsforlargedatabases[280].Scheunderscomparedseveralclusteringtechniquesforcolorimagequantization,withemphasisoncomputationaltimeandthepossibilityofobtainingglobaloptima[239].ApplicationsandevaluationsofdifferentclusteringalgorithmsfortheanalysisofgeneexpressiondatafromDNAmicroarrayexperimentsweredescribedin[153],[192],[246],and[271].Experimentalevalua-tionondocumentclusteringtechniques,basedonhierarchicaland-meansclusteringalgorithms,weresummarizedbySteinbach,Karypis,andKumar[261].Incontrasttotheabove,thepurposeofthispaperistopro-videacomprehensiveandsystematicdescriptionoftheinflu-entialandimportantclusteringalgorithmsrootedinstatistics,computerscience,andmachinelearning,withemphasisonnewadvancesinrecentyears.Theremainderofthepaperisorganizedasfollows.InSec-tionII,wereviewclusteringalgorithms,basedonthenaturesofgeneratedclustersandtechniquesandtheoriesbehindthem.Furthermore,wediscussapproachesforclusteringsequentialdata,largedatasets,datavisualization,andhigh-dimensionaldatathroughdimensionreduction.Twoimportantissuesonclusteranalysis,includingproximitymeasureandhowtochoosethenumberofclusters,arealsosummarizedinthesection.Thisisthelongestsectionofthepaper,so,forconve-nience,wegiveanoutlineofSectionIIinbulletformhere:II.ClusteringAlgorithms•A.DistanceandSimilarityMeasures(SeealsoTableI)•B.Hierarchical—AgglomerativeSinglelinkage,completelinkage,groupaveragelinkage,medianlinkage,centroidlinkage,Ward’smethod,balancediterativereducingandclusteringusinghierarchies(BIRCH),clusteringusingrep-resentatives(CURE),robustclusteringusinglinks(ROCK)—Divisivedivisiveanalysis(DIANA),monotheticanalysis(MONA)•C.SquaredError-Based(VectorQuantization)—-means,iterativeself-organizingdataanalysistechnique(ISODATA),genetic-meansalgorithm(GKA),partitioningaroundmedoids(PAM)•D.pdfEstimationviaMixtureDensities—Gaussianmixturedensitydecomposition(GMDD),AutoClass•E.GraphTheory-Based—Chameleon,Delaunaytriangulationgraph(DTG),highlyconnectedsubgraphs(HCS),clusteringiden-�

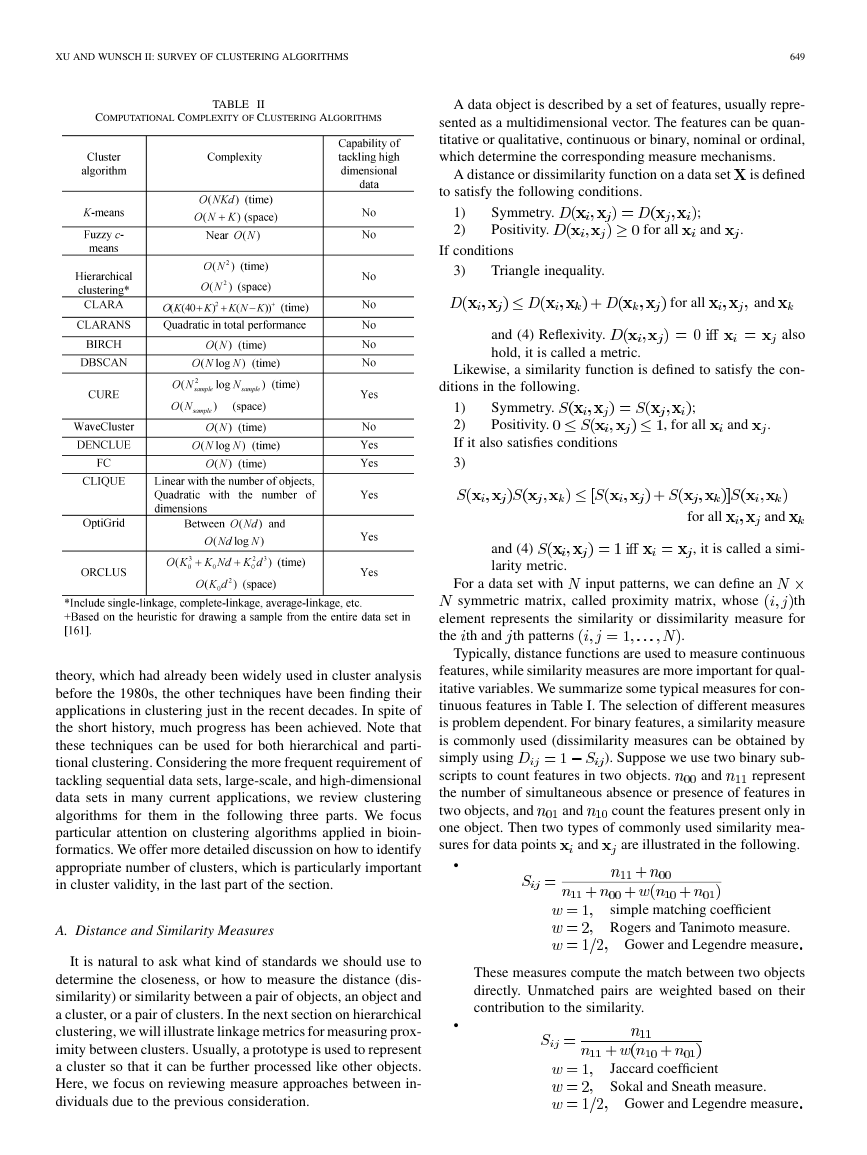

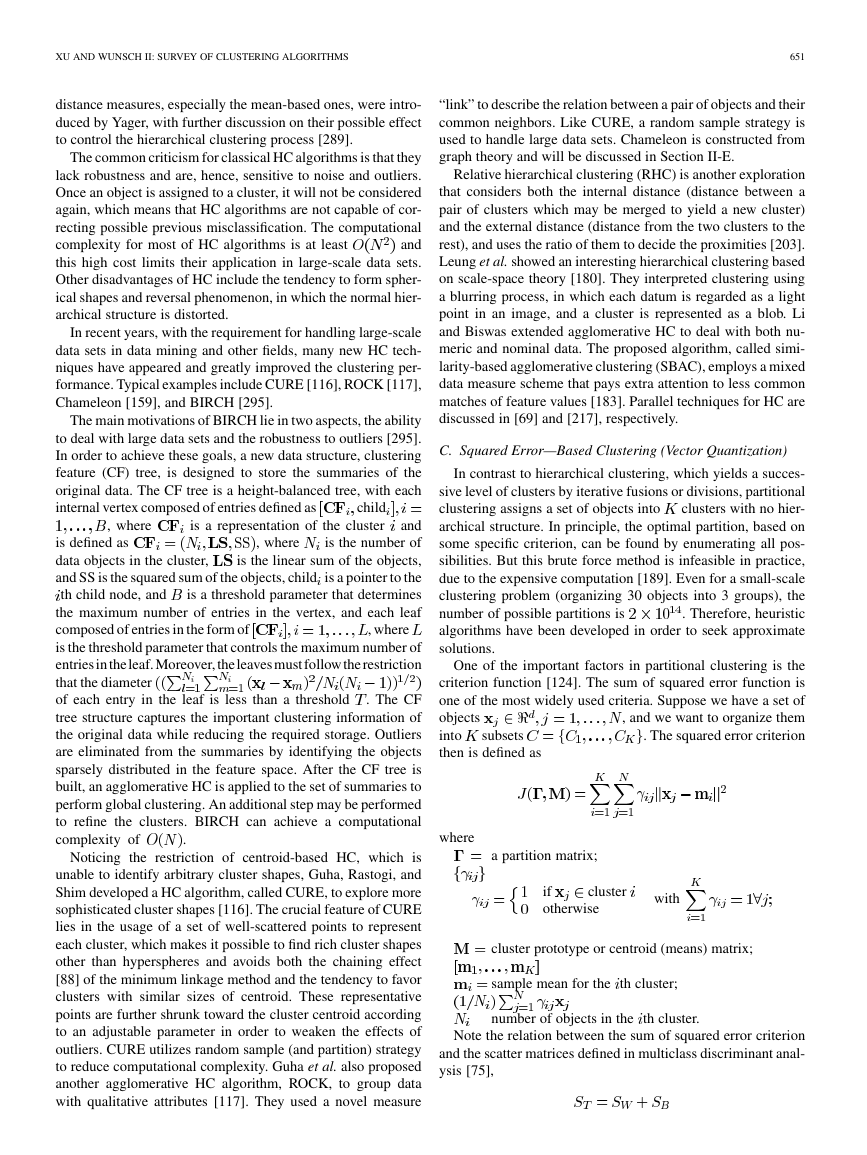

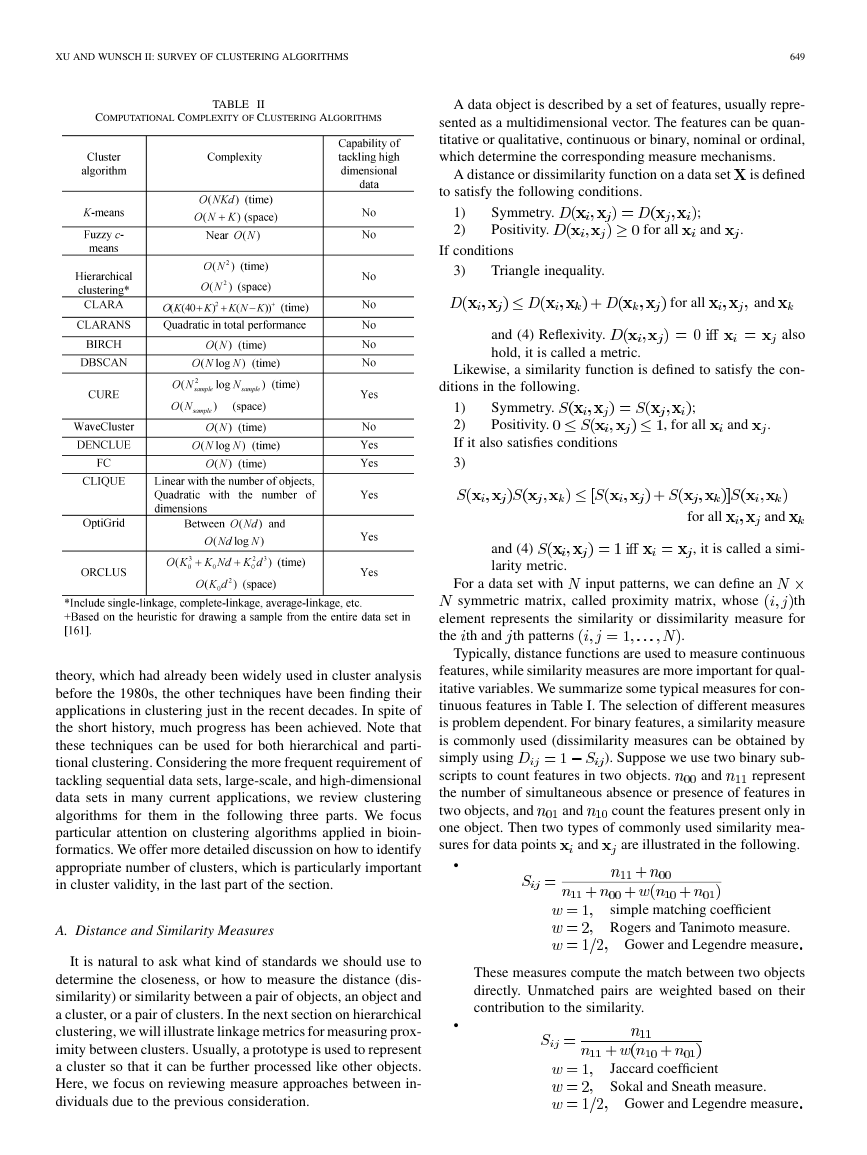

648IEEETRANSACTIONSONNEURALNETWORKS,VOL.16,NO.3,MAY2005TABLEISIMILARITYANDDISSIMILARITYMEASUREFORQUANTITATIVEFEATUREStificationviaconnectivitykernels(CLICK),clusteraffinitysearchtechnique(CAST)•F.CombinatorialSearchTechniques-Based—Geneticallyguidedalgorithm(GGA),TSclustering,SAclustering•G.Fuzzy—Fuzzy-means(FCM),mountainmethod(MM),pos-sibilistic-meansclusteringalgorithm(PCM),fuzzy-shells(FCS)•H.NeuralNetworks-Based—Learningvectorquantization(LVQ),self-organizingfeaturemap(SOFM),ART,simplifiedART(SART),hyperellipsoidalclusteringnetwork(HEC),self-split-tingcompetitivelearningnetwork(SPLL)•I.Kernel-Based—Kernel-means,supportvectorclustering(SVC)•J.SequentialData—SequenceSimilarity—Indirectsequenceclustering—Statisticalsequenceclustering•K.Large-ScaleDataSets(SeealsoTableII)—CLARA,CURE,CLARANS,BIRCH,DBSCAN,DENCLUE,WaveCluster,FC,ART•L.DatavisualizationandHigh-dimensionalData—PCA,ICA,Projectionpursuit,Isomap,LLE,CLIQUE,OptiGrid,ORCLUS•M.HowManyClusters?Applicationsintwobenchmarkdatasets,thetravelingsalesmanproblem,andbioinformaticsareillustratedinSec-tionIII.WeconcludethepaperinSectionIV.II.CLUSTERINGALGORITHMSDifferentstartingpointsandcriteriausuallyleadtodifferenttaxonomiesofclusteringalgorithms[33],[88],[124],[150],[152],[171].Aroughbutwidelyagreedframeistoclassifyclusteringtechniquesashierarchicalclusteringandparti-tionalclustering,basedonthepropertiesofclustersgenerated[88],[152].Hierarchicalclusteringgroupsdataobjectswithasequenceofpartitions,eitherfromsingletonclusterstoaclusterincludingallindividualsorviceversa,whilepartitionalclusteringdirectlydividesdataobjectsintosomeprespecifiednumberofclusterswithoutthehierarchicalstructure.Wefollowthisframeinsurveyingtheclusteringalgorithmsintheliterature.Beginningwiththediscussiononproximitymeasure,whichisthebasisformostclusteringalgorithms,wefocusonhierarchicalclusteringandclassicalpartitionalclusteringalgo-rithmsinSectionII-B–D.StartingfrompartE,weintroduceandanalyzeclusteringalgorithmsbasedonawidevarietyoftheoriesandtechniques,includinggraphtheory,combinato-rialsearchtechniques,fuzzysettheory,neuralnetworks,andkernelstechniques.Comparedwithgraphtheoryandfuzzyset�

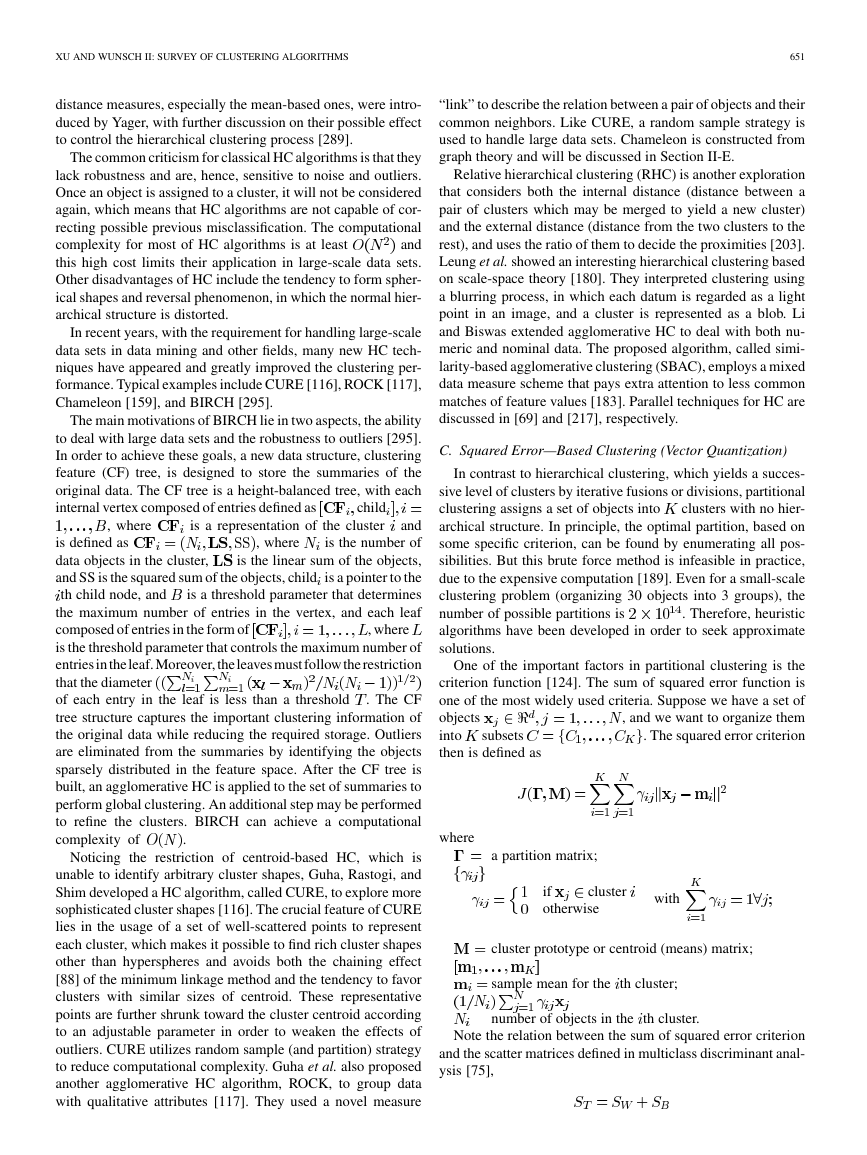

XUANDWUNSCHII:SURVEYOFCLUSTERINGALGORITHMS649TABLEIICOMPUTATIONALCOMPLEXITYOFCLUSTERINGALGORITHMStheory,whichhadalreadybeenwidelyusedinclusteranalysisbeforethe1980s,theothertechniqueshavebeenfindingtheirapplicationsinclusteringjustintherecentdecades.Inspiteoftheshorthistory,muchprogresshasbeenachieved.Notethatthesetechniquescanbeusedforbothhierarchicalandparti-tionalclustering.Consideringthemorefrequentrequirementoftacklingsequentialdatasets,large-scale,andhigh-dimensionaldatasetsinmanycurrentapplications,wereviewclusteringalgorithmsfortheminthefollowingthreeparts.Wefocusparticularattentiononclusteringalgorithmsappliedinbioin-formatics.Weoffermoredetaileddiscussiononhowtoidentifyappropriatenumberofclusters,whichisparticularlyimportantinclustervalidity,inthelastpartofthesection.A.DistanceandSimilarityMeasuresItisnaturaltoaskwhatkindofstandardsweshouldusetodeterminethecloseness,orhowtomeasurethedistance(dis-similarity)orsimilaritybetweenapairofobjects,anobjectandacluster,orapairofclusters.Inthenextsectiononhierarchicalclustering,wewillillustratelinkagemetricsformeasuringprox-imitybetweenclusters.Usually,aprototypeisusedtorepresentaclustersothatitcanbefurtherprocessedlikeotherobjects.Here,wefocusonreviewingmeasureapproachesbetweenin-dividualsduetothepreviousconsideration.Adataobjectisdescribedbyasetoffeatures,usuallyrepre-sentedasamultidimensionalvector.Thefeaturescanbequan-titativeorqualitative,continuousorbinary,nominalorordinal,whichdeterminethecorrespondingmeasuremechanisms.Adistanceordissimilarityfunctiononadatasetisdefinedtosatisfythefollowingconditions.1)Symmetry.;2)Positivity.foralland.Ifconditions3)Triangleinequality.forallandand(4)Reflexivity.alsohold,itiscalledametric.Likewise,asimilarityfunctionisdefinedtosatisfythecon-ditionsinthefollowing.1)Symmetry.;2)Positivity.,foralland.Ifitalsosatisfiesconditions3)forallandand(4),itiscalledasimi-laritymetric.Foradatasetwithinputpatterns,wecandefineansymmetricmatrix,calledproximitymatrix,whosethelementrepresentsthesimilarityordissimilaritymeasureforthethandthpatterns.Typically,distancefunctionsareusedtomeasurecontinuousfeatures,whilesimilaritymeasuresaremoreimportantforqual-itativevariables.Wesummarizesometypicalmeasuresforcon-tinuousfeaturesinTableI.Theselectionofdifferentmeasuresisproblemdependent.Forbinaryfeatures,asimilaritymeasureiscommonlyused(dissimilaritymeasurescanbeobtainedbysimplyusing).Supposeweusetwobinarysub-scriptstocountfeaturesintwoobjects.andrepresentthenumberofsimultaneousabsenceorpresenceoffeaturesintwoobjects,andandcountthefeaturespresentonlyinoneobject.Thentwotypesofcommonlyusedsimilaritymea-suresfordatapointsandareillustratedinthefollowing.•simplematchingcoefficientRogersandTanimotomeasure.GowerandLegendremeasureThesemeasurescomputethematchbetweentwoobjectsdirectly.Unmatchedpairsareweightedbasedontheircontributiontothesimilarity.•JaccardcoefficientSokalandSneathmeasure.GowerandLegendremeasure�

650IEEETRANSACTIONSONNEURALNETWORKS,VOL.16,NO.3,MAY2005Thesemeasuresfocusontheco-occurrencefeatureswhileignoringtheeffectofco-absence.Fornominalfeaturesthathavemorethantwostates,asimplestrategyneedstomapthemintonewbinaryfeatures[161],whileamoreeffectivemethodutilizesthematchingcriterionwhereifanddonotmatchifandmatch[88].Ordinalfeaturesordermultiplestatesaccordingtosomestandardandcanbecomparedbyusingcontinuousdissimi-laritymeasuresdiscussedin[161].EditdistanceforalphabeticsequencesisdiscussedinSectionII-J.Morediscussiononse-quencesandstringscomparisonscanbefoundin[120]and[236].Generally,forobjectsconsistingofmixedvariables,wecanmapallthesevariablesintotheinterval(0,1)andusemea-suresliketheEuclideanmetric.Alternatively,wecantrans-formthemintobinaryvariablesandusebinarysimilarityfunc-tions.Thedrawbackofthesemethodsistheinformationloss.AmorepowerfulmethodwasdescribedbyGowerintheformof,whereindicatesthesimilarityforthethfeatureandisa0–1coefficientbasedonwhetherthemeasureofthetwoobjectsismissing[88],[112].B.HierarchicalClusteringHierarchicalclustering(HC)algorithmsorganizedataintoahierarchicalstructureaccordingtotheproximitymatrix.There-sultsofHCareusuallydepictedbyabinarytreeordendrogram.Therootnodeofthedendrogramrepresentsthewholedatasetandeachleafnodeisregardedasadataobject.Theinterme-diatenodes,thus,describetheextentthattheobjectsareprox-imaltoeachother;andtheheightofthedendrogramusuallyexpressesthedistancebetweeneachpairofobjectsorclusters,oranobjectandacluster.Theultimateclusteringresultscanbeobtainedbycuttingthedendrogramatdifferentlevels.Thisrepresentationprovidesveryinformativedescriptionsandvisu-alizationforthepotentialdataclusteringstructures,especiallywhenrealhierarchicalrelationsexistinthedata,likethedatafromevolutionaryresearchondifferentspeciesoforganizms.HCalgorithmsaremainlyclassifiedasagglomerativemethodsanddivisivemethods.Agglomerativeclusteringstartswithclustersandeachofthemincludesexactlyoneobject.Aseriesofmergeoperationsarethenfollowedoutthatfinallyleadallobjectstothesamegroup.Divisiveclusteringproceedsinanoppositeway.Inthebeginning,theentiredatasetbelongstoaclusterandaproceduresuccessivelydividesituntilallclus-tersaresingletonclusters.Foraclusterwithobjects,therearepossibletwo-subsetdivisions,whichisveryex-pensiveincomputation[88].Therefore,divisiveclusteringisnotcommonlyusedinpractice.Wefocusontheagglomera-tiveclusteringinthefollowingdiscussionandsomeofdivisiveclusteringapplicationsforbinarydatacanbefoundin[88].Twodivisiveclusteringalgorithms,namedMONAandDIANA,aredescribedin[161].Thegeneralagglomerativeclusteringcanbesummarizedbythefollowingprocedure.1)Startwithsingletonclusters.Calculatetheprox-imitymatrixfortheclusters.2)Searchtheminimaldistancewhereisthedistancefunctiondiscussedbe-fore,intheproximitymatrix,andcombineclusterandtoformanewcluster.3)Updatetheproximitymatrixbycomputingthedis-tancesbetweenthenewclusterandtheotherclusters.4)Repeatsteps2)–3)untilallobjectsareinthesamecluster.Basedonthedifferentdefinitionsfordistancebetweentwoclusters,therearemanyagglomerativeclusteringalgorithms.Thesimplestandmostpopularmethodsincludesinglelinkage[256]andcompletelinkagetechnique[258].Forthesinglelinkagemethod,thedistancebetweentwoclustersisdeter-minedbythetwoclosestobjectsindifferentclusters,soitisalsocallednearestneighbormethod.Onthecontrary,thecompletelinkagemethodusesthefarthestdistanceofapairofobjectstodefineinter-clusterdistance.BoththesinglelinkageandthecompletelinkagemethodcanbegeneralizedbytherecurrenceformulaproposedbyLanceandWilliams[178]aswhereisthedistancefunctionand,andarecoefficientsthattakevaluesdependentontheschemeused.Theformuladescribesthedistancebetweenaclusterandanewclusterformedbythemergeoftwoclustersand.Notethatwhen,and,theformulabecomeswhichcorrespondstothesinglelinkagemethod.Whenand,theformulaiswhichcorrespondstothecompletelinkagemethod.Severalmorecomplicatedagglomerativeclusteringalgo-rithms,includinggroupaveragelinkage,medianlinkage,centroidlinkage,andWard’smethod,canalsobeconstructedbyselectingappropriatecoefficientsintheformula.Adetailedtabledescribingthecoefficientvaluesfordifferentalgorithmsisofferedin[150]and[210].Singlelinkage,completelinkageandaveragelinkageconsiderallpointsofapairofclusters,whencalculatingtheirinter-clusterdistance,andarealsocalledgraphmethods.Theothersarecalledgeometricmethodssincetheyusegeometriccenterstorepresentclustersanddeterminetheirdistances.Remarksonimportantfeaturesandpropertiesofthesemethodsaresummarizedin[88].Moreinter-cluster�

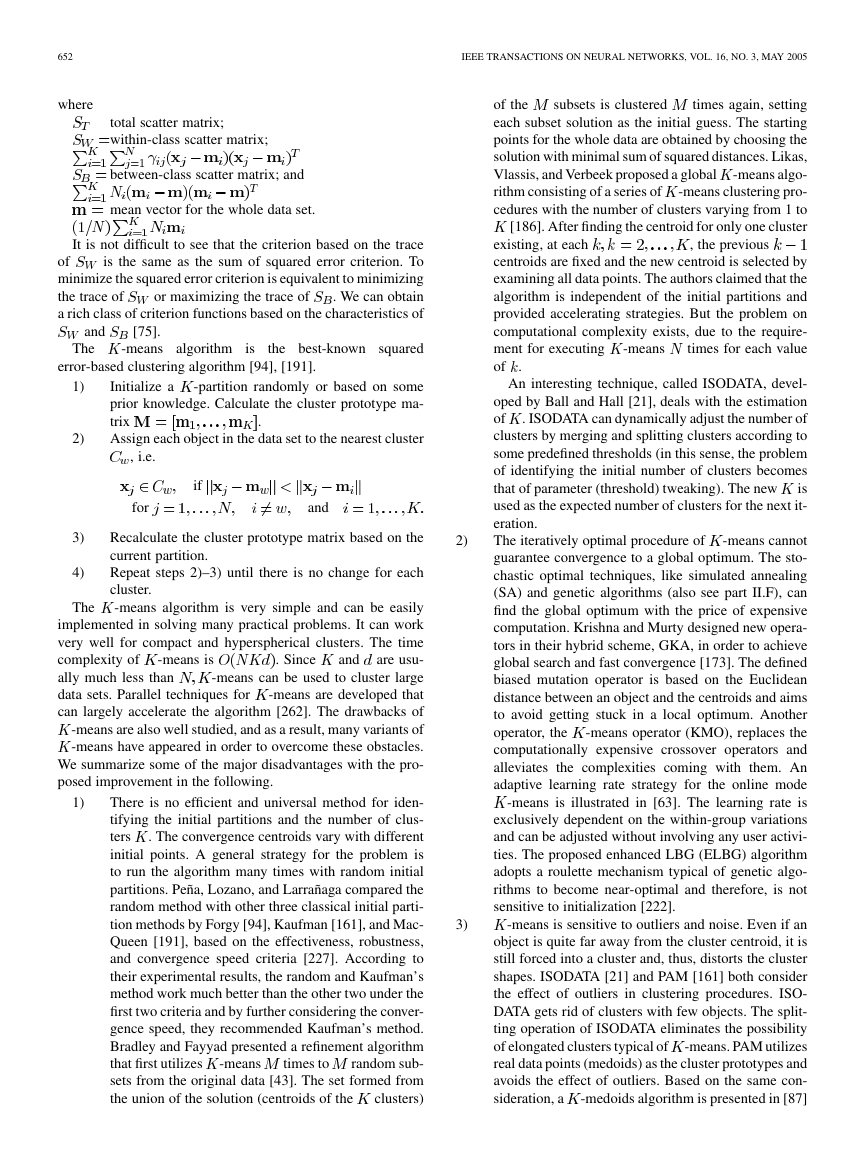

XUANDWUNSCHII:SURVEYOFCLUSTERINGALGORITHMS651distancemeasures,especiallythemean-basedones,wereintro-ducedbyYager,withfurtherdiscussionontheirpossibleeffecttocontrolthehierarchicalclusteringprocess[289].ThecommoncriticismforclassicalHCalgorithmsisthattheylackrobustnessandare,hence,sensitivetonoiseandoutliers.Onceanobjectisassignedtoacluster,itwillnotbeconsideredagain,whichmeansthatHCalgorithmsarenotcapableofcor-rectingpossiblepreviousmisclassification.ThecomputationalcomplexityformostofHCalgorithmsisatleastandthishighcostlimitstheirapplicationinlarge-scaledatasets.OtherdisadvantagesofHCincludethetendencytoformspher-icalshapesandreversalphenomenon,inwhichthenormalhier-archicalstructureisdistorted.Inrecentyears,withtherequirementforhandlinglarge-scaledatasetsindataminingandotherfields,manynewHCtech-niqueshaveappearedandgreatlyimprovedtheclusteringper-formance.TypicalexamplesincludeCURE[116],ROCK[117],Chameleon[159],andBIRCH[295].ThemainmotivationsofBIRCHlieintwoaspects,theabilitytodealwithlargedatasetsandtherobustnesstooutliers[295].Inordertoachievethesegoals,anewdatastructure,clusteringfeature(CF)tree,isdesignedtostorethesummariesoftheoriginaldata.TheCFtreeisaheight-balancedtree,witheachinternalvertexcomposedofentriesdefinedaschild,whereisarepresentationoftheclusterandisdefinedas,whereisthenumberofdataobjectsinthecluster,isthelinearsumoftheobjects,andSSisthesquaredsumoftheobjects,childisapointertothethchildnode,andisathresholdparameterthatdeterminesthemaximumnumberofentriesinthevertex,andeachleafcomposedofentriesintheformof,whereisthethresholdparameterthatcontrolsthemaximumnumberofentriesintheleaf.Moreover,theleavesmustfollowtherestrictionthatthediameterofeachentryintheleafislessthanathreshold.TheCFtreestructurecapturestheimportantclusteringinformationoftheoriginaldatawhilereducingtherequiredstorage.Outliersareeliminatedfromthesummariesbyidentifyingtheobjectssparselydistributedinthefeaturespace.AftertheCFtreeisbuilt,anagglomerativeHCisappliedtothesetofsummariestoperformglobalclustering.Anadditionalstepmaybeperformedtorefinetheclusters.BIRCHcanachieveacomputationalcomplexityof.Noticingtherestrictionofcentroid-basedHC,whichisunabletoidentifyarbitraryclustershapes,Guha,Rastogi,andShimdevelopedaHCalgorithm,calledCURE,toexploremoresophisticatedclustershapes[116].ThecrucialfeatureofCUREliesintheusageofasetofwell-scatteredpointstorepresenteachcluster,whichmakesitpossibletofindrichclustershapesotherthanhyperspheresandavoidsboththechainingeffect[88]oftheminimumlinkagemethodandthetendencytofavorclusterswithsimilarsizesofcentroid.Theserepresentativepointsarefurthershrunktowardtheclustercentroidaccordingtoanadjustableparameterinordertoweakentheeffectsofoutliers.CUREutilizesrandomsample(andpartition)strategytoreducecomputationalcomplexity.Guhaetal.alsoproposedanotheragglomerativeHCalgorithm,ROCK,togroupdatawithqualitativeattributes[117].Theyusedanovelmeasure“link”todescribetherelationbetweenapairofobjectsandtheircommonneighbors.LikeCURE,arandomsamplestrategyisusedtohandlelargedatasets.ChameleonisconstructedfromgraphtheoryandwillbediscussedinSectionII-E.Relativehierarchicalclustering(RHC)isanotherexplorationthatconsidersboththeinternaldistance(distancebetweenapairofclusterswhichmaybemergedtoyieldanewcluster)andtheexternaldistance(distancefromthetwoclusterstotherest),andusestheratioofthemtodecidetheproximities[203].Leungetal.showedaninterestinghierarchicalclusteringbasedonscale-spacetheory[180].Theyinterpretedclusteringusingablurringprocess,inwhicheachdatumisregardedasalightpointinanimage,andaclusterisrepresentedasablob.LiandBiswasextendedagglomerativeHCtodealwithbothnu-mericandnominaldata.Theproposedalgorithm,calledsimi-larity-basedagglomerativeclustering(SBAC),employsamixeddatameasureschemethatpaysextraattentiontolesscommonmatchesoffeaturevalues[183].ParalleltechniquesforHCarediscussedin[69]and[217],respectively.C.SquaredError—BasedClustering(VectorQuantization)Incontrasttohierarchicalclustering,whichyieldsasucces-sivelevelofclustersbyiterativefusionsordivisions,partitionalclusteringassignsasetofobjectsintoclusterswithnohier-archicalstructure.Inprinciple,theoptimalpartition,basedonsomespecificcriterion,canbefoundbyenumeratingallpos-sibilities.Butthisbruteforcemethodisinfeasibleinpractice,duetotheexpensivecomputation[189].Evenforasmall-scaleclusteringproblem(organizing30objectsinto3groups),thenumberofpossiblepartitionsis.Therefore,heuristicalgorithmshavebeendevelopedinordertoseekapproximatesolutions.Oneoftheimportantfactorsinpartitionalclusteringisthecriterionfunction[124].Thesumofsquarederrorfunctionisoneofthemostwidelyusedcriteria.Supposewehaveasetofobjects,andwewanttoorganizethemintosubsets.Thesquarederrorcriterionthenisdefinedaswhereapartitionmatrix;ifclusterotherwisewithclusterprototypeorcentroid(means)matrix;samplemeanforthethcluster;numberofobjectsinthethcluster.Notetherelationbetweenthesumofsquarederrorcriterionandthescattermatricesdefinedinmulticlassdiscriminantanal-ysis[75],�

652IEEETRANSACTIONSONNEURALNETWORKS,VOL.16,NO.3,MAY2005wheretotalscattermatrix;within-classscattermatrix;between-classscattermatrix;andmeanvectorforthewholedataset.Itisnotdifficulttoseethatthecriterionbasedonthetraceofisthesameasthesumofsquarederrorcriterion.Tominimizethesquarederrorcriterionisequivalenttominimizingthetraceoformaximizingthetraceof.Wecanobtainarichclassofcriterionfunctionsbasedonthecharacteristicsofand[75].The-meansalgorithmisthebest-knownsquarederror-basedclusteringalgorithm[94],[191].1)Initializea-partitionrandomlyorbasedonsomepriorknowledge.Calculatetheclusterprototypema-trix.2)Assigneachobjectinthedatasettothenearestcluster,i.e.ifforand3)Recalculatetheclusterprototypematrixbasedonthecurrentpartition.4)Repeatsteps2)–3)untilthereisnochangeforeachcluster.The-meansalgorithmisverysimpleandcanbeeasilyimplementedinsolvingmanypracticalproblems.Itcanworkverywellforcompactandhypersphericalclusters.Thetimecomplexityof-meansis.Sinceandareusu-allymuchlessthan-meanscanbeusedtoclusterlargedatasets.Paralleltechniquesfor-meansaredevelopedthatcanlargelyacceleratethealgorithm[262].Thedrawbacksof-meansarealsowellstudied,andasaresult,manyvariantsof-meanshaveappearedinordertoovercometheseobstacles.Wesummarizesomeofthemajordisadvantageswiththepro-posedimprovementinthefollowing.1)Thereisnoefficientanduniversalmethodforiden-tifyingtheinitialpartitionsandthenumberofclus-ters.Theconvergencecentroidsvarywithdifferentinitialpoints.Ageneralstrategyfortheproblemistorunthealgorithmmanytimeswithrandominitialpartitions.Peña,Lozano,andLarrañagacomparedtherandommethodwithotherthreeclassicalinitialparti-tionmethodsbyForgy[94],Kaufman[161],andMac-Queen[191],basedontheeffectiveness,robustness,andconvergencespeedcriteria[227].Accordingtotheirexperimentalresults,therandomandKaufman’smethodworkmuchbetterthantheothertwounderthefirsttwocriteriaandbyfurtherconsideringtheconver-gencespeed,theyrecommendedKaufman’smethod.BradleyandFayyadpresentedarefinementalgorithmthatfirstutilizes-meanstimestorandomsub-setsfromtheoriginaldata[43].Thesetformedfromtheunionofthesolution(centroidsoftheclusters)ofthesubsetsisclusteredtimesagain,settingeachsubsetsolutionastheinitialguess.Thestartingpointsforthewholedataareobtainedbychoosingthesolutionwithminimalsumofsquareddistances.Likas,Vlassis,andVerbeekproposedaglobal-meansalgo-rithmconsistingofaseriesof-meansclusteringpro-cedureswiththenumberofclustersvaryingfrom1to[186].Afterfindingthecentroidforonlyoneclusterexisting,ateach,thepreviouscentroidsarefixedandthenewcentroidisselectedbyexaminingalldatapoints.Theauthorsclaimedthatthealgorithmisindependentoftheinitialpartitionsandprovidedacceleratingstrategies.Buttheproblemoncomputationalcomplexityexists,duetotherequire-mentforexecuting-meanstimesforeachvalueof.Aninterestingtechnique,calledISODATA,devel-opedbyBallandHall[21],dealswiththeestimationof.ISODATAcandynamicallyadjustthenumberofclustersbymergingandsplittingclustersaccordingtosomepredefinedthresholds(inthissense,theproblemofidentifyingtheinitialnumberofclustersbecomesthatofparameter(threshold)tweaking).Thenewisusedastheexpectednumberofclustersforthenextit-eration.2)Theiterativelyoptimalprocedureof-meanscannotguaranteeconvergencetoaglobaloptimum.Thesto-chasticoptimaltechniques,likesimulatedannealing(SA)andgeneticalgorithms(alsoseepartII.F),canfindtheglobaloptimumwiththepriceofexpensivecomputation.KrishnaandMurtydesignednewopera-torsintheirhybridscheme,GKA,inordertoachieveglobalsearchandfastconvergence[173].ThedefinedbiasedmutationoperatorisbasedontheEuclideandistancebetweenanobjectandthecentroidsandaimstoavoidgettingstuckinalocaloptimum.Anotheroperator,the-meansoperator(KMO),replacesthecomputationallyexpensivecrossoveroperatorsandalleviatesthecomplexitiescomingwiththem.Anadaptivelearningratestrategyfortheonlinemode-meansisillustratedin[63].Thelearningrateisexclusivelydependentonthewithin-groupvariationsandcanbeadjustedwithoutinvolvinganyuseractivi-ties.TheproposedenhancedLBG(ELBG)algorithmadoptsaroulettemechanismtypicalofgeneticalgo-rithmstobecomenear-optimalandtherefore,isnotsensitivetoinitialization[222].3)-meansissensitivetooutliersandnoise.Evenifanobjectisquitefarawayfromtheclustercentroid,itisstillforcedintoaclusterand,thus,distortstheclustershapes.ISODATA[21]andPAM[161]bothconsidertheeffectofoutliersinclusteringprocedures.ISO-DATAgetsridofclusterswithfewobjects.Thesplit-tingoperationofISODATAeliminatesthepossibilityofelongatedclusterstypicalof-means.PAMutilizesrealdatapoints(medoids)astheclusterprototypesandavoidstheeffectofoutliers.Basedonthesamecon-sideration,a-medoidsalgorithmispresentedin[87]�

2023年江西萍乡中考道德与法治真题及答案.doc

2023年江西萍乡中考道德与法治真题及答案.doc 2012年重庆南川中考生物真题及答案.doc

2012年重庆南川中考生物真题及答案.doc 2013年江西师范大学地理学综合及文艺理论基础考研真题.doc

2013年江西师范大学地理学综合及文艺理论基础考研真题.doc 2020年四川甘孜小升初语文真题及答案I卷.doc

2020年四川甘孜小升初语文真题及答案I卷.doc 2020年注册岩土工程师专业基础考试真题及答案.doc

2020年注册岩土工程师专业基础考试真题及答案.doc 2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc

2023-2024学年福建省厦门市九年级上学期数学月考试题及答案.doc 2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc

2021-2022学年辽宁省沈阳市大东区九年级上学期语文期末试题及答案.doc 2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc

2022-2023学年北京东城区初三第一学期物理期末试卷及答案.doc 2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc

2018上半年江西教师资格初中地理学科知识与教学能力真题及答案.doc 2012年河北国家公务员申论考试真题及答案-省级.doc

2012年河北国家公务员申论考试真题及答案-省级.doc 2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc

2020-2021学年江苏省扬州市江都区邵樊片九年级上学期数学第一次质量检测试题及答案.doc 2022下半年黑龙江教师资格证中学综合素质真题及答案.doc

2022下半年黑龙江教师资格证中学综合素质真题及答案.doc