
PROBABILITY AND STATISTICAL INFERENCE

Ninth Edition

Robert V. Hogg

Elliot A. Tanis

Dale L. Zimmerman

Boston Columbus Indianapolis New York San Francisco

Upper Saddle River Amsterdam Cape Town Dubai

London Madrid Milan Munich Paris Montreal Toronto

Delhi Mexico City Sao Paulo Sydney Hong Kong Seoul

Singapore Taipei Tokyo

Editor in Chief: Deirdre Lynch

Acquisitions Editor: Christopher Cummings

Sponsoring Editor: Christina Lepre

Assistant Editor: Sonia Ashraf

Marketing Manager: Erin Lane

Marketing Assistant: Kathleen DeChavez

Senior Managing Editor: Karen Wernholm

Senior Production Editor: Beth Houston

Procurement Manager: Vincent Scelta

Procurement Specialist: Carol Melville

Associate Director of Design, USHE EMSS/HSC/EDU: Andrea Nix

Art Director: Heather Scott

Interior Designer: Tamara Newnam

Cover Designer: Heather Scott

Cover Image: Agsandrew/Shutterstock

Full-Service Project Management: Integra Software Services

Composition: Integra Software Services

Copyright © 2015, 2010, 2006 by Pearson Education, Inc. All rights reserved. Manufactured in the United States of America. This publication is protected by Copyright, and permission should be obtained from the publisher prior to any prohibited reproduction, storage in a retrieval system, or transmission in any form or by any means, electronic, mechanical, photocopying, recording, or likewise. To obtain permission(s) to use material from this work, please submit a written request to Pearson Higher Education, Rights and Contracts Department, One Lake Street, Upper Saddle River, NJ 07458, or fax your request to 201-236-3290.

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed in initial caps or all caps.

Library of Congress Cataloging-in-Publication Data

Hogg, Robert V.

Probability and Statistical Inference/

Robert V. Hogg, Elliot A. Tanis, Dale Zimmerman. – 9th ed.

p. cm.

ISBN 978-0-321-92327-1

1. Mathematical statistics. I. Hogg, Robert V., II. Tanis, Elliot A. III. Title.

QA276.H59 2013

519.5–dc23

2011034906

10 9 8 7 6 5 4 3 2 1 EBM 17 16 15 14 13

www.pearsonhighered.com

ISBN-10: 0-321-92327-8

ISBN-13: 978-0-321-92327-1

�

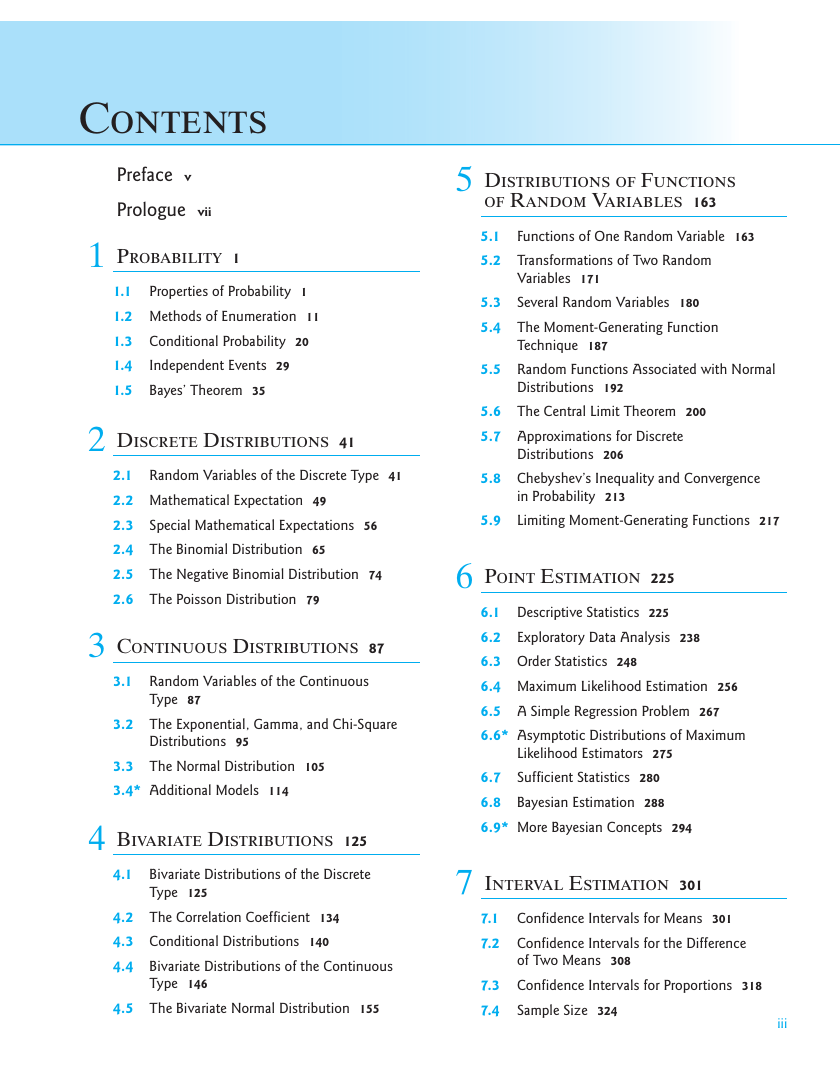

Contents

Preface v

Prologue vii

1 Probability 1

1.1 Properties of Probability 1

1.2 Methods of Enumeration 11

1.3 Conditional Probability 20

1.4 Independent Events 29

1.5 Bayes’ Theorem 35

2 Discrete Distributions 41

2.1 Random Variables of the Discrete Type 41

2.2 Mathematical Expectation 49

2.3 Special Mathematical Expectations 56

2.4 The Binomial Distribution 65

2.5 The Negative Binomial Distribution 74

2.6 The Poisson Distribution 79

3 Continuous Distributions 87

3.1 Random Variables of the Continuous Type 87

3.2 The Exponential, Gamma, and Chi-Square Distributions 95

3.3 The Normal Distribution 105

3.4* Additional Models 114

4 Bivariate Distributions 125

4.1 Bivariate Distributions of the Discrete Type 125

4.2 The Correlation Coefficient 134

4.3 Conditional Distributions 140

4.4 Bivariate Distributions of the Continuous Type 146

4.5 The Bivariate Normal Distribution 155

5 Distributions of Functions of Random Variables 163

5.1 Functions of One Random Variable 163

5.2 Transformations of Two Random Variables 171

5.3 Several Random Variables 180

5.4 The Moment-Generating Function Technique 187

5.5 Random Functions Associated with Normal Distributions 192

5.6 The Central Limit Theorem 200

5.7 Approximations for Discrete Distributions 206

5.8 Chebyshev’s Inequality and Convergence in Probability 213

5.9 Limiting Moment-Generating Functions 217

6 Point Estimation 225

6.1 Descriptive Statistics 225

6.2 Exploratory Data Analysis 238

6.3 Order Statistics 248

6.4 Maximum Likelihood Estimation 256

6.5 A Simple Regression Problem 267

6.6* Asymptotic Distributions of Maximum Likelihood Estimators 275

6.7 Sufficient Statistics 280

6.8 Bayesian Estimation 288

6.9* More Bayesian Concepts 294

7 Interval Estimation 301

7.1 Confidence Intervals for Means 301

7.2 Confidence Intervals for the Difference of Two Means 308

7.3 Confidence Intervals for Proportions 318

7.4 Sample Size 324


7.5 Distribution-Free Confidence Intervals for Percentiles 331

7.6* More Regression 338

7.7* Resampling Methods 347

8 Tests of Statistical Hypotheses 355

8.1 Tests About One Mean 355

8.2 Tests of the Equality of Two Means 365

8.3 Tests About Proportions 373

8.4 The Wilcoxon Tests 381

8.5 Power of a Statistical Test 392

8.6 Best Critical Regions 399

8.7* Likelihood Ratio Tests 406

9 More Tests 415

9.1 Chi-Square Goodness-of-Fit Tests 415

9.2 Contingency Tables 424

9.3 One-Factor Analysis of Variance 435

9.4 Two-Way Analysis of Variance 445

9.5* General Factorial and 2^k Factorial Designs 455

9.6* Tests Concerning Regression and Correlation 462

9.7* Statistical Quality Control 467

Epilogue 479

Appendices

A References 481

B Tables 483

C Answers to Odd-Numbered Exercises 509

D Review of Selected Mathematical Techniques 521

D.1 Algebra of Sets 521

D.2 Mathematical Tools for the Hypergeometric Distribution 525

D.3 Limits 528

D.4 Infinite Series 529

D.5 Integration 533

D.6 Multivariate Calculus 535

Index 541


Preface

In this Ninth Edition of Probability and Statistical Inference, Bob Hogg and Elliot Tanis are excited to add a third person to their writing team to contribute to the continued success of this text. Dale Zimmerman is the Robert V. Hogg Professor in the Department of Statistics and Actuarial Science at the University of Iowa. Dale has rewritten several parts of the text, making the terminology more consistent and contributing much to a substantial revision. The text is designed for a two-semester course, but it can be adapted for a one-semester course. A good calculus background is needed, but no previous study of probability or statistics is required.

CONTENT AND COURSE PLANNING

In this revision, the first five chapters on probability are much the same as in the eighth edition. They include the following topics: probability, conditional probability, independence, Bayes’ theorem, discrete and continuous distributions, certain mathematical expectations, bivariate distributions along with marginal and conditional distributions, correlation, functions of random variables and their distributions, including the moment-generating function technique, and the central limit theorem. While this strong probability coverage of the course is important for all students, it has been particularly helpful to actuarial students who are studying for Exam P in the Society of Actuaries’ series (or Exam 1 of the Casualty Actuarial Society).

The greatest change to this edition is in the statistical inference coverage, now Chapters 6–9. The first two of these chapters provide an excellent presentation of estimation. Chapter 6 covers point estimation, including descriptive and order statistics, maximum likelihood estimators and their distributions, sufficient statistics, and Bayesian estimation. Interval estimation is covered in Chapter 7, including the topics of confidence intervals for means and proportions, distribution-free confidence intervals for percentiles, confidence intervals for regression coefficients, and resampling methods (in particular, bootstrapping).

The last two chapters are about tests of statistical hypotheses. Chapter 8 considers terminology and standard tests on means and proportions, the Wilcoxon tests, the power of a test, best critical regions (Neyman/Pearson), and likelihood ratio tests. The topics in Chapter 9 are standard chi-square tests, analysis of variance including general factorial designs, and some procedures associated with regression, correlation, and statistical quality control.

The first semester of the course should contain most of the topics in Chapters 1–5. The second semester includes some topics omitted there and many of those in Chapters 6–9. A more basic course might omit some of the (optional) starred sections, but we believe that the order of topics will give the instructor the flexibility needed in his or her course. The usual nonparametric and Bayesian techniques are placed at appropriate places in the text rather than in separate chapters. We find that many persons like the applications associated with statistical quality control in the last section. Overall, one of the authors, Hogg, believes that the presentation (at a somewhat reduced mathematical level) is much like that given in the earlier editions of Hogg and Craig (see References).


The Prologue suggests many fields in which statistical methods can be used. In the Epilogue, the importance of understanding variation is stressed, particularly for its need in continuous quality improvement as described in the usual Six-Sigma programs. At the end of each chapter we give some interesting historical comments, which have proved to be very worthwhile in the past editions.

The answers given in this text for questions that involve the standard distributions were calculated using our probability tables, which, of course, are rounded off for printing. If you use a statistical package, your answers may differ slightly from those given.

ANCILLARIES

Data sets from this textbook are available on Pearson Education’s Math & Statistics Student Resources website: http://www.pearsonhighered.com/mathstatsresources.

An Instructor’s Solutions Manual containing worked-out solutions to the even-numbered exercises in the text is available for download from Pearson Education’s Instructor Resource Center at www.pearsonhighered.com/irc. Some of the numerical exercises were solved with Maple. For additional exercises that involve simulations, a separate manual, Probability & Statistics: Explorations with MAPLE, second edition, by Zaven Karian and Elliot Tanis, is also available for download from Pearson Education’s Instructor Resource Center. Several exercises in that manual also make use of the power of Maple as a computer algebra system.

If you find any errors in this text, please send them to tanis@hope.edu so that they can be corrected in a future printing. These errata will also be posted on http://www.math.hope.edu/tanis/.

ACKNOWLEDGMENTS

We wish to thank our colleagues, students, and friends for many suggestions and for their generosity in supplying data for exercises and examples. In particular, we would like to thank the reviewers of the eighth edition who made suggestions for this edition. They are Steven T. Garren from James Madison University, Daniel C. Weiner from Boston University, and Kyle Siegrist from the University of Alabama in Huntsville. Mark Mills from Central College in Iowa also made some helpful comments. We also acknowledge the excellent suggestions from our copy editor, Kristen Cassereau Ng, and the fine work of our accuracy checkers, Kyle Siegrist and Steven Garren. We also thank the University of Iowa and Hope College for providing office space and encouragement. Finally, our families, through nine editions, have been most understanding during the preparation of all of this material. We would especially like to thank our wives, Ann, Elaine, and Bridget. We truly appreciate their patience and needed their love.

Robert V. Hogg

Elliot A. Tanis

tanis@hope.edu

Dale L. Zimmerman

dale-zimmerman@uiowa.edu


Prologue

The discipline of statistics deals with the collection and analysis of data. Advances in computing technology, particularly in relation to changes in science and business, have increased the need for more statistical scientists to examine the huge amount of data being collected. We know that data are not equivalent to information. Once data (hopefully of high quality) are collected, there is a strong need for statisticians to make sense of them. That is, data must be analyzed in order to provide information upon which decisions can be made. In light of this great demand, opportunities for the discipline of statistics have never been greater, and there is a special need for more bright young persons to go into statistical science.

If we think of fields in which data play a major part, the list is almost endless: accounting, actuarial science, atmospheric science, biological science, economics, educational measurement, environmental science, epidemiology, finance, genetics, manufacturing, marketing, medicine, pharmaceutical industries, psychology, sociology, sports, and on and on. Because statistics is useful in all of these areas, it really should be taught as an applied science. Nevertheless, to go very far in such an applied science, it is necessary to understand the importance of creating models for each situation under study. Now, no model is ever exactly right, but some are extremely useful as an approximation to the real situation. Most appropriate models in statistics require a certain mathematical background in probability. Accordingly, while alluding to applications in the examples and the exercises, this textbook is really about the mathematics needed for the appreciation of probabilistic models necessary for statistical inferences.

In a sense, statistical techniques are really the heart of the scientific method. Observations are made that suggest conjectures. These conjectures are tested, and data are collected and analyzed, providing information about the truth of the conjectures. Sometimes the conjectures are supported by the data, but often the conjectures need to be modified and more data must be collected to test the modifications, and so on. Clearly, in this iterative process, statistics plays a major role with its emphasis on the proper design and analysis of experiments and the resulting inferences upon which decisions can be made. Through statistics, information is provided that is relevant to taking certain actions, including improving manufactured products, providing better services, marketing new products or services, forecasting energy needs, classifying diseases better, and so on.

Statisticians recognize that there are often small errors in their inferences, and they attempt to quantify the probabilities of those mistakes and make them as small as possible. That these uncertainties even exist is due to the fact that there is variation in the data. Even though experiments are repeated under seemingly the same conditions, the results vary from trial to trial. We try to improve the quality of the data by making them as reliable as possible, but the data simply do not fall on given patterns. In light of this uncertainty, the statistician tries to determine the pattern in the best possible way, always explaining the error structures of the statistical estimates.

This is an important lesson to be learned: Variation is almost everywhere. It is the statistician’s job to understand variation. Often, as in manufacturing, the desire is to reduce variation because the products will be more consistent. In other words, car
